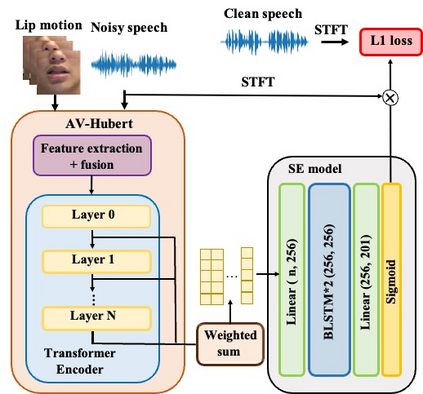

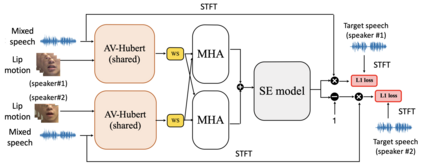

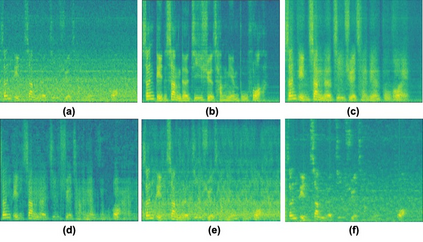

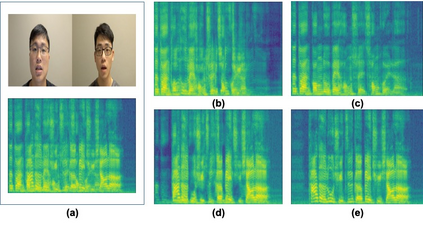

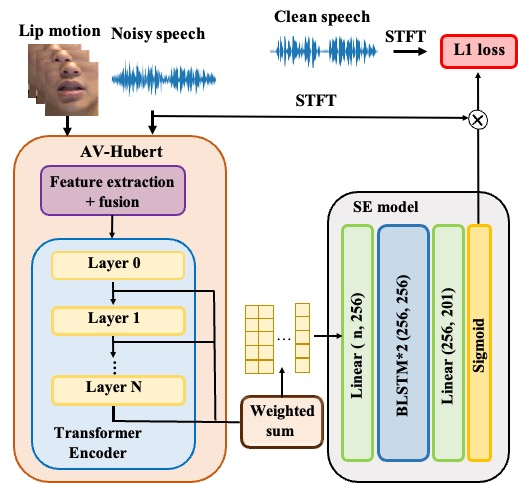

AV-HuBERT, a multi-modal self-supervised learning model, has been shown to be effective for categorical problems such as automatic speech recognition and lip-reading. This suggests that useful audio-visual speech representations can be obtained via utilizing multi-modal self-supervised embeddings. Nevertheless, it is unclear if such representations can be generalized to solve real-world multi-modal AV regression tasks, such as audio-visual speech enhancement (AVSE) and audio-visual speech separation (AVSS). In this study, we leveraged the pre-trained AV-HuBERT model followed by an SE module for AVSE and AVSS. Comparative experimental results demonstrate that our proposed model performs better than the state-of-the-art AVSE and traditional audio-only SE models. In summary, our results confirm the effectiveness of our proposed model for the AVSS task with proper fine-tuning strategies, demonstrating that multi-modal self-supervised embeddings obtained from AV-HUBERT can be generalized to audio-visual regression tasks.

翻译:AV-HuBERT是一种多式自我监督的学习模式,已证明对自动语音识别和唇读等绝对问题十分有效,这意味着可以通过多式自我监督嵌入器获得有用的视听演讲演示,然而,尚不清楚这种演示能否被普遍化,以解决现实世界多式AV回归任务,如视听语音增强和视听语音分离。 在这项研究中,我们利用了AVSE和AVSS的SE模块之后经过预先培训的AV-HuBERT模型。比较实验结果表明,我们提议的模型比AVSE和传统的只听觉SE模型表现得更好。 总之,我们的结果证实了我们提议的AVSS任务模式的有效性,它有适当的微调战略,表明从AV-HUBERT获得的多式自我监督嵌入器可以被普遍化为视听回归任务。