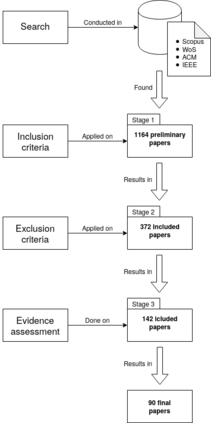

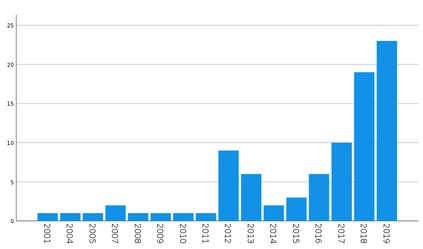

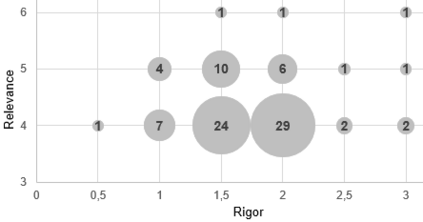

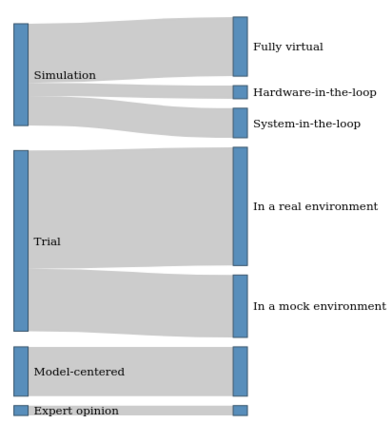

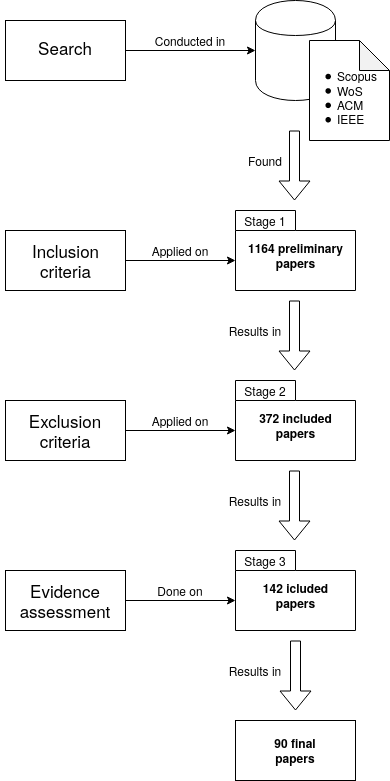

Context: Artificial intelligence (AI) has made its way into everyday activities, particularly through new techniques such as machine learning (ML). These techniques can be implemented with little domain knowledge. This, combined with the difficulty of testing AI systems with traditional methods, has made system trustworthiness a pressing issue.

Objective: This paper studies the methods used to validate practical AI systems reported in the literature. Our goal is to classify and describe the methods that are used in realistic settings to ensure the dependability of AI systems.

Method: A systematic literature review resulted in 90 papers. The systems presented in the papers were analysed based on their domain, task, complexity, and applied validation methods.

Results: The validation methods were synthesized into a taxonomy consisting of trial, simulation, model-centred validation, and expert opinion. Failure monitors, safety channels, redundancy, voting, and input and output restrictions are methods used to continuously validate the systems after deployment.

Conclusions: Our results clarify existing strategies applied to validation. They form a basis for the synthesis, assessment, and refinement of AI system validation in research, and for guidelines for validating individual systems in practice. While the various validation strategies have all been relatively widely applied, only a few studies report on continuous validation.

Keywords: artificial intelligence, machine learning, validation, testing, V&V, systematic literature review.
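The continuous validation methods named in the Results (input and output restrictions, redundancy, voting, failure monitors, and safety channels) can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the function name `guarded_predict`, its parameters, the strict-majority rule, and the mapping of each code step to a term from the abstract are hypothetical and are not taken from the reviewed papers, whose definitions of these methods may differ.

```python
from collections import Counter
from typing import Callable, FrozenSet, Sequence, Tuple


def guarded_predict(
    x: float,
    models: Sequence[Callable[[float], int]],
    input_range: Tuple[float, float] = (0.0, 1.0),
    allowed_outputs: FrozenSet[int] = frozenset({0, 1}),
    safe_default: int = 0,
) -> Tuple[int, str]:
    """Return (decision, reason) for input x using redundant models.

    Hypothetical illustration only; all names and thresholds are assumptions.
    """
    low, high = input_range

    # Input restriction: refuse inputs outside the range the models were
    # validated on (NaN also fails this comparison and is rejected).
    if not (low <= x <= high):
        return safe_default, "input_out_of_range"

    # Redundancy: query every model. Failure monitor: a model that raises
    # an exception simply contributes no vote.
    votes = []
    for model in models:
        try:
            votes.append(model(x))
        except Exception:
            continue

    # Output restriction: discard votes outside the allowed output set.
    votes = [v for v in votes if v in allowed_outputs]

    # Voting: accept a decision only if a strict majority of ALL models
    # (not just the surviving ones) agree on it.
    if votes:
        winner, count = Counter(votes).most_common(1)[0]
        if count * 2 > len(models):
            return winner, "majority_vote"

    # Safety channel: fall back to a predefined safe decision.
    return safe_default, "no_majority"
```

Called with three stand-in models such as `[lambda x: 1, lambda x: 1, lambda x: 0]` and an input of `0.5`, the sketch returns the majority decision; an input outside `input_range`, or too few valid votes, yields `safe_default` instead. Counting the majority against all models rather than only the surviving ones is a deliberately conservative design choice for this illustration.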

