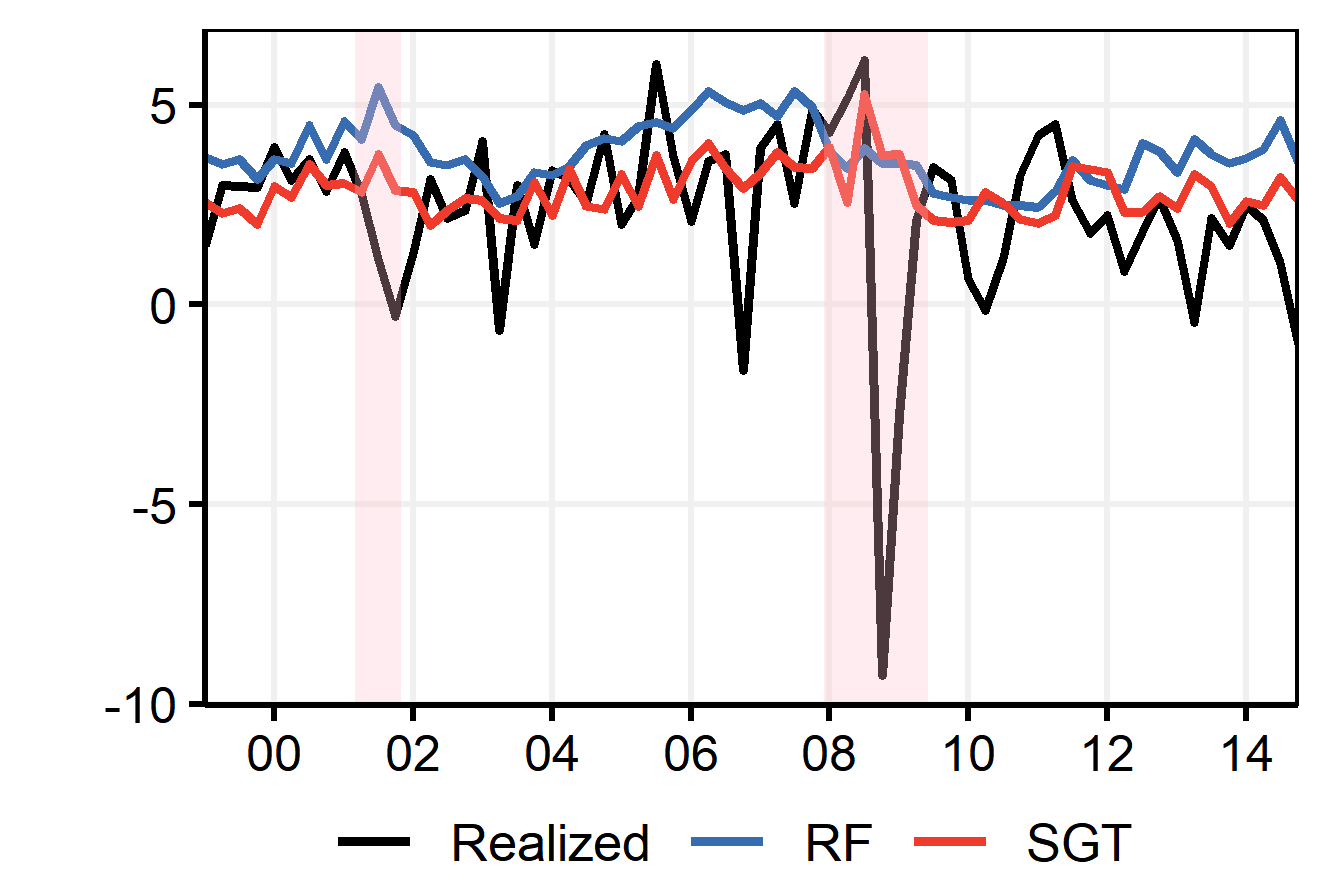

Random Forest's performance can be matched by a single slow-growing tree (SGT), which uses a learning rate to tame CART's greedy algorithm. SGT exploits the view that CART is an extreme case of an iterative weighted least squares procedure. Moreover, a unifying view of Boosted Trees (BT) and Random Forests (RF) is presented. The outcomes of greedy ML algorithms can be improved using either "slow learning" or diversification. SGT applies the former to estimate a single deep tree, and Booging (bagging stochastic BT with a high learning rate) uses the latter with additive shallow trees. The performance of this tree ensemble quaternity (Booging, BT, SGT, RF) is assessed on simulated and real regression tasks.
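To make the definition of Booging concrete, the following is a minimal sketch in plain Python, not the authors' implementation. It assumes a single feature, depth-1 trees (stumps) as the shallow learners, bootstrap resampling for the bagging step, and a learning rate of 0.5 as the "high" value. All function names and the toy data are hypothetical.

```python
import math
import random

def fit_stump(xs, residuals):
    """Least-squares depth-1 tree on one feature: (threshold, left_mean, right_mean)."""
    n = len(xs)
    order = sorted(range(n), key=lambda i: xs[i])
    total, left_sum, best = sum(residuals), 0.0, None
    for k in range(1, n):
        i = order[k - 1]
        left_sum += residuals[i]
        if xs[order[k]] == xs[i]:
            continue  # cannot split between identical x values
        right_sum = total - left_sum
        # Maximizing this quantity is equivalent to minimizing the split's SSE.
        gain = left_sum ** 2 / k + right_sum ** 2 / (n - k)
        if best is None or gain > best[0]:
            threshold = (xs[i] + xs[order[k]]) / 2
            best = (gain, threshold, left_sum / k, right_sum / (n - k))
    if best is None:  # all x identical: fall back to a constant
        return (xs[0], total / n, total / n)
    return best[1:]

def predict_stump(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean

def fit_boosted(xs, ys, n_trees, learning_rate, subsample, rng):
    """Stochastic boosting: each stump is fit to residuals on a random subsample."""
    base = sum(ys) / len(ys)
    preds = [base] * len(xs)
    stumps = []
    m = max(2, int(subsample * len(xs)))
    for _ in range(n_trees):
        idx = rng.sample(range(len(xs)), m)
        stump = fit_stump([xs[i] for i in idx], [ys[i] - preds[i] for i in idx])
        stumps.append(stump)
        preds = [p + learning_rate * predict_stump(stump, x) for p, x in zip(preds, xs)]
    return base, learning_rate, stumps

def predict_boosted(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)

def fit_booging(xs, ys, n_bags=10, n_trees=50, learning_rate=0.5, subsample=0.5, seed=0):
    """Bagging of stochastic boosted models, each trained on a bootstrap sample."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_bags):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap (an assumption)
        models.append(fit_boosted([xs[i] for i in idx], [ys[i] for i in idx],
                                  n_trees, learning_rate, subsample, rng))
    return models

def predict_booging(models, x):
    return sum(predict_boosted(m, x) for m in models) / len(models)

# Toy regression task (hypothetical data): noisy sine curve.
data_rng = random.Random(1)
xs = [i / 50 for i in range(100)]
ys = [math.sin(3 * x) + data_rng.gauss(0, 0.1) for x in xs]

models = fit_booging(xs, ys)
mse = sum((predict_booging(models, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)
mean_y = sum(ys) / len(ys)
baseline = sum((y - mean_y) ** 2 for y in ys) / len(ys)  # MSE of predicting the mean
```

In this sketch the diversification comes from averaging several aggressively boosted models, each of which sees a different resample, rather than from lowering the learning rate within one boosted model.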
