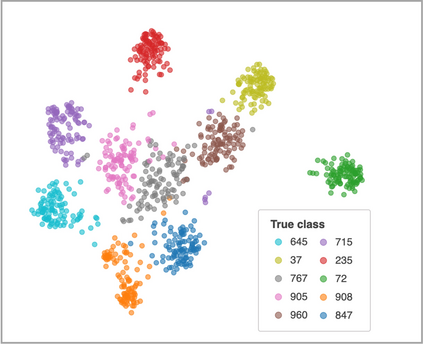

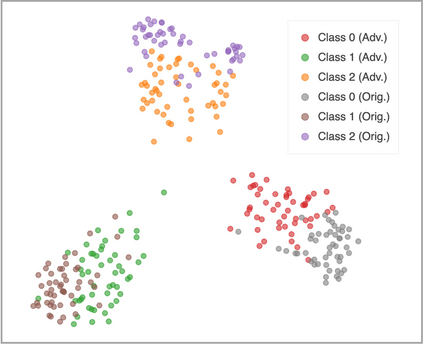

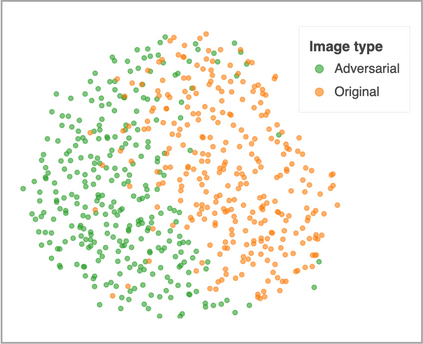

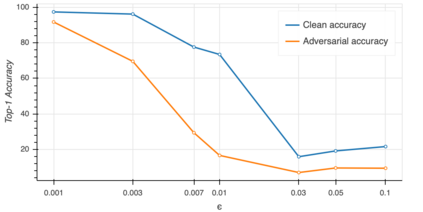

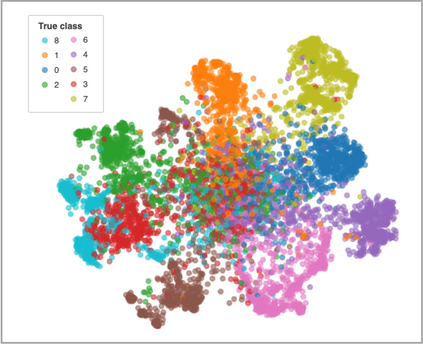

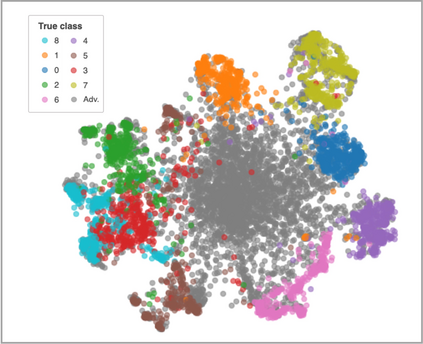

This work presents the first analysis of the adversarial robustness of self-supervised Vision Transformers trained using DINO. First, we evaluate whether features learned through self-supervision are more robust to adversarial attacks than those learned through supervised training. Then, we present properties that arise for attacks in the latent space. Finally, we evaluate whether three well-known defense strategies can increase adversarial robustness in downstream tasks when only the classification head is fine-tuned, so that robustness remains attainable even with limited compute resources. These defense strategies are Adversarial Training, Ensemble Adversarial Training, and Ensemble of Specialized Networks.
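To make the head-only setting concrete, the following is a minimal sketch of adversarial training in which the backbone stays frozen and only a linear classification head is updated. Everything in it is an illustrative assumption rather than the setup used in this work: the "backbone" is a fixed random projection standing in for a pretrained feature extractor (not DINO), the attack is single-step FGSM (the abstract does not name a specific attack), and the data, `eps`, and all function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for a frozen self-supervised backbone (NOT DINO):
# a fixed random projection followed by tanh. It is never updated.
W_backbone = rng.normal(size=(16, 8)) / np.sqrt(8)

def features(x):
    """Frozen feature extractor: (n, 8) inputs -> (n, 16) features."""
    return np.tanh(x @ W_backbone.T)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm(x, y, w, b, eps):
    """Single-step L-infinity attack on the input, through backbone and head."""
    f = features(x)
    p = sigmoid(f @ w + b)
    # Gradient of the binary cross-entropy w.r.t. the input (chain rule).
    grad_f = (p - y)[:, None] * w[None, :]         # dL/df, shape (n, 16)
    grad_x = (grad_f * (1.0 - f**2)) @ W_backbone  # dL/dx, shape (n, 8)
    return x + eps * np.sign(grad_x)

def train_head(x, y, eps, adversarial, steps=500, lr=0.2):
    """Gradient descent on the linear head (w, b) only."""
    w, b = np.zeros(16), 0.0
    for _ in range(steps):
        # Adversarial training: craft attacks against the current head.
        x_in = fgsm(x, y, w, b, eps) if adversarial else x
        f = features(x_in)
        p = sigmoid(f @ w + b)
        w -= lr * f.T @ (p - y) / len(y)
        b -= lr * np.mean(p - y)
    return w, b

def accuracy(x, y, w, b):
    pred = sigmoid(features(x) @ w + b) > 0.5
    return float(np.mean(pred == (y > 0.5)))

# Toy two-class data (illustrative only).
x = rng.normal(size=(400, 8))
y = (x[:, 0] + 0.5 * x[:, 1] > 0).astype(float)
eps = 0.1

w_std, b_std = train_head(x, y, eps, adversarial=False)
w_adv, b_adv = train_head(x, y, eps, adversarial=True)

clean_acc = accuracy(x, y, w_std, b_std)
robust_acc_std = accuracy(fgsm(x, y, w_std, b_std, eps), y, w_std, b_std)
robust_acc_adv = accuracy(fgsm(x, y, w_adv, b_adv, eps), y, w_adv, b_adv)
print(clean_acc, robust_acc_std, robust_acc_adv)
```

Because gradients are only applied to `w` and `b`, each training step costs little more than a forward and backward pass through the frozen extractor, which is the appeal of this setting under limited compute. Comparing `robust_acc_std` with `robust_acc_adv` gives a rough sense of whether the adversarially trained head helps on this toy problem; it says nothing about the results reported in the paper.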
