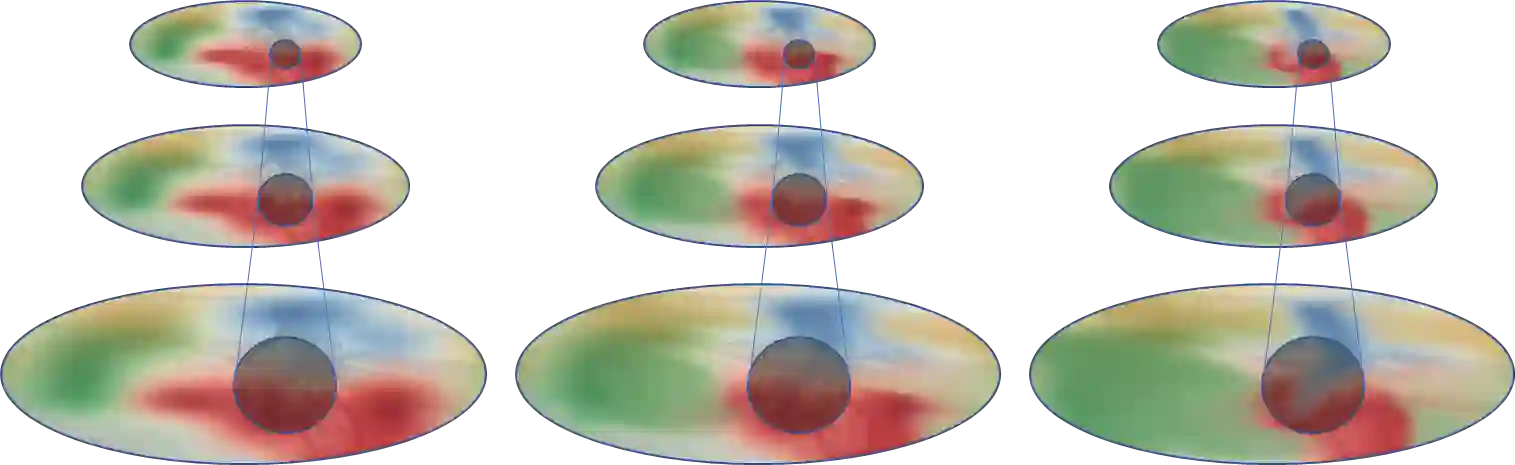

In this paper, we present RIGNet, an approach to Recurrent Iterative Gating. Its core elements are recurrent connections that control the flow of information in neural networks in a top-down manner; several variants of this core structure are considered. The iterative nature of the mechanism allows gating to spread across both spatial extent and feature space. It proves to be a powerful mechanism that is broadly compatible with common existing networks. Analysis shows how gating interacts with different network characteristics, and we also show that shallower networks with gating can be made to outperform much deeper networks that do not include RIGNet modules.
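To make the idea of top-down iterative gating concrete, the following is a minimal toy sketch in NumPy. It is not the RIGNet architecture: the two-layer layout, the sigmoid gate, the function name `gated_forward`, and the weight names `w_up` and `w_gate` are all assumptions made for illustration. It only shows the general pattern of a higher layer producing a multiplicative gate that modulates the lower layer's input on the next iteration.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gated_forward(x, w_up, w_gate, n_iters=3):
    """Toy top-down gating loop (illustrative only, not the paper's model).

    x:      (d_in,) input features
    w_up:   (d_hid, d_in) feedforward weights
    w_gate: (d_in, d_hid) hypothetical feedback weights that produce a gate
    """
    assert n_iters >= 1, "at least one feedforward pass is required"
    gate = np.ones_like(x)              # first pass is ungated
    for _ in range(n_iters):
        h = np.tanh(w_up @ (gate * x))  # feedforward pass on the gated input
        gate = sigmoid(w_gate @ h)      # top-down signal sets the next gate
    return h, gate

# Random weights stand in for learned parameters.
rng = np.random.default_rng(0)
x = rng.normal(size=8)
w_up = rng.normal(size=(4, 8))
w_gate = rng.normal(size=(8, 4))
h, gate = gated_forward(x, w_up, w_gate)
```

Each pass through the loop recomputes the hidden features from an input that the previous pass has already modulated, which is the sense in which the gating here is both recurrent and iterative.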
