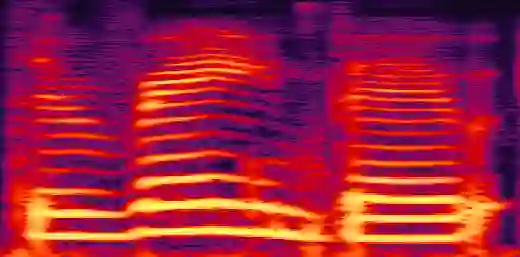

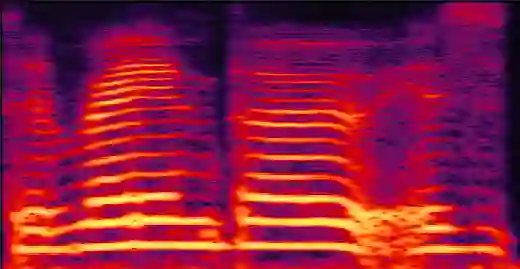

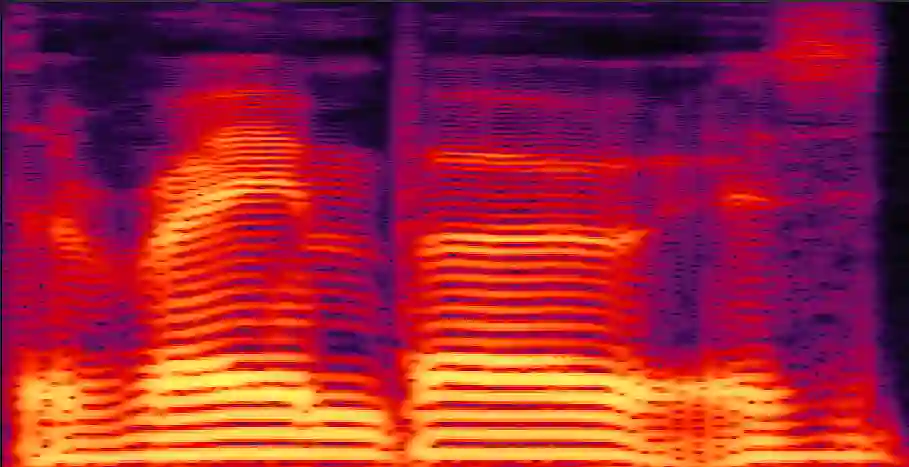

We present an unsupervised, non-parallel, many-to-many voice conversion (VC) method based on a generative adversarial network (GAN) called StarGAN v2. By combining an adversarial source classifier loss with a perceptual loss, our model significantly outperforms previous VC models. Although it is trained on only 20 English speakers, it generalizes to a variety of voice conversion tasks, such as any-to-many, cross-lingual, and singing voice conversion. Using a style encoder, our framework can also convert plain read speech into stylistic speech, such as emotional and falsetto speech. Subjective and objective evaluations on a non-parallel many-to-many voice conversion task show that our model produces natural-sounding voices, close in quality to state-of-the-art text-to-speech (TTS) based voice conversion methods, without requiring text labels. Moreover, our model is fully convolutional and, when paired with a faster-than-real-time vocoder such as Parallel WaveGAN, can perform real-time voice conversion.
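The abstract names the adversarial source classifier loss but does not define it. The sketch below follows our reading of the StarGANv2-VC formulation. It is an assumption, since this section does not spell it out. A source classifier C looks at a converted sample G(X, s) and is trained with cross-entropy to recover the *source* speaker. The generator G is trained with cross-entropy toward the *target* speaker, so it is pushed to remove any trace of the source voice. The function names are hypothetical, and the code works on precomputed logits only. It has no networks and no training loop.

```python
import math
from typing import Sequence


def log_softmax(logits: Sequence[float]) -> list[float]:
    """Numerically stable log-softmax over one vector of class (speaker) logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def cross_entropy(logits: Sequence[float], label: int) -> float:
    """Cross-entropy of one example against an integer speaker label."""
    return -log_softmax(logits)[label]


def classifier_loss(batch_logits: Sequence[Sequence[float]],
                    source_labels: Sequence[int]) -> float:
    """Hypothetical loss for the source classifier C on converted samples:
    C is trained to identify the speaker each sample was converted FROM."""
    losses = [cross_entropy(l, y) for l, y in zip(batch_logits, source_labels)]
    return sum(losses) / len(losses)


def generator_adv_cls_loss(batch_logits: Sequence[Sequence[float]],
                           target_labels: Sequence[int]) -> float:
    """Hypothetical adversarial term for the generator G: the loss is low when
    C assigns the converted sample to the TARGET speaker."""
    losses = [cross_entropy(l, y) for l, y in zip(batch_logits, target_labels)]
    return sum(losses) / len(losses)


# Example: 3 speakers, 2 converted samples. C still clearly "hears" the
# source speakers (0 and 1), although both samples were converted to speaker 2.
logits = [[3.0, 0.0, 0.0], [0.0, 3.0, 0.0]]
c_loss = classifier_loss(logits, source_labels=[0, 1])
g_loss = generator_adv_cls_loss(logits, target_labels=[2, 2])
print(f"classifier loss {c_loss:.3f}, generator loss {g_loss:.3f}")
```

In this example C succeeds, so its loss is small and the generator's loss is large. Alternating updates of C and G pull these two terms in opposite directions, which is the adversarial part of the objective.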
