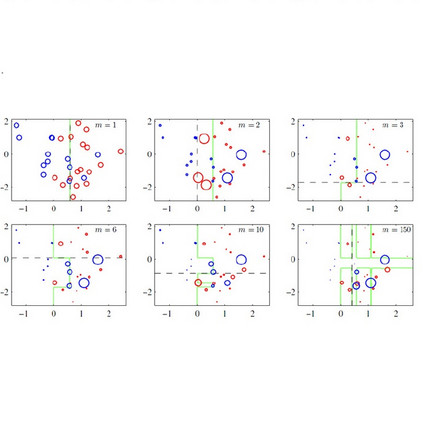

Most machine learning (ML) algorithms have several stochastic elements, and their performance is affected by these sources of randomness. This paper uses an empirical study to systematically examine the effects of two sources: randomness in model training and randomness in the partitioning of a dataset into training and test subsets. We quantify and compare the magnitude of the variation in predictive performance for three ML algorithms: Random Forests (RFs), Gradient Boosting Machines (GBMs), and Feedforward Neural Networks (FFNNs). Randomness in model training causes larger variation for FFNNs than for the tree-based methods. This is to be expected, as FFNNs have more stochastic elements in their model initialization and training. We also found that random splitting of datasets leads to higher variation than the inherent randomness from model training. The variation from data splitting can be a major issue if the original dataset has considerable heterogeneity.

Keywords: Model Training, Reproducibility, Variation
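The comparison described in the abstract can be illustrated with a minimal sketch: hold the train/test partition fixed while varying the training seed, then hold the training seed fixed while varying the partition, and compare the spread of test accuracy. Everything below is an assumption made for illustration and not the paper's setup: the synthetic dataset, the toy bagged ensemble of decision stumps (a stand-in for RFs, GBMs, or FFNNs), and the hypothetical helper names `make_data`, `split`, `train_ensemble`, `accuracy`, and `run`.

```python
import random
import statistics

def make_data(n=300, seed=0):
    """Synthetic binary data (illustrative only): label depends on x0 + x1 plus noise."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = [rng.gauss(0, 1), rng.gauss(0, 1)]
        y = 1 if x[0] + x[1] + rng.gauss(0, 0.5) > 0 else 0
        data.append((x, y))
    return data

def split(data, split_seed, test_frac=0.3):
    """Random train/test partition controlled only by split_seed."""
    rng = random.Random(split_seed)
    shuffled = data[:]
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_frac)
    return shuffled[n_test:], shuffled[:n_test]

def train_ensemble(train, model_seed, n_stumps=15):
    """Toy bagged stumps; all training randomness comes from model_seed."""
    rng = random.Random(model_seed)
    stumps = []
    for _ in range(n_stumps):
        boot = [rng.choice(train) for _ in train]    # bootstrap resample
        feature = rng.randrange(2)                    # random feature choice
        candidates = [x[feature] for x, _ in rng.sample(boot, 10)]
        # Keep the candidate threshold with the best accuracy on the resample.
        thr = max(candidates,
                  key=lambda t: sum((x[feature] > t) == (y == 1) for x, y in boot))
        stumps.append((feature, thr))
    return stumps

def accuracy(stumps, test):
    """Majority vote over stumps, scored on the test subset."""
    correct = 0
    for x, y in test:
        votes = sum(x[f] > t for f, t in stumps)
        pred = 1 if 2 * votes > len(stumps) else 0
        correct += pred == y
    return correct / len(test)

def run(data, split_seed, model_seed):
    train, test = split(data, split_seed)
    return accuracy(train_ensemble(train, model_seed), test)

data = make_data()
n_repeats = 20
# Source 1: vary only the training seed; the partition stays fixed.
acc_training = [run(data, split_seed=0, model_seed=s) for s in range(n_repeats)]
# Source 2: vary only the split seed; the training seed stays fixed.
acc_split = [run(data, split_seed=s, model_seed=0) for s in range(n_repeats)]

print("std from training randomness:", statistics.stdev(acc_training))
print("std from split randomness:   ", statistics.stdev(acc_split))
```

Separating the two seeds is the point of the design: each list isolates one source of randomness, so the two standard deviations can be compared directly. Which one comes out larger on this toy data says nothing about the paper's findings.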
