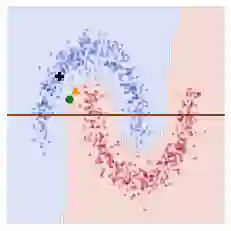

In the field of eXplainable Artificial Intelligence (XAI), post-hoc interpretability methods aim to explain the predictions of a trained decision model to a user. Integrating prior knowledge into such methods aims to improve the understandability of explanations and to allow for personalised explanations adapted to each user. In this paper, we propose defining a cost function that explicitly integrates prior knowledge into the interpretability objectives: we present a general framework for the optimization problem of post-hoc interpretability methods, and show that user knowledge can thus be integrated into any method by adding a compatibility term to the cost function. We instantiate the proposed formalization in the case of counterfactual explanations and propose a new interpretability method, called Knowledge Integration in Counterfactual Explanation (KICE), to optimize it. The paper presents an experimental study on several benchmark data sets that characterizes the counterfactual instances generated by KICE, compared with reference methods.
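
The abstract describes the optimization problem only in words. As an illustration, and assuming a simple generic form rather than the paper's actual formalization, a counterfactual objective with an added compatibility term can be written as cost(e) = d(x, e) + λ · Ω(e; K), minimized over candidates e whose predicted class differs from that of the instance x. The sketch below assumes an L1 proximity term for d, a hypothetical user knowledge K given as a set of feature indices the user considers fixed, a penalty Ω that sums the changes made to those features, and a naive random search. It is not KICE, whose optimization procedure is not described here; all function names are hypothetical.

```python
import random
from typing import Callable, Optional, Sequence, Set, Tuple


def proximity(x: Sequence[float], e: Sequence[float]) -> float:
    """L1 distance between the instance x and a candidate e (assumed proximity term)."""
    return sum(abs(ei - xi) for xi, ei in zip(x, e))


def incompatibility(x: Sequence[float], e: Sequence[float], frozen: Set[int]) -> float:
    """Hypothetical compatibility term: total change applied to features marked as fixed."""
    return sum(abs(e[i] - x[i]) for i in frozen)


def cost(x: Sequence[float], e: Sequence[float], frozen: Set[int], lam: float) -> float:
    """Interpretability objective plus lam times the knowledge-compatibility penalty."""
    return proximity(x, e) + lam * incompatibility(x, e, frozen)


def counterfactual_search(
    predict: Callable[[Sequence[float]], int],
    x: Sequence[float],
    frozen: Set[int],
    lam: float = 10.0,
    n_samples: int = 5000,
    radius: float = 2.0,
    seed: int = 0,
) -> Tuple[Optional[list], float]:
    """Naive random search: keep the lowest-cost sample whose predicted class differs from x's."""
    rng = random.Random(seed)
    original_class = predict(x)
    best, best_cost = None, float("inf")
    for _ in range(n_samples):
        # Perturb every feature uniformly within [-radius, radius].
        e = [xi + rng.uniform(-radius, radius) for xi in x]
        if predict(e) == original_class:
            continue  # not a counterfactual: the prediction did not change
        c = cost(x, e, frozen, lam)
        if c < best_cost:
            best, best_cost = e, c
    return best, best_cost


if __name__ == "__main__":
    # Toy classifier (hypothetical): class 1 when the two features sum above 1.
    predict = lambda v: int(v[0] + v[1] > 1.0)
    x = [0.2, 0.3]
    # Assumed user knowledge: feature 1 should not be changed.
    e, c = counterfactual_search(predict, x, frozen={1})
    print("counterfactual:", e, "cost:", round(c, 3))
```

Raising `lam` pushes the search toward candidates that leave the frozen feature nearly untouched, while `lam=0` reduces to a plain proximity-based counterfactual search. Random search is used only for brevity; a gradient-based or combinatorial optimizer could minimize the same cost.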
