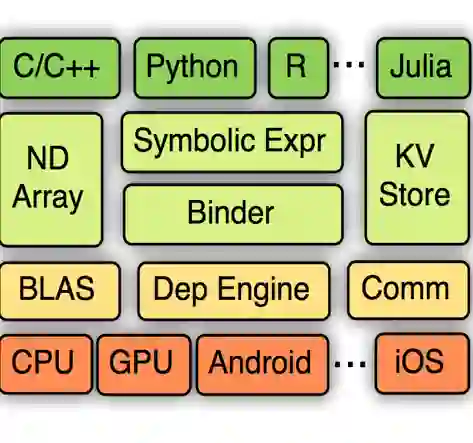

In recent years, neural networks have been widely deployed for computer vision tasks, particularly visual classification, where new algorithms have been reported to match or even surpass human performance. However, recent studies have shown that these networks are vulnerable to adversarial examples: small and often imperceptible perturbations to the input images are sufficient to fool even the most powerful neural networks. *Advbox* is a toolbox for generating adversarial examples that fool neural networks built with PaddlePaddle, PyTorch, Caffe2, MXNet, Keras, and TensorFlow, and for benchmarking the robustness of machine learning models. Compared with previous work, our platform supports black-box attacks on Machine-Learning-as-a-Service, as well as additional attack scenarios such as Face Recognition Attack, Stealth T-shirt, and DeepFake Face Detect. The code is licensed under the Apache License 2.0 and is openly available at https://github.com/advboxes/AdvBox. Advbox now supports Python 3.
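To make the claim that small perturbations suffice more concrete, here is a minimal, framework-free sketch of the Fast Gradient Sign Method (FGSM), a classic gradient-based attack, applied to a hypothetical toy logistic-regression model. This is not Advbox's API: the model, its weights, the input, and `eps = 0.02` are made-up values chosen for illustration, and the gradient is written in closed form rather than obtained from a deep learning framework.

```python
import math

# Hypothetical toy model (not Advbox's API): logistic regression
# p(y=1 | x) = sigmoid(w . x + b). Real attacks get gradients via autodiff.


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w: list[float], b: float, x: list[float]) -> float:
    """Probability of class 1 under the toy logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(w: list[float], b: float, x: list[float], y: int, eps: float,
         lo: float = 0.0, hi: float = 1.0) -> list[float]:
    """Fast Gradient Sign Method: move every feature by eps in the direction
    that increases the cross-entropy loss for the true label y (0 or 1),
    then clip the result to the valid input range [lo, hi]."""
    p = predict_proba(w, b, x)
    # For logistic regression, d(loss)/d(x_i) = (p - y) * w_i.
    grad = [(p - y) * wi for wi in w]
    return [min(max(xi + eps * sign(gi), lo), hi) for xi, gi in zip(x, grad)]


# Hypothetical example: 1000 "pixels" in [0, 1], weights alternating +/-0.1.
n = 1000
w = [0.1 if i % 2 == 0 else -0.1 for i in range(n)]
b = 1.0
x = [0.5] * n
x_adv = fgsm(w, b, x, y=1, eps=0.02)

print("clean p(y=1):", round(predict_proba(w, b, x), 3))
print("adversarial p(y=1):", round(predict_proba(w, b, x_adv), 3))
print("max per-pixel change:", max(abs(a - c) for a, c in zip(x_adv, x)))
```

Each pixel moves by at most 0.02, yet because the model is linear the logit shifts by `eps * sum(|w_i|) = 0.02 * 100 = 2`, which is enough to flip the prediction from class 1 to class 0. Many tiny, individually negligible changes add up.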
