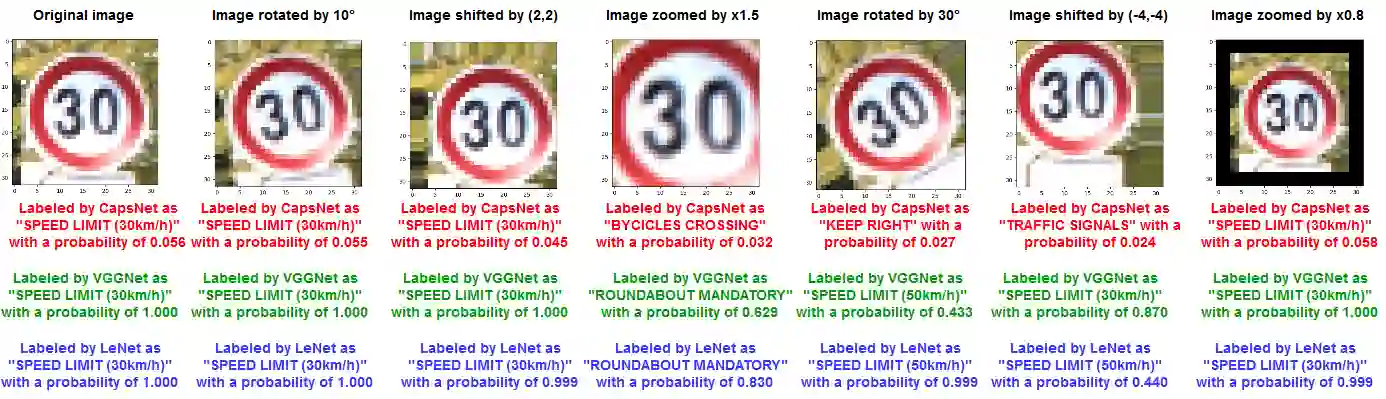

Capsule Networks preserve the hierarchical spatial relationships between objects, and thereby have the potential to surpass traditional Convolutional Neural Networks (CNNs) on tasks such as image classification. A large body of work has explored adversarial examples for CNNs, but their effectiveness against Capsule Networks has not yet been well studied. In this work, we analyze the vulnerability of Capsule Networks to adversarial attacks. Such adversarial perturbations, added to the test inputs, are small and imperceptible to humans but can fool the network into a misprediction. We propose a greedy algorithm that automatically generates targeted, imperceptible adversarial examples in a black-box attack scenario. We show that such attacks, when applied to the German Traffic Sign Recognition Benchmark (GTSRB), mislead Capsule Networks. Moreover, we apply the same adversarial attacks to a 5-layer CNN and a 9-layer CNN and compare the outcomes with those of the Capsule Networks to study the differences in their behavior.
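The abstract does not spell out the greedy procedure. As a rough illustration only, the Python sketch below shows one generic way a greedy, query-only (black-box) targeted attack can work. It is not the authors' algorithm. At each step it tries nudging every pixel up or down, keeps the single change that most shrinks the gap between the strongest non-target class and the target class, and stops once the target class wins. Assumptions: the model exposes only class probabilities, perturbations are ±`step` per pixel, and imperceptibility is approximated by a plain L2 budget (`max_l2`). The names `greedy_targeted_attack`, `target_gap`, and `toy_model` are hypothetical, and `toy_model` is a tiny linear-softmax classifier standing in for a Capsule Network or CNN.

```python
import math
from typing import Callable, List, Sequence, Tuple

# Hypothetical black-box interface: the attacker can only query class probabilities.
Model = Callable[[Sequence[float]], List[float]]


def l2_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance, used here as a simple stand-in imperceptibility budget."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def target_gap(probs: Sequence[float], target: int) -> float:
    """Highest non-target probability minus the target probability (< 0 means success)."""
    best_other = max(p for i, p in enumerate(probs) if i != target)
    return best_other - probs[target]


def greedy_targeted_attack(
    model: Model,
    x: Sequence[float],
    target: int,
    step: float = 0.05,
    max_l2: float = 0.5,
    max_iters: int = 200,
) -> Tuple[List[float], bool]:
    """Greedy query-only targeted attack (generic sketch, not the paper's method)."""
    x_adv = list(x)
    gap = target_gap(model(x_adv), target)
    for _ in range(max_iters):
        if gap < 0:
            break  # the target class is now the top prediction
        best_gap, best_candidate = gap, None
        for i in range(len(x_adv)):
            for delta in (step, -step):
                candidate = list(x_adv)
                # Keep pixel values in the valid [0, 1] range.
                candidate[i] = min(1.0, max(0.0, candidate[i] + delta))
                if l2_distance(candidate, x) > max_l2:
                    continue  # too perceptible under the stand-in L2 budget
                cand_gap = target_gap(model(candidate), target)
                if cand_gap < best_gap:
                    best_gap, best_candidate = cand_gap, candidate
        if best_candidate is None:
            break  # no single-pixel change helps within the budget
        x_adv, gap = best_candidate, best_gap
    return x_adv, gap < 0


def toy_model(pixels: Sequence[float]) -> List[float]:
    """Hypothetical 3-class linear-softmax classifier standing in for a real network."""
    logits = [2.0 * pixels[0], 2.0 * pixels[1], 0.5]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


x = [0.6, 0.4]  # toy_model initially predicts class 0 for this input
x_adv, success = greedy_targeted_attack(toy_model, x, target=1)
probs = toy_model(x_adv)
print(success, max(range(3), key=probs.__getitem__), round(l2_distance(x_adv, x), 3))
```

An attack on GTSRB images would replace `toy_model` with queries to the trained network and would likely use a perceptibility measure tailored to images rather than a plain L2 norm. Trying every pixel at every step, as done here, costs two model queries per pixel per iteration, which becomes expensive for large inputs.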
