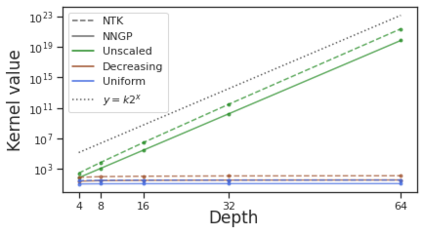

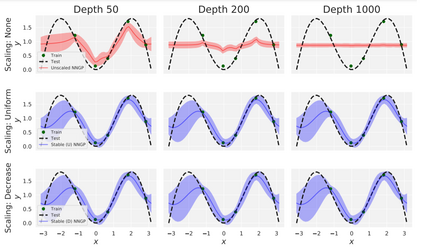

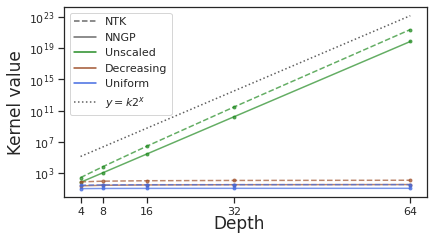

Deep ResNet architectures have achieved state-of-the-art performance on many tasks. While they solve the vanishing-gradient problem, they might suffer from exploding gradients as the depth becomes large (Yang et al. 2017). Moreover, recent results have shown that ResNets might lose expressivity as the depth goes to infinity (Yang et al. 2017, Hayou et al. 2019). To resolve these issues, we introduce a new class of ResNet architectures, called Stable ResNet, that stabilize the gradient while ensuring expressivity in the infinite-depth limit.
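As a rough illustration of the gradient growth described above, the following NumPy sketch backpropagates a random vector through a toy fully connected ReLU ResNet and reports how much its norm is amplified. The abstract does not state how Stable ResNet is constructed, so the `1/sqrt(depth)` scaling of the residual branch used here is an assumption chosen for illustration, and the function name `gradient_norm_ratio`, the He-style initialization, and the width are likewise hypothetical choices.

```python
import numpy as np


def gradient_norm_ratio(depth, width=128, scaled=False, seed=0):
    """Return ||grad at input|| / ||grad at output|| for a toy ReLU ResNet.

    Each layer computes y <- y + scale * W @ relu(y). With scaled=True the
    residual branch is multiplied by 1/sqrt(depth); this scaling is an
    assumption made for illustration, not taken from the abstract.
    """
    rng = np.random.default_rng(seed)
    scale = 1.0 / np.sqrt(depth) if scaled else 1.0

    # Forward pass: store the weights and ReLU masks needed for backprop.
    y = rng.standard_normal(width)
    weights, masks = [], []
    for _ in range(depth):
        w = rng.standard_normal((width, width)) * np.sqrt(2.0 / width)
        mask = (y > 0).astype(y.dtype)  # derivative of relu at y
        y = y + scale * (w @ (mask * y))
        weights.append(w)
        masks.append(mask)

    # Backward pass: each layer's Jacobian is I + scale * W @ diag(mask),
    # so the gradient update is g <- g + scale * mask * (W^T g).
    g = rng.standard_normal(width)
    g_out_norm = np.linalg.norm(g)
    for w, mask in zip(reversed(weights), reversed(masks)):
        g = g + scale * mask * (w.T @ g)
    return np.linalg.norm(g) / g_out_norm


if __name__ == "__main__":
    for depth in (10, 50):
        plain = gradient_norm_ratio(depth, scaled=False)
        stable = gradient_norm_ratio(depth, scaled=True)
        print(f"depth={depth}: unscaled ratio={plain:.3g}, scaled ratio={stable:.3g}")
```

In this toy setting the unscaled ratio should grow quickly with depth while the scaled ratio stays of order one. This only illustrates the gradient-stability claim under the stated assumptions and says nothing about expressivity.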
