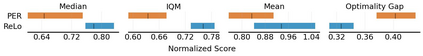

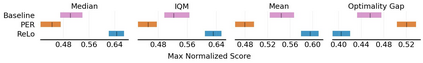

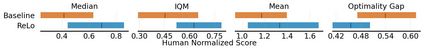

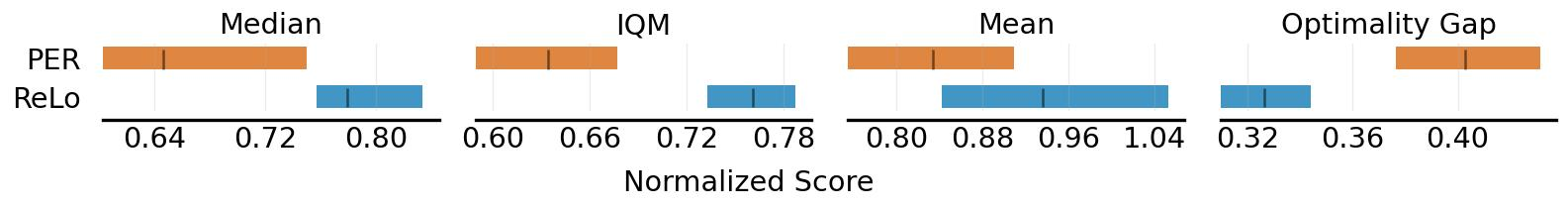

Most reinforcement learning algorithms use an experience replay buffer to train repeatedly on samples the agent has observed in the past. This prevents catastrophic forgetting; however, simply assigning equal importance to every sample is a naive strategy. In this paper, we propose a method to prioritize samples based on how much we can learn from them. We define the learn-ability of a sample as the steady decrease of the training loss associated with that sample over time. We develop an algorithm that prioritizes samples with high learn-ability while assigning lower priority to those that are hard to learn, typically because of noise or stochasticity. We show empirically that our method is more robust than random sampling and also outperforms prioritizing by the training loss alone, i.e., the temporal difference loss, as is done in vanilla prioritized experience replay.
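To make the idea of learn-ability concrete, the following is a minimal sketch of a replay buffer that samples in proportion to how much each sample's loss has been decreasing. It is not the algorithm from the paper, whose details the abstract does not give. The class name `LearnabilityReplayBuffer`, the exponential smoothing of the loss decrease, the floor `eps`, and the default priority for unseen samples are all assumptions made for illustration.

```python
import random


class LearnabilityReplayBuffer:
    """Toy buffer: priority follows the smoothed decrease of a sample's loss.

    Illustrative only; every hyperparameter here is a hypothetical choice.
    """

    def __init__(self, capacity, smoothing=0.5, eps=1e-3, new_priority=1.0, seed=None):
        self.capacity = capacity
        self.smoothing = smoothing        # assumed EMA factor for the loss decrease
        self.eps = eps                    # floor so no sample is starved completely
        self.new_priority = new_priority  # assumed priority for never-trained samples
        self.items = []                   # stored transitions
        self.last_loss = []               # most recent loss per slot (None if unseen)
        self.progress = []                # smoothed loss decrease per slot
        self.next_slot = 0
        self.rng = random.Random(seed)

    def add(self, transition):
        """Store a transition, overwriting the oldest slot once full."""
        if len(self.items) < self.capacity:
            self.items.append(transition)
            self.last_loss.append(None)
            self.progress.append(0.0)
        else:
            i = self.next_slot
            self.items[i] = transition
            self.last_loss[i] = None
            self.progress[i] = 0.0
        self.next_slot = (self.next_slot + 1) % self.capacity

    def update(self, index, loss):
        """Record a new loss (e.g. the TD loss) for a sample after training on it."""
        previous = self.last_loss[index]
        if previous is not None:
            decrease = previous - loss    # positive when the loss went down
            s = self.smoothing
            self.progress[index] = (1 - s) * self.progress[index] + s * decrease
        self.last_loss[index] = loss

    def priority(self, index):
        """Higher when the loss has been decreasing; noisy samples fall to eps."""
        if self.last_loss[index] is None:
            return self.new_priority
        return max(self.progress[index], 0.0) + self.eps

    def sample(self, batch_size):
        """Draw indices with probability proportional to priority."""
        weights = [self.priority(i) for i in range(len(self.items))]
        indices = self.rng.choices(range(len(self.items)), weights=weights, k=batch_size)
        return indices, [self.items[i] for i in indices]


buf = LearnabilityReplayBuffer(capacity=2, seed=0)
buf.add("learnable")
buf.add("noisy")
for loss in (1.0, 0.8, 0.6):        # loss falls steadily
    buf.update(0, loss)
for loss in (1.0, 1.2, 0.9, 1.3):   # loss fluctuates without improving
    buf.update(1, loss)
indices, batch = buf.sample(4)
```

In this sketch a sample whose loss is large but fluctuating ends up with only the floor priority `eps`, whereas prioritizing by the loss itself would keep selecting it. That is the contrast with vanilla prioritized experience replay that the abstract draws.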
