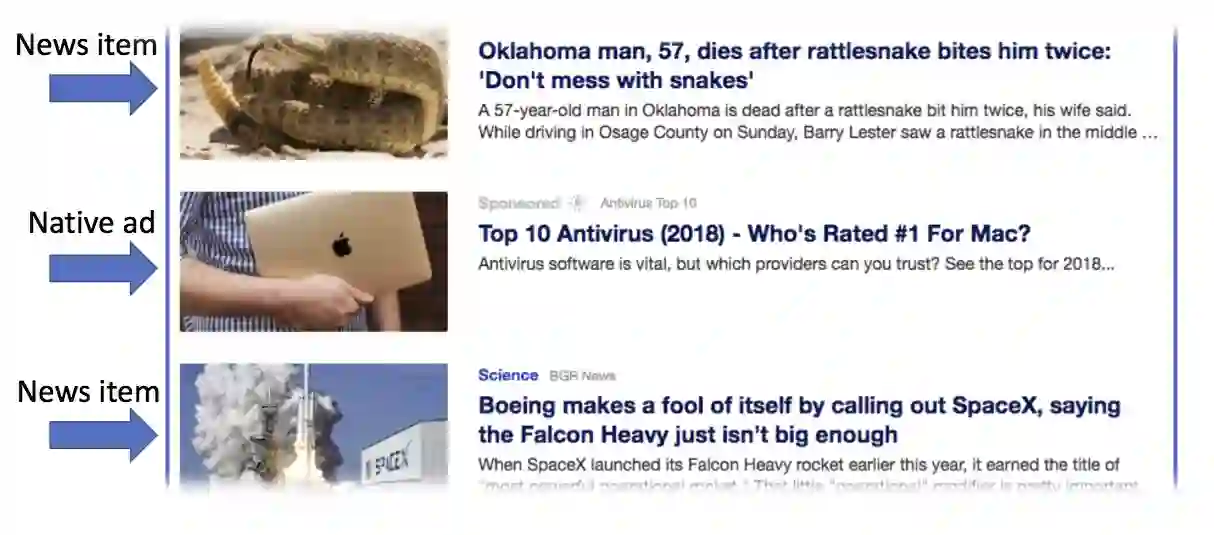

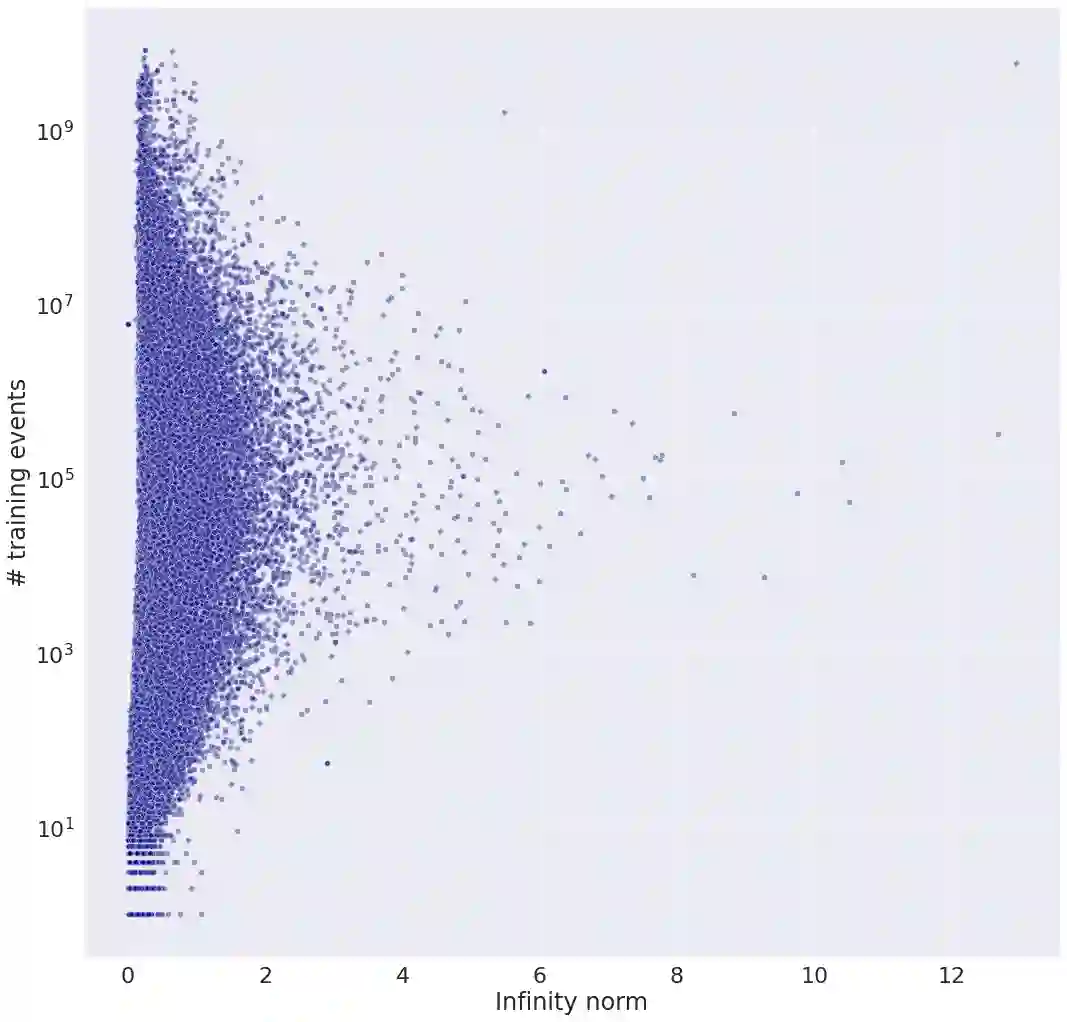

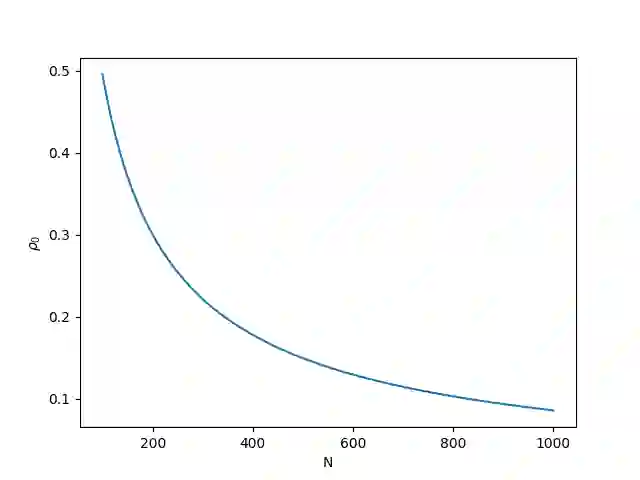

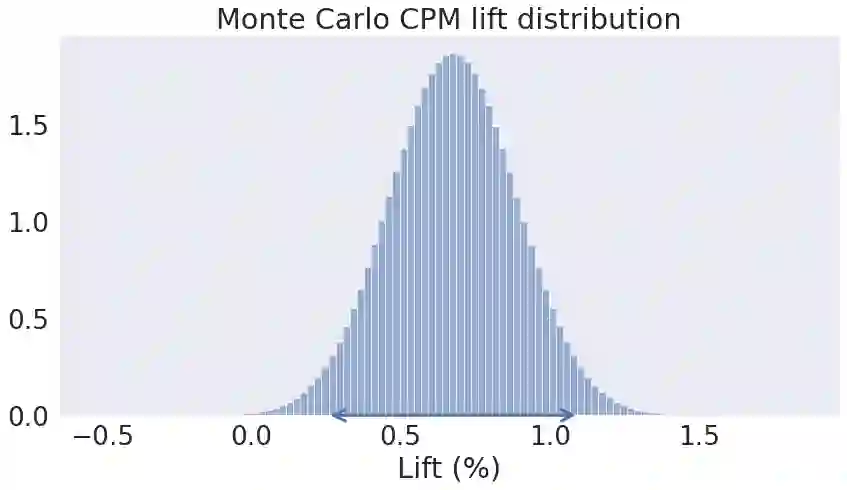

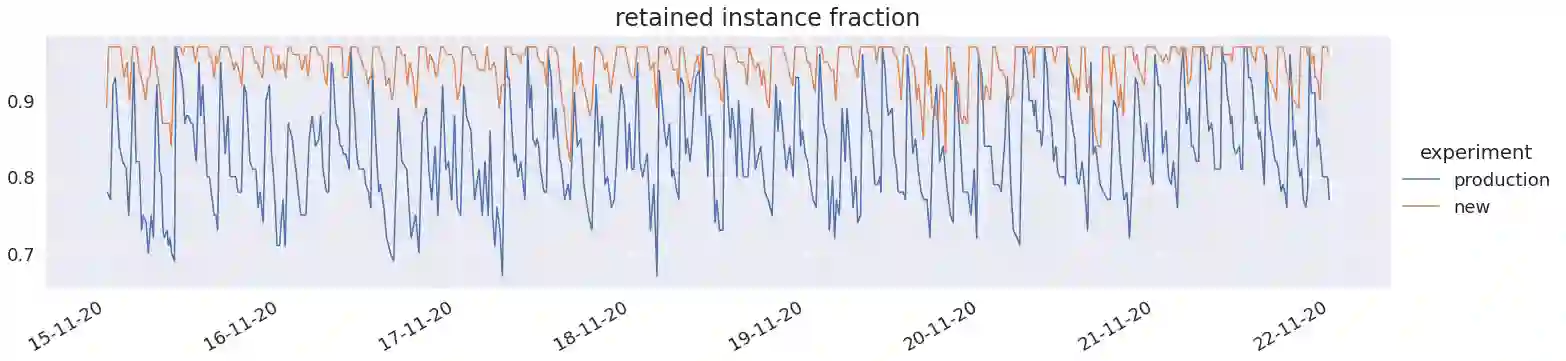

Real-world content recommendation marketplaces exhibit certain behaviors and are subject to constraints that are not always apparent in common static offline data sets. One example that is common in ad marketplaces is swift ad turnover: new ads are introduced and old ads disappear at high rates every day. Another example is ad discontinuity, where existing ads may appear and disappear from the market for non-negligible amounts of time for a variety of reasons (e.g., depletion of budget, pausing by the advertiser, or flagging by the system). These behaviors sometimes cause the model's loss surface to change dramatically over short periods of time. To address these behaviors, fresh models are highly important, and to achieve this (and for several other reasons) incremental training on small chunks of past events is often employed. These behaviors and algorithmic optimizations occasionally cause model parameters to grow uncontrollably large, or *diverge*. In this work, we present a systematic method to prevent model parameters from diverging by imposing a carefully chosen set of constraints on the model's latent vectors. We then devise a method inspired by primal-dual optimization algorithms to fulfill these constraints in a manner that both aligns well with incremental model training and does not require any major modifications to the underlying model training algorithm. We analyze, demonstrate, and motivate our method on OFFSET, a collaborative filtering algorithm that drives Yahoo native advertising, one of VZM's largest and fastest-growing businesses, reaching a run-rate of many hundreds of millions USD per year. Finally, we conduct an online experiment that shows a substantial reduction in the number of diverging instances and a significant improvement to both user experience and revenue.
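The abstract does not spell out the constraints or the exact update rule, so the following is only a minimal sketch of the general idea, not the OFFSET implementation. It assumes a toy logistic model, a single hypothetical constraint `||w||^2 <= bound` on one latent vector, and a standard Lagrangian primal-dual scheme: an ordinary SGD step with one extra penalty term, followed by a projected ascent step on a dual variable. All names (`train`, `bound`, `dual_lr`, the toy events) are illustrative assumptions.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sq_norm(w):
    return sum(wi * wi for wi in w)

def train(events, dim, bound=None, steps=2000, lr=0.1, dual_lr=0.05):
    """SGD on logistic loss over a stream of (features, label) events.

    If `bound` is given, the (assumed) constraint ||w||^2 <= bound is
    handled through a dual variable `lam` instead of a hard projection.
    """
    w = [0.0] * dim
    lam = 0.0  # Lagrange multiplier; stays 0 when no bound is set
    for t in range(steps):
        x, y = events[t % len(events)]
        p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
        # Primal step: gradient of the logistic loss, (p - y) * x, plus
        # the gradient of lam * (||w||^2 - bound), which is 2 * lam * w.
        w = [wi - lr * ((p - y) * xi + 2.0 * lam * wi)
             for wi, xi in zip(w, x)]
        if bound is not None:
            # Dual step: raise lam while the constraint is violated,
            # lower it otherwise, and never let it go negative.
            violation = sq_norm(w) - bound
            lam = max(0.0, lam + dual_lr * violation)
    return w, lam

# Toy, perfectly separable events: without a constraint the logistic
# loss keeps pushing the weights outward as training continues.
events = [([1.0, 0.5], 1.0), ([-1.0, -0.5], 0.0)]

w_free, _ = train(events, dim=2)
w_con, lam_con = train(events, dim=2, bound=1.0)

print("unconstrained ||w||^2:", sq_norm(w_free))
print("constrained   ||w||^2:", sq_norm(w_con), "lambda:", lam_con)
```

In this sketch the primal step is a plain SGD update with one added term, which mirrors the abstract's point that the constraint handling should not require major changes to the underlying training algorithm. The dual variable is also a small piece of state that could be carried from one incremental training chunk to the next.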
