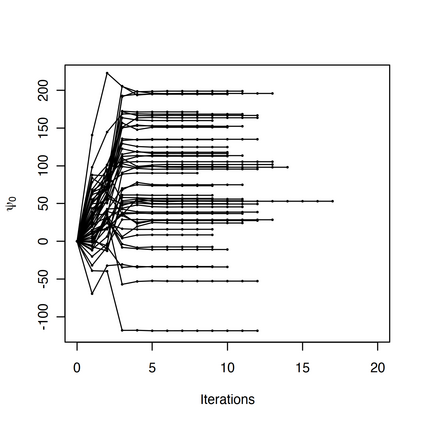

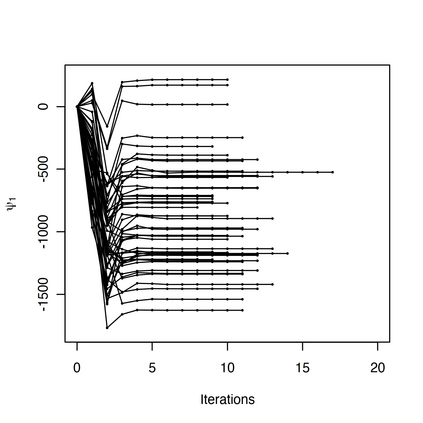

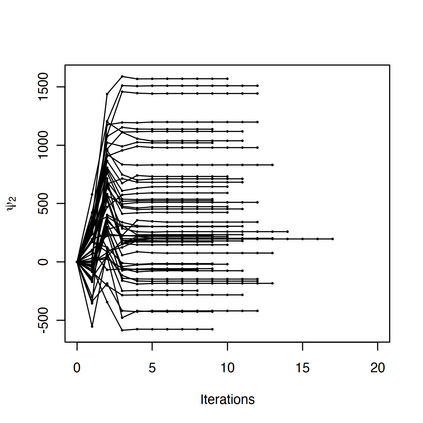

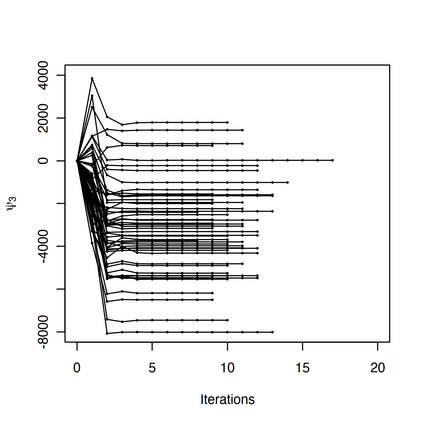

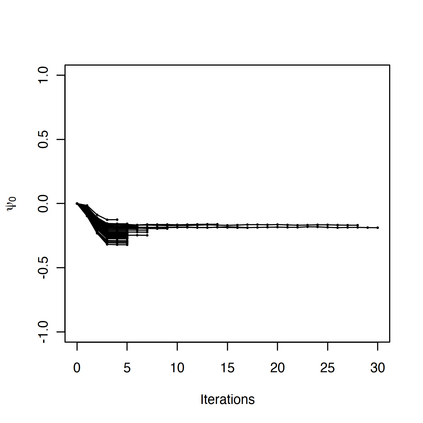

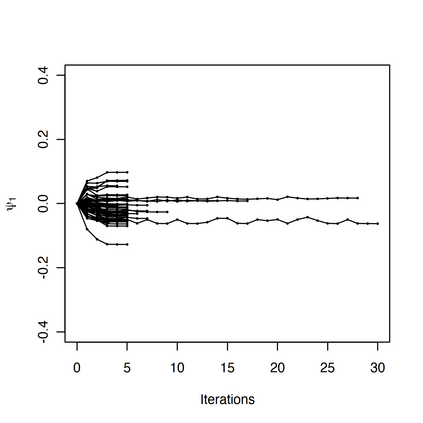

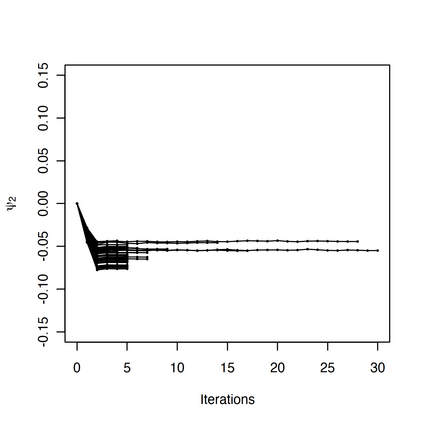

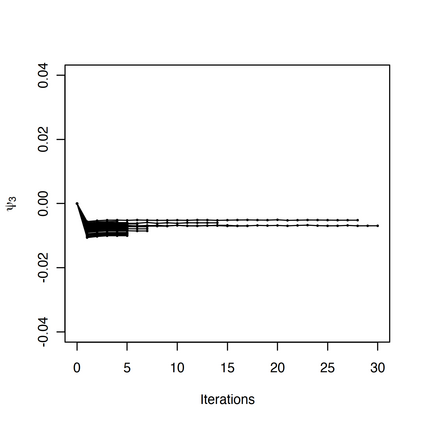

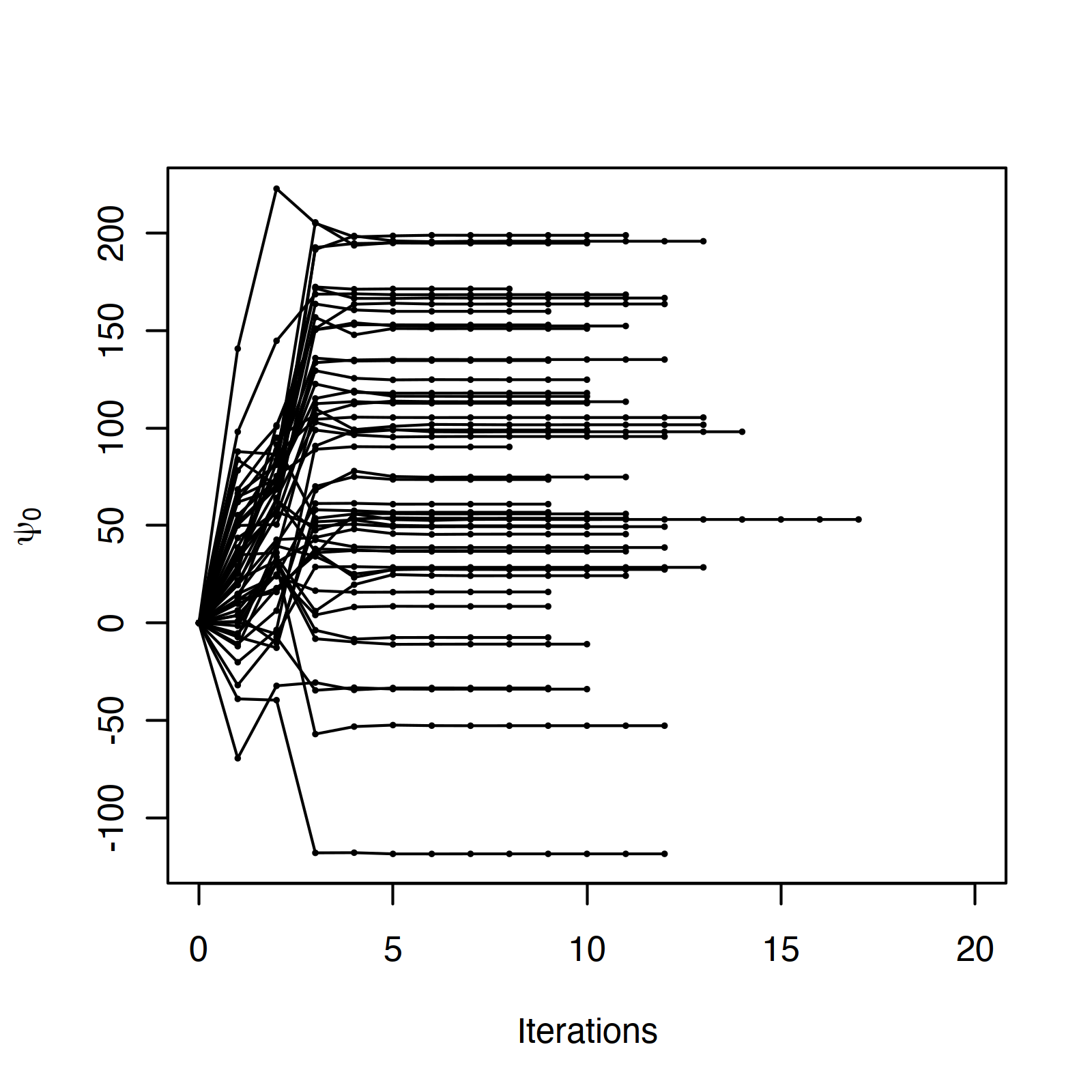

A dynamic treatment regimen (DTR) is a set of decision rules that personalize treatments for an individual using their medical history. The Q-learning based Q-shared algorithm has been used to develop DTRs whose decision rules are shared across multiple stages of intervention. We show that the existing Q-shared algorithm can fail to converge because of the linear models used in the Q-learning setup, and we identify the condition under which Q-shared fails. Leveraging properties of expansion-constrained ordinary least squares, we give a penalized Q-shared algorithm that not only converges in settings that violate the condition, but can also outperform the original Q-shared algorithm even when the condition is satisfied. We give evidence for the proposed method in a real-world application and several synthetic simulations.
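To make the idea of a shared-parameter Q-learning iteration concrete, here is a minimal two-stage sketch in Python. It is not the paper's algorithm. The feature map `features`, the function name `q_shared`, the binary actions coded as -1/+1, the synthetic data, and in particular the ridge penalty `lam` are all assumptions chosen for illustration. The abstract says only that the proposed method is penalized and builds on expansion-constrained ordinary least squares, so the ridge term is a stand-in for whatever penalty the authors actually use.

```python
import numpy as np

def features(state, action):
    # Hypothetical feature map shared by both stages: [1, s, a, s*a].
    return np.stack([np.ones_like(state), state, action, state * action], axis=-1)

def q_shared(s1, a1, y1, s2, a2, y2, lam=0.0, max_iter=200, tol=1e-8):
    """Fixed-point iteration for one parameter vector shared across two stages.

    lam is an assumed ridge penalty; lam=0 gives plain least squares.
    Returns (theta, iterations_used, converged_flag).
    """
    X1, X2 = features(s1, a1), features(s2, a2)
    X = np.vstack([X1, X2])  # stack both stages into one regression
    # Stage-2 features under each candidate action, for the max over actions.
    X2_plus = features(s2, np.ones_like(s2))
    X2_minus = features(s2, -np.ones_like(s2))
    gram = X.T @ X + lam * np.eye(X.shape[1])
    theta = np.zeros(X.shape[1])
    for k in range(max_iter):
        # Stage-1 pseudo-outcome: reward plus best stage-2 value under current theta.
        v2 = np.maximum(X2_plus @ theta, X2_minus @ theta)
        target = np.concatenate([y1 + v2, y2])
        theta_new = np.linalg.solve(gram, X.T @ target)
        if np.max(np.abs(theta_new - theta)) < tol:
            return theta_new, k + 1, True
        theta = theta_new
    return theta, max_iter, False

# Synthetic illustration only; none of these numbers come from the paper.
rng = np.random.default_rng(0)
n = 200
s1 = rng.uniform(-1.0, 1.0, n)
a1 = rng.choice([-1.0, 1.0], n)
y1 = np.zeros(n)
s2 = rng.uniform(-1.0, 1.0, n)
a2 = rng.choice([-1.0, 1.0], n)
y2 = 1.0 + 0.5 * s2 + a2 * (0.2 - 0.6 * s2) + 0.1 * rng.normal(size=n)

theta, n_iter, converged = q_shared(s1, a1, y1, s2, a2, y2, lam=1.0)
print(theta, n_iter, converged)
```

Because the stage-1 target depends on the current `theta`, each update is a least-squares fit to a moving target, and the loop terminates only if successive iterates settle. In this sketch a larger `lam` shrinks each update, which is one simple way to encourage the iteration to settle; whether that matches the paper's penalty is not something the abstract confirms.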