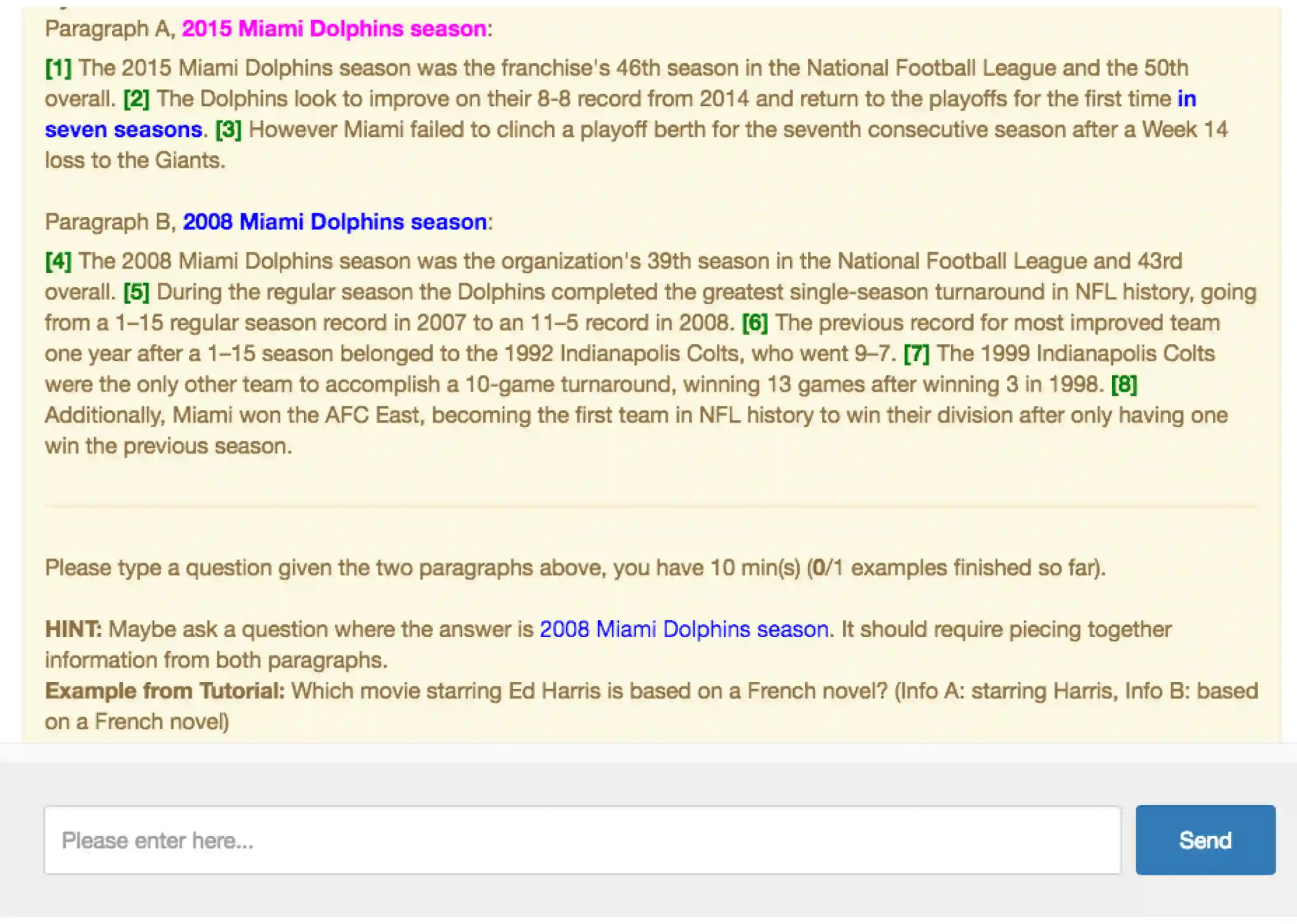

Existing question answering (QA) datasets fail to train QA systems to perform complex reasoning and provide explanations for answers. We introduce HotpotQA, a new dataset with 113k Wikipedia-based question-answer pairs with four key features: (1) the questions require finding and reasoning over multiple supporting documents to answer; (2) the questions are diverse and not constrained to any pre-existing knowledge bases or knowledge schemas; (3) we provide sentence-level supporting facts required for reasoning, allowing QA systems to reason with strong supervision and explain the predictions; (4) we offer a new type of factoid comparison questions to test QA systems' ability to extract relevant facts and perform necessary comparison. We show that HotpotQA is challenging for the latest QA systems, and the supporting facts enable models to improve performance and make explainable predictions.
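Feature (3) is the most concrete of the four: each question comes with supporting facts at the sentence level. The sketch below assumes the record layout of the released JSON files as we understand it: a `context` list of `[title, sentences]` paragraphs and a `supporting_facts` list of `[title, sentence_index]` pairs. The toy record and the helper names `supporting_sentences` and `sentence_labels` are hypothetical, and the text is invented rather than taken from the dataset. The sketch turns those pairs into per-sentence 0/1 targets, which is one way to use them for strong supervision, and resolves them back to text that can serve as an explanation.

```python
from typing import Dict, List, Tuple

# Assumed record layout (modeled on the released HotpotQA JSON as we understand it):
#   "context":          list of [paragraph_title, [sentence_0, sentence_1, ...]]
#   "supporting_facts": list of [paragraph_title, sentence_index]
# This toy comparison-type record is invented for illustration only.
example: Dict = {
    "question": "Which was founded first, Alpha Corp or Beta Ltd?",
    "answer": "Alpha Corp",
    "type": "comparison",
    "context": [
        ["Alpha Corp", ["Alpha Corp is a toy company.", "It was founded in 1901."]],
        ["Beta Ltd", ["Beta Ltd was founded in 1950.", "It makes toy boats."]],
    ],
    "supporting_facts": [["Alpha Corp", 1], ["Beta Ltd", 0]],
}


def supporting_sentences(record: Dict) -> List[str]:
    """Resolve each (title, sentence_index) supporting fact to its sentence text."""
    paragraphs = {title: sents for title, sents in record["context"]}
    return [paragraphs[title][idx] for title, idx in record["supporting_facts"]]


def sentence_labels(record: Dict) -> List[Tuple[str, int, str, int]]:
    """Label every context sentence 1 if it is a supporting fact, else 0."""
    gold = {(title, idx) for title, idx in record["supporting_facts"]}
    rows = []
    for title, sents in record["context"]:
        for idx, sent in enumerate(sents):
            rows.append((title, idx, sent, 1 if (title, idx) in gold else 0))
    return rows


print(supporting_sentences(example))
# → ['It was founded in 1901.', 'Beta Ltd was founded in 1950.']
print([row[3] for row in sentence_labels(example)])
# → [0, 1, 1, 0]
```

The two helpers cover both uses the abstract names: the 0/1 labels give a per-sentence training signal, while the resolved sentences are the evidence a model can point to when explaining its answer.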

翻译:现有的回答问题(QA)数据集未能培训质量评估系统来进行复杂的推理和解释答案。我们引入了热锅QA,这是一个新的数据集,配有113k维基百科的问答对齐,有四个关键特征:(1) 问题需要找到和推理多种辅助文件才能回答;(2) 问题多种多样,不局限于先前存在的任何知识基础或知识体系;(3) 我们提供推理所需的判决级支持事实,使质量评估系统能够在强有力的监督下解释预测;(4) 我们提供一种新的事实类比较问题,以测试质量评估系统提取相关事实和进行必要的比较的能力。我们表明,热锅QA对最新的质量保证系统提出了挑战,而支持事实使模型能够改进性能和作出可解释的预测。