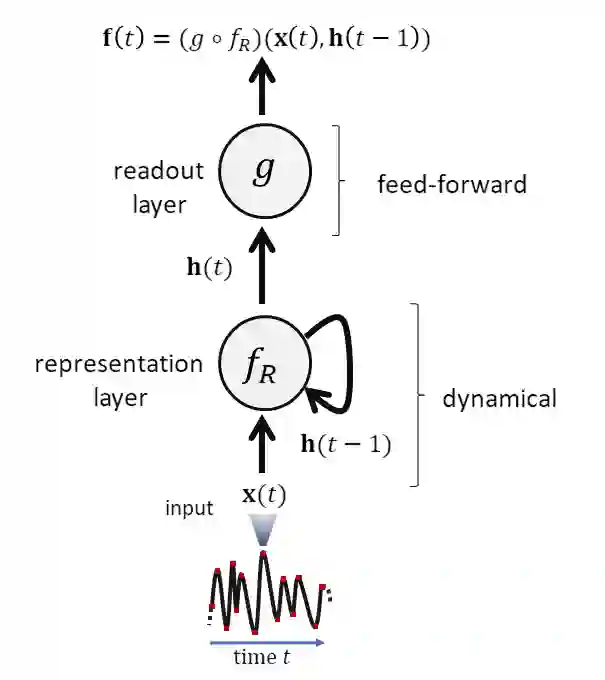

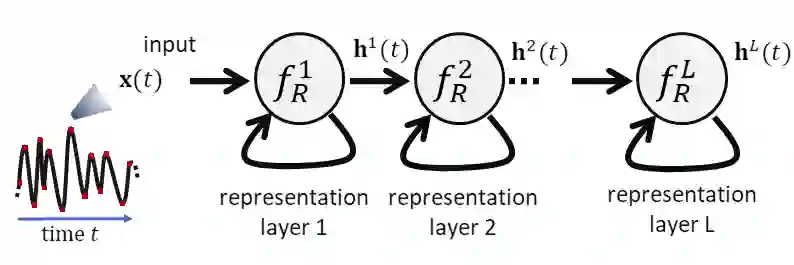

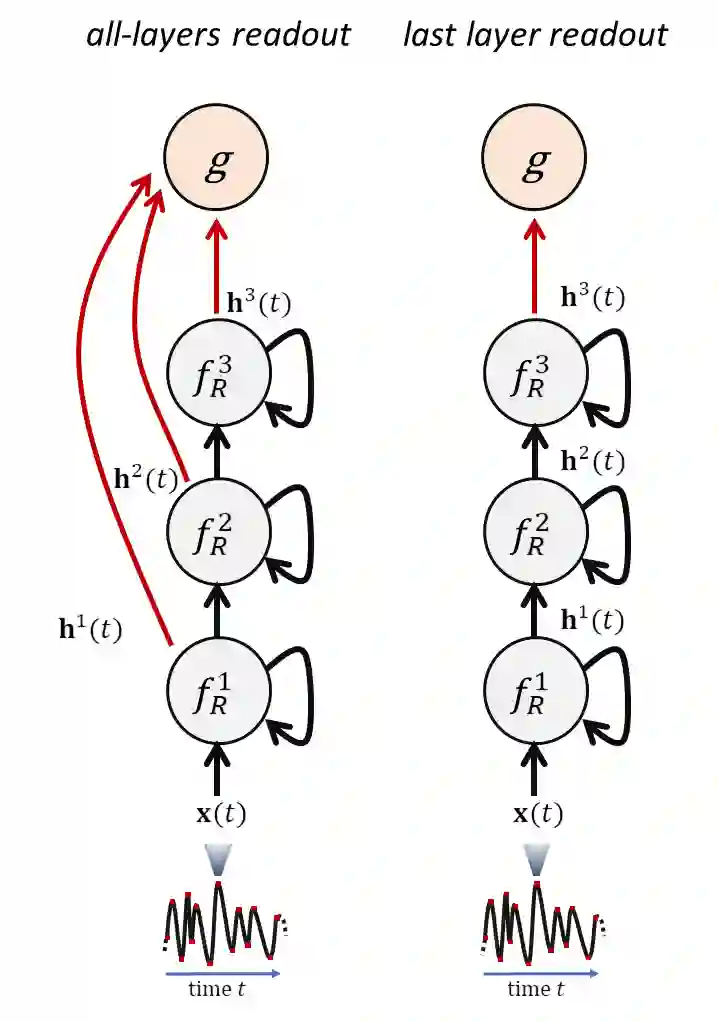

Randomized Neural Networks explore the behavior of neural systems in which the majority of connections are fixed, either stochastically or deterministically. Typical examples of such systems are multi-layered neural network architectures in which the connections to the hidden layer(s) are left untrained after initialization. Restricting the training algorithms to a reduced set of weights gives the class of Randomized Neural Networks a number of intriguing features. Among them, the extreme efficiency of the resulting learning processes is undoubtedly a striking advantage over fully trained architectures. Moreover, despite the simplifications involved, randomized neural systems possess remarkable properties both in practice, achieving state-of-the-art results in multiple domains, and in theory, making it possible to analyze intrinsic properties of neural architectures (e.g., before the hidden layers' connections are trained). In recent years, the study of Randomized Neural Networks has been extended towards deep architectures, opening new research directions for the design of effective yet extremely efficient deep learning models in vectorial as well as more complex data domains. This chapter surveys all the major aspects of the design and analysis of Randomized Neural Networks, together with some of the key results on their approximation capabilities. In particular, we first introduce the fundamentals of randomized neural models in the context of feed-forward networks (i.e., Random Vector Functional Link and equivalent models) and convolutional filters, before moving to recurrent systems (i.e., Reservoir Computing networks). For both, we focus specifically on recent results on deep randomized systems and, for recurrent models, on their application to structured domains.
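To make the idea of training only a reduced set of weights concrete, here is a minimal sketch of a Random Vector Functional Link (RVFL) style regressor. It assumes NumPy, a `tanh` activation, input-to-hidden weights and biases drawn uniformly from [-1, 1], and a closed-form ridge-regression readout; these choices and the names `rvfl_features`, `rvfl_fit` and `rvfl_predict` are hypothetical illustrations, not the chapter's own implementation. The hidden weights are sampled once and never updated; only the output weights `beta` are fitted, on the concatenation of the raw inputs (the direct "functional links"), the random hidden features, and a bias column.

```python
import numpy as np

# Hypothetical helper names; the tanh activation, the uniform [-1, 1] initialization
# and the ridge-regression readout are illustrative assumptions.


def rvfl_features(X, W, b):
    """Concatenate direct links (raw inputs), random hidden features and a bias column."""
    H = np.tanh(X @ W + b)            # fixed, untrained nonlinear random expansion
    ones = np.ones((X.shape[0], 1))   # constant column acting as the output bias
    return np.hstack([X, H, ones])


def rvfl_fit(X, Y, n_hidden=100, reg=1e-3, seed=0):
    """Sample the hidden layer once, then train only the linear readout."""
    rng = np.random.default_rng(seed)
    W = rng.uniform(-1.0, 1.0, size=(X.shape[1], n_hidden))  # input-to-hidden weights (never trained)
    b = rng.uniform(-1.0, 1.0, size=n_hidden)                 # hidden biases (never trained)
    D = rvfl_features(X, W, b)
    # Closed-form ridge regression: beta = (D^T D + reg * I)^{-1} D^T Y
    A = D.T @ D + reg * np.eye(D.shape[1])
    beta = np.linalg.solve(A, D.T @ Y)
    return W, b, beta


def rvfl_predict(model, X):
    """Apply the fixed random features and the trained readout."""
    W, b, beta = model
    return rvfl_features(X, W, b) @ beta


# Usage: fit a 1-D sine curve.
X = np.linspace(-3.0, 3.0, 200).reshape(-1, 1)
Y = np.sin(X)
model = rvfl_fit(X, Y, n_hidden=50)
mse = float(np.mean((rvfl_predict(model, X) - Y) ** 2))
print(f"training MSE: {mse:.2e}")
```

Since the only trained parameters come from a single linear solve over the (inputs + hidden units + 1) feature dimensions, fitting replaces iterative backpropagation through the hidden layer, which is the source of the efficiency advantage described above.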

翻译:随机随机神经网络 探索神经系统的行为, 大部分连接都是固定的神经系统, 无论是以随机随机还是确定式的方式。 这种系统的典型例子包括多层神经网络结构, 与隐藏层的连接在初始化后没有经过任何培训。 将培训算法限制在数量减少的一组重量上操作, 其内在特征是随机神经网络的等级, 具有一些令人感兴趣的特点。 其中, 由此形成的学习过程的极端效率, 无疑是充分培训的架构的惊人优势。 此外, 尽管涉及到简化, 随机的经常神经系统在实践上都具有显著的特性, 在多个领域实现最先进的结果, 在理论上, 能够分析神经结构的内在特性( 例如, 在培训隐藏层连接之前) 。 近几年来, 随机化神经网络的研究已经扩展到了深层结构, 开启了新的研究方向, 在矢量的、 以及一些更复杂的数据域域的内, 有效的、 快速的不断深层次学习模式的设计方向。 本章中, 将所有主要的域域域,, 引入了我们的主要域域网 设计结果分析,,,, 具体地, 进入了它们的关键,, 以及 方向,, 的 直系 直系, 直系的, 直系 直系的 直系 直系的 直系, 直系 直系 直系 直系 。