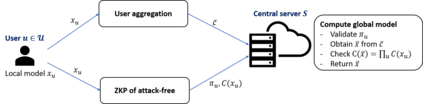

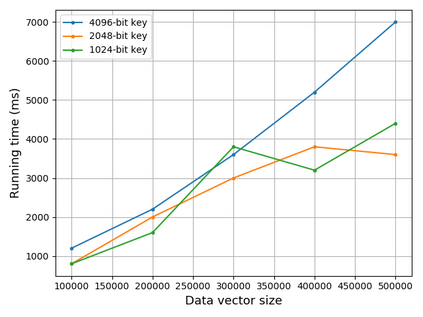

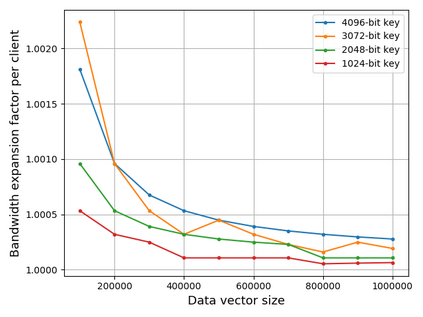

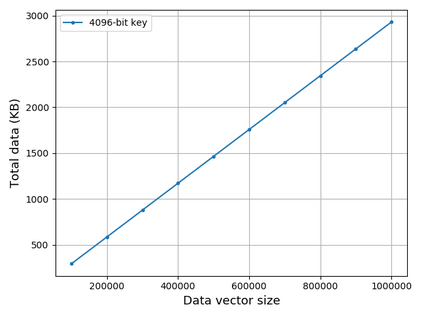

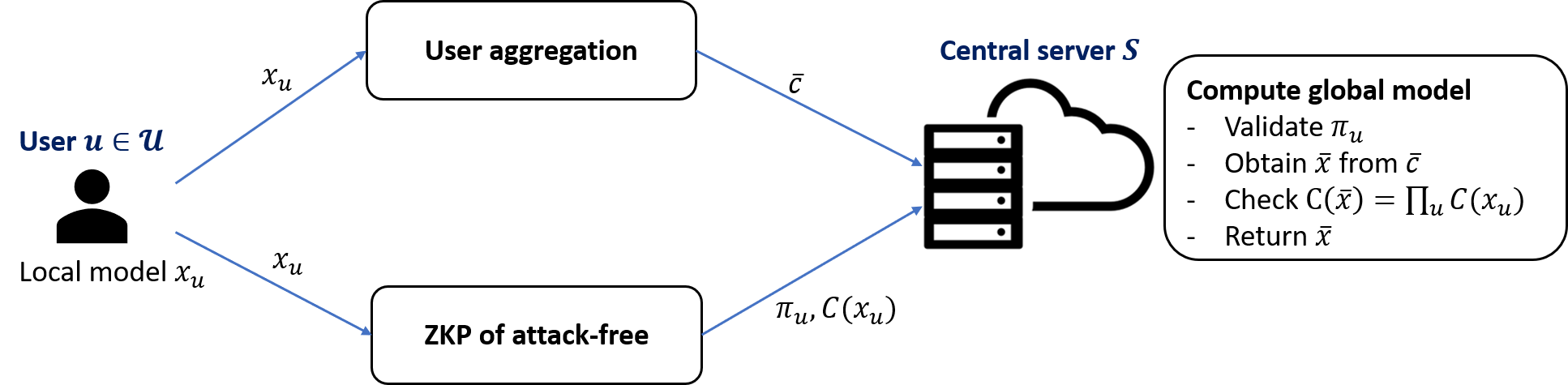

Federated learning is known to be vulnerable to both security and privacy attacks. Existing research has focused either on preventing poisoning attacks by users or on keeping users' model updates private. Integrating these two lines of research remains a crucial challenge, however, because their threat models often conflict. In this work, we develop a framework that combines secure aggregation with defense mechanisms against poisoning attacks by users while maintaining the privacy guarantees of each. We leverage a zero-knowledge proof protocol to let users run the defense mechanisms locally and prove the outcome to the central server without revealing any information about their model updates. Furthermore, we propose a new secure aggregation protocol for federated learning, based on homomorphic encryption, that is robust against malicious users. Our framework enables the central server to identify poisoned model updates without violating the privacy guarantees of secure aggregation. Finally, we analyze the computation and communication complexity of our proposed solution and benchmark its performance.
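To make the idea of secure aggregation with homomorphic encryption concrete, the following is a minimal sketch of how a server could sum encrypted model updates without seeing any individual update. It is not the protocol proposed in this work: the abstract does not name a cryptosystem, so a toy Paillier scheme is assumed here, and the primes, the fixed-point `SCALE`, and the function names `encrypt`, `decrypt`, and `aggregate` are all illustrative choices. The sketch also leaves out the zero-knowledge proofs and the robustness against malicious users, and it does not say who holds the decryption key.

```python
import math
import secrets

# Toy Paillier parameters (assumption): two fixed Mersenne primes.
# These are far too small and too structured to be secure.
P, Q = 2**31 - 1, 2**61 - 1
N = P * Q
N2 = N * N
PHI = (P - 1) * (Q - 1)   # private key
MU = pow(PHI, -1, N)      # modular inverse of PHI mod N (Python 3.8+)
SCALE = 10**6             # fixed-point scale for real-valued weights (assumption)


def encrypt(value: float) -> int:
    """Encrypt one model coordinate under the public key N."""
    m = round(value * SCALE) % N          # negatives wrap around modulo N
    while True:
        r = secrets.randbelow(N - 1) + 1  # random blinding factor in [1, N)
        if math.gcd(r, N) == 1:
            break
    # With generator g = N + 1, g^m mod N^2 equals 1 + m*N.
    return (1 + m * N) % N2 * pow(r, N, N2) % N2


def decrypt(ciphertext: int) -> float:
    """Decrypt with the private key and undo the fixed-point encoding."""
    m = (pow(ciphertext, PHI, N2) - 1) // N * MU % N
    if m > N // 2:                        # map the upper half back to negatives
        m -= N
    return m / SCALE


def aggregate(encrypted_updates: list[list[int]]) -> list[int]:
    """Server side: multiplying ciphertexts adds the underlying plaintexts."""
    total = list(encrypted_updates[0])
    for update in encrypted_updates[1:]:
        total = [a * b % N2 for a, b in zip(total, update)]
    return total


if __name__ == "__main__":
    # Three users, each with a two-coordinate model update.
    updates = [[0.5, -1.25], [0.25, 2.0], [-0.75, 0.5]]
    encrypted = [[encrypt(x) for x in u] for u in updates]
    summed = aggregate(encrypted)
    print([decrypt(c) for c in summed])   # prints [0.0, 1.25]
```

The server only ever multiplies ciphertexts, so it learns nothing about individual updates unless it also holds the private key. This is why a poisoning defense cannot simply inspect updates at the server, and why the framework has users run the defense locally and prove the outcome instead.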
