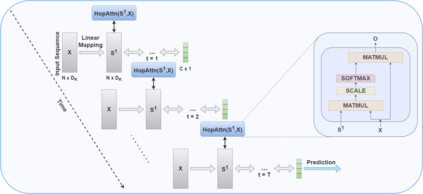

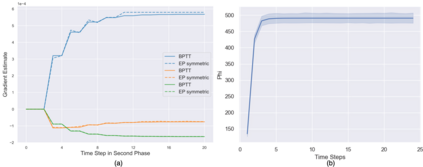

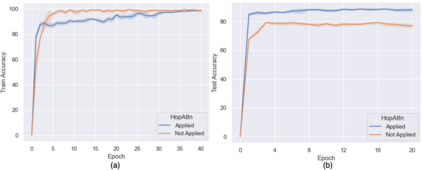

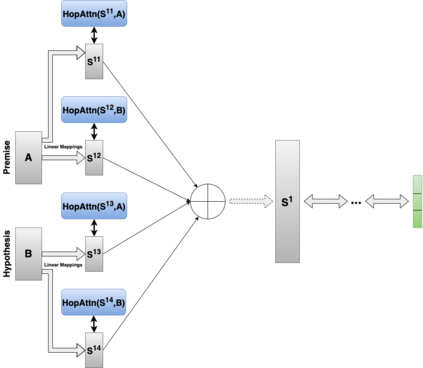

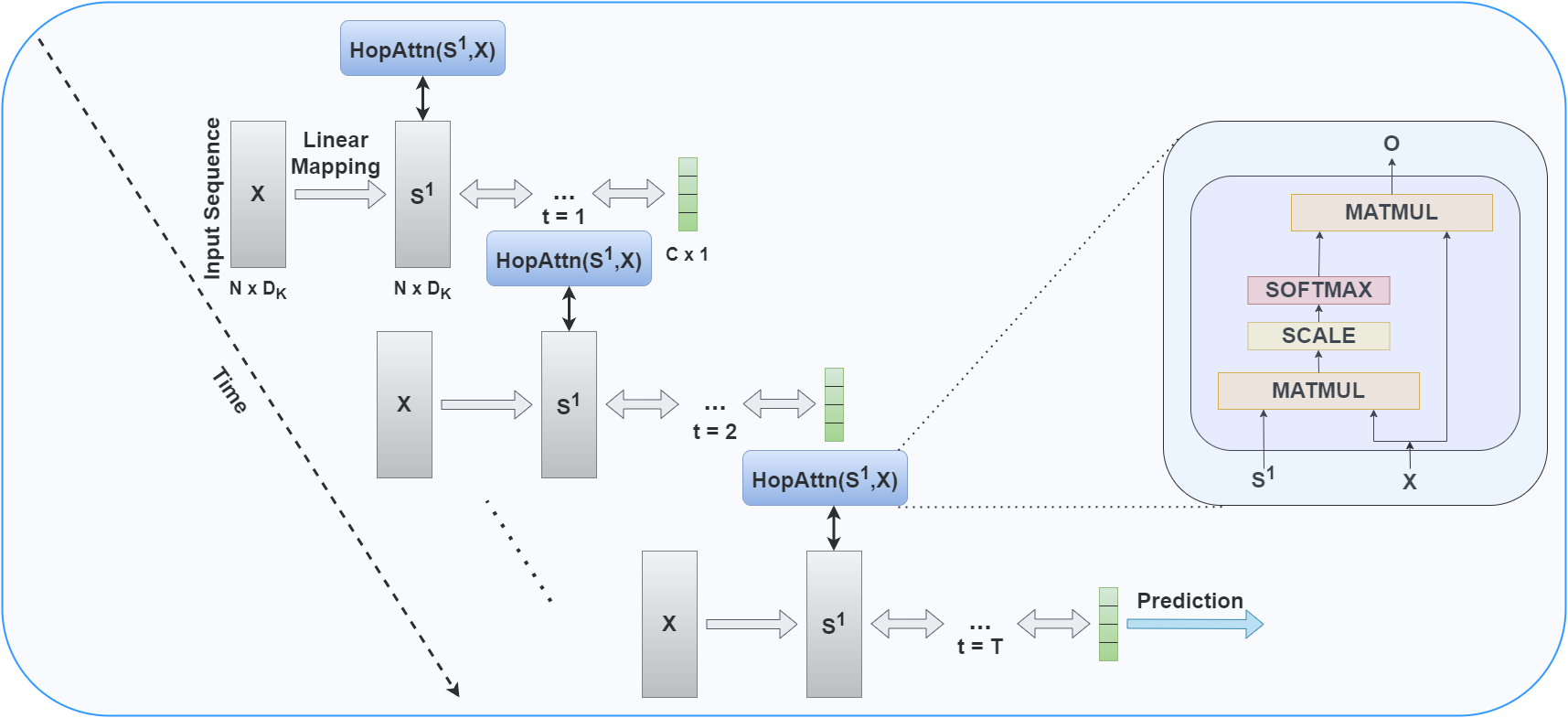

Equilibrium Propagation (EP) is a powerful and more bio-plausible alternative to conventional learning frameworks such as backpropagation. Its effectiveness stems from the fact that it relies only on local computations and requires only one kind of computational unit during both of its training phases, which makes it better suited to domains such as bio-inspired neuromorphic computing. The dynamics of an EP model are governed by an energy function, and the internal states of the model converge to a steady state by following the state transition rules that this energy function defines. However, by definition, EP requires the input to the model (a convergent RNN) to be static in both phases of training. It is therefore not possible to design a model for sequence classification using EP with an LSTM- or GRU-like architecture. In this paper, we leverage recent developments in modern Hopfield networks to further understand energy-based models and to develop solutions for complex sequence classification tasks using EP, while satisfying its convergence criteria and maintaining its theoretical similarities with recurrent backpropagation. We explore the possibility of integrating modern Hopfield networks as an attention mechanism with the convergent RNN models used in EP, thereby extending its applicability, for the first time, to two different sequence classification tasks in natural language processing, viz. sentiment analysis (IMDB dataset) and natural language inference (SNLI dataset).
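
To make the idea of an energy-governed state settling over a static input more concrete, the following is a minimal sketch of the standard modern Hopfield retrieval update, which has the same form as softmax attention over a fixed set of stored patterns. It is not the architecture proposed in the paper. The function names (`hopfield_settle`, `energy`), the toy patterns standing in for token embeddings, and the values of `beta`, `tol`, and `max_steps` are all assumptions made for illustration.

```python
import numpy as np

def softmax(z):
    # Numerically stable softmax over a 1-D score vector.
    z = z - np.max(z)
    e = np.exp(z)
    return e / e.sum()

def energy(patterns, xi, beta):
    # Modern Hopfield energy up to an additive constant:
    # E(xi) = -(1/beta) * logsumexp(beta * X xi) + 0.5 * xi.xi
    scores = beta * (patterns @ xi)
    m = np.max(scores)
    lse = (m + np.log(np.sum(np.exp(scores - m)))) / beta
    return -lse + 0.5 * float(xi @ xi)

def hopfield_settle(patterns, query, beta=1.0, max_steps=50, tol=1e-6):
    # Iterate xi <- X^T softmax(beta * X xi) until the state stops changing.
    # `patterns` (N, d) stays fixed throughout, i.e. the input is static.
    xi = np.asarray(query, dtype=float)
    energies = [energy(patterns, xi, beta)]
    for step in range(1, max_steps + 1):
        attn = softmax(beta * (patterns @ xi))  # attention weights over patterns
        xi_next = patterns.T @ attn             # attention-weighted readout
        energies.append(energy(patterns, xi_next, beta))
        converged = np.linalg.norm(xi_next - xi) < tol
        xi = xi_next
        if converged:
            break
    return xi, attn, step, energies

# Toy, hypothetical "sequence" of 4 token embeddings (scaled one-hot rows).
patterns = 3.0 * np.eye(4)
# Noisy query close to the third pattern.
query = patterns[2] + 0.2

state, attn, steps, energies = hopfield_settle(patterns, query, beta=2.0)
print("steps:", steps)
print("attention:", np.round(attn, 4))
print("energy before/after:", energies[0], energies[-1])
```

In this toy setting the state settles onto the stored pattern nearest the query and the energy does not increase. This is the kind of convergent behaviour over a fixed input that EP relies on, shown here only in isolation from any training procedure.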
