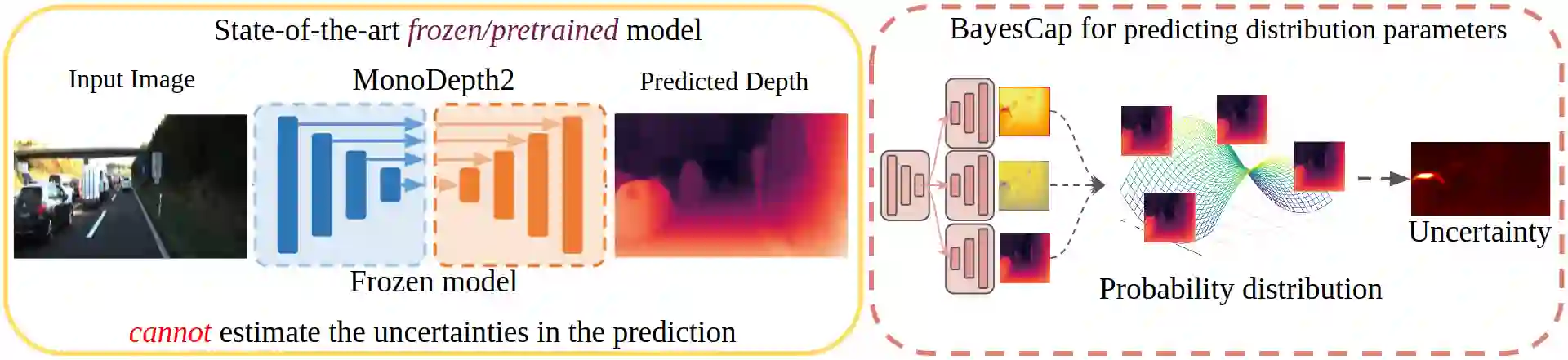

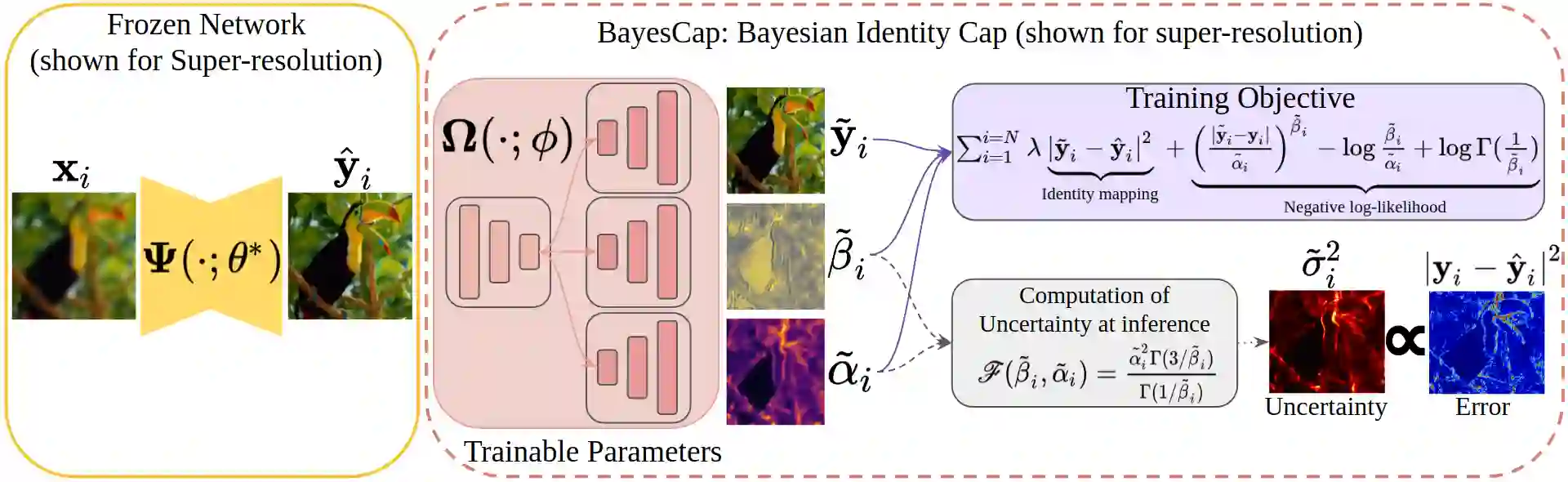

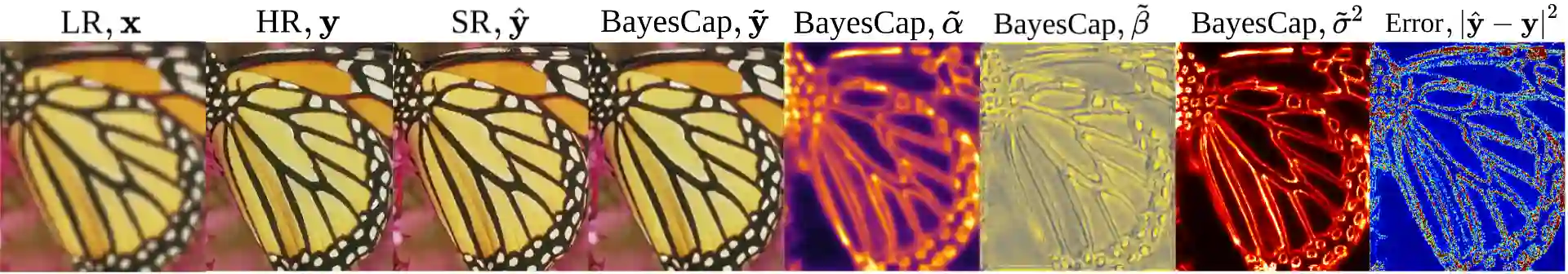

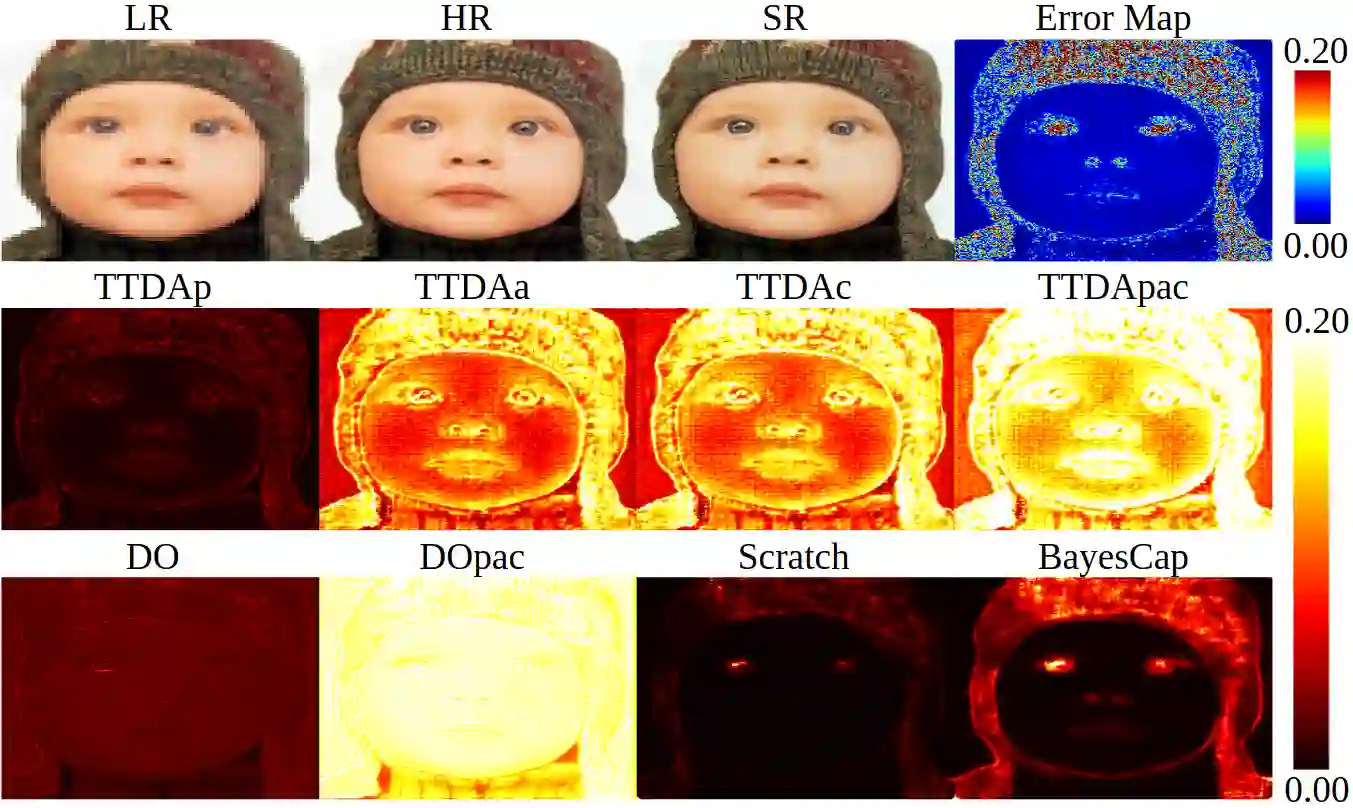

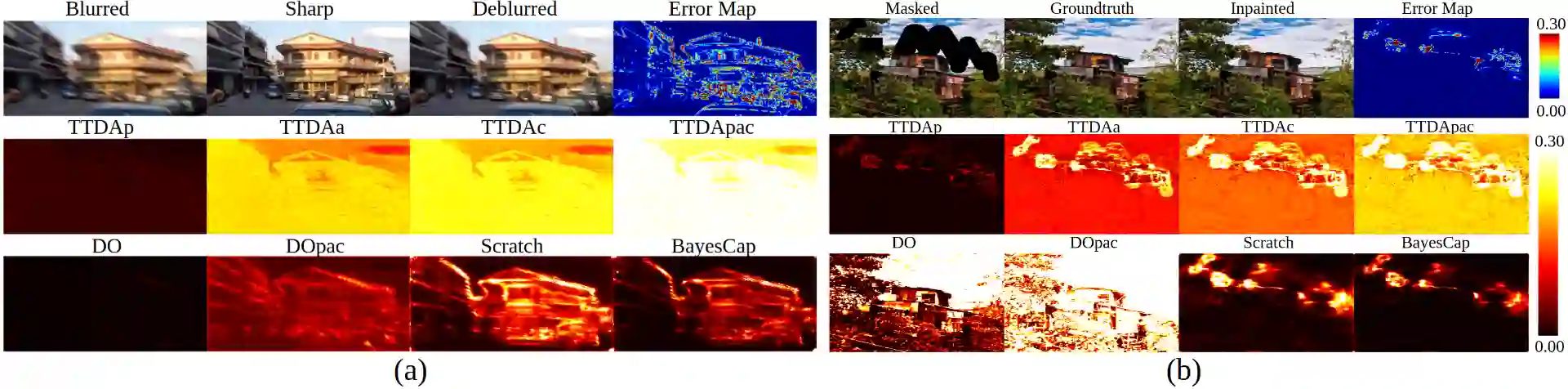

High-quality, calibrated uncertainty estimates are crucial for numerous real-world applications, especially for deployed deep learning-based ML systems. While Bayesian deep learning techniques allow uncertainty estimation, training them on large-scale datasets is expensive and does not always yield models competitive with their non-Bayesian counterparts. Moreover, many of the high-performing deep learning models that are already trained and deployed are non-Bayesian and do not provide uncertainty estimates. To address these issues, we propose BayesCap, which learns a Bayesian identity mapping for the frozen model, allowing uncertainty estimation. BayesCap is a memory-efficient method that can be trained on a small fraction of the original dataset. It enhances pretrained non-Bayesian computer vision models by providing calibrated uncertainty estimates for their predictions without (i) hampering the performance of the model and (ii) requiring expensive retraining of the model from scratch. The proposed method is agnostic to architecture and task. We show its efficacy on a wide variety of tasks with a diverse set of architectures, including image super-resolution, deblurring, inpainting, and crucial applications such as medical image translation. Moreover, we apply the derived uncertainty estimates to detect out-of-distribution samples in critical scenarios like depth estimation in autonomous driving. Code is available at https://github.com/ExplainableML/BayesCap.
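To make the idea of a "Bayesian identity mapping" more concrete, the following is a minimal sketch of what a training objective for such a cap could look like. It is not taken from the BayesCap repository. It assumes a small cap network that takes the frozen model's output and predicts a mean and a log-variance per element, and it assumes a plain Gaussian likelihood for simplicity; the abstract does not specify the distribution. The function names `identity_loss`, `gaussian_nll`, and `cap_objective`, and the `identity_weight` parameter, are hypothetical.

```python
import math
from typing import Sequence


def identity_loss(frozen_output: Sequence[float], cap_mean: Sequence[float]) -> float:
    """Mean squared error between the frozen model's output and the cap's mean.

    Keeping this term small means the cap reproduces the frozen prediction,
    so the original model's performance is left untouched.
    """
    total = sum((f - m) ** 2 for f, m in zip(frozen_output, cap_mean))
    return total / len(frozen_output)


def gaussian_nll(
    target: Sequence[float],
    mean: Sequence[float],
    log_var: Sequence[float],
) -> float:
    """Average per-element Gaussian negative log-likelihood.

    ASSUMPTION: a Gaussian likelihood is used here only for illustration.
    """
    total = 0.0
    for t, m, lv in zip(target, mean, log_var):
        total += 0.5 * (lv + (t - m) ** 2 / math.exp(lv) + math.log(2 * math.pi))
    return total / len(target)


def cap_objective(
    frozen_output: Sequence[float],
    target: Sequence[float],
    cap_mean: Sequence[float],
    cap_log_var: Sequence[float],
    identity_weight: float = 1.0,  # hypothetical trade-off weight
) -> float:
    """Identity term plus likelihood term, for the cap's parameters only."""
    return identity_weight * identity_loss(frozen_output, cap_mean) + gaussian_nll(
        target, cap_mean, cap_log_var
    )
```

Under these assumptions, only the cap's parameters would be optimized while the pretrained model stays frozen. The likelihood term rewards a larger predicted variance wherever the frozen prediction is far from the ground truth, which is what lets the variance serve as an uncertainty estimate.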
