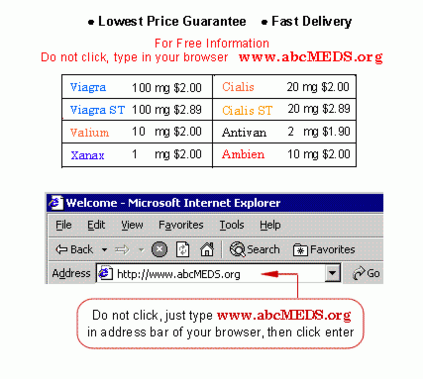

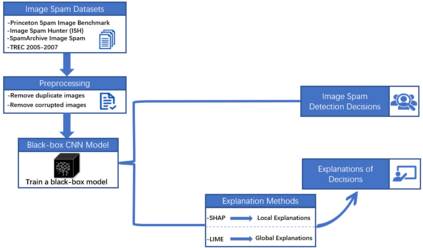

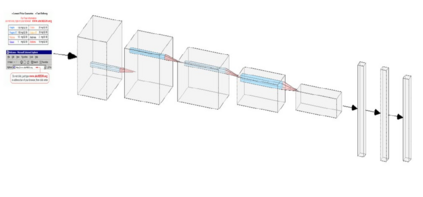

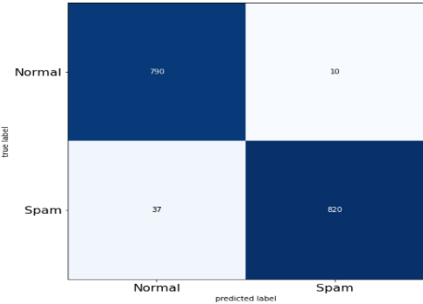

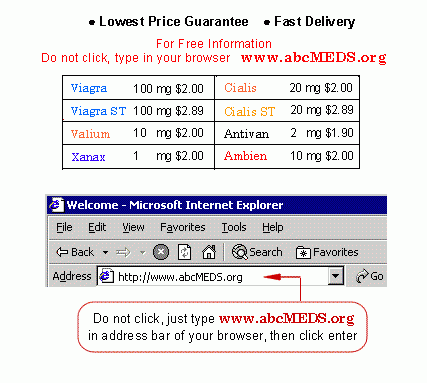

Image spam threat detection has remained a popular research area as the internet has expanded rapidly. This research presents an explainable framework for detecting spam images using Convolutional Neural Network (CNN) algorithms and Explainable Artificial Intelligence (XAI) algorithms. In this work, we use a CNN model to classify image spam, while post-hoc XAI methods, including Local Interpretable Model-Agnostic Explanations (LIME) and Shapley Additive Explanations (SHAP), are deployed to explain the decisions that the black-box CNN models make about spam image detection. We train and evaluate the proposed approach on a dataset of 6,636 images, comprising spam images and normal images collected from three publicly available email corpora. The experimental results show that the proposed framework achieves satisfactory detection performance across different metrics, while the model-agnostic XAI algorithms can explain the decisions of different models, providing a basis for comparison in future studies.
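To make concrete what a SHAP explanation attributes, the following is a minimal sketch of *exact* Shapley values over a handful of image regions. It is not the paper's pipeline: the function names `shapley_values` and `toy_spam_score`, the three-region split, and the region scores are all hypothetical stand-ins, with `toy_spam_score` playing the role of the CNN's spam probability when only the "kept" regions are visible and the rest are masked to a baseline. Exact enumeration costs O(2^n), which is why practical tools such as the SHAP library approximate these values instead.

```python
from itertools import combinations
from math import factorial


def shapley_values(score, n_features):
    """Exact Shapley value of each feature for the set function `score`.

    `score` takes a frozenset of kept feature indices and returns a float.
    Enumerates every coalition, so it is only feasible for small n.
    """
    values = []
    for i in range(n_features):
        others = [j for j in range(n_features) if j != i]
        phi = 0.0
        for size in range(len(others) + 1):
            # Shapley weight: |S|! * (n - |S| - 1)! / n!
            weight = (factorial(size) * factorial(n_features - size - 1)
                      / factorial(n_features))
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal contribution of feature i to coalition S.
                phi += weight * (score(s | {i}) - score(s))
        values.append(phi)
    return values


def toy_spam_score(kept):
    """Hypothetical spam score for a 3-region image (not a real CNN).

    Region 0: embedded text block, region 1: logo, region 2: background.
    """
    score = 0.1                  # score when every region is masked
    if 0 in kept:
        score += 0.5             # text region alone pushes toward spam
    if 1 in kept:
        score += 0.1
    if 0 in kept and 1 in kept:
        score += 0.2             # interaction between text and logo
    return score


if __name__ == "__main__":
    phis = shapley_values(toy_spam_score, 3)
    print([round(p, 3) for p in phis])
```

Running it prints `[0.6, 0.2, 0.0]`: the text region receives its own effect plus half of the text/logo interaction, the background receives nothing, and the values sum to 0.8, the gap between the fully visible score (0.9) and the fully masked score (0.1). This "efficiency" property is what lets SHAP heatmaps be read as a decomposition of a single prediction.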

翻译:由于互联网的惊人扩张,图像垃圾威胁探测一直是一个受欢迎的研究领域。这一研究为利用进化神经网络(CNN)算法和可解释的人工智能算法(XAI)算法探测垃圾图像提供了一个可解释的框架。在这项工作中,我们使用CNN模型对图像垃圾检测分别进行分类,而后热XAI方法,包括当地解释模型Agnistic Rescription(LIME)和Shapley Additive解释(SHAP)被采用是为了解释黑盒CNN模型对垃圾邮件图像探测所作的决定。我们培训并随后评价了6636图像数据集的拟议方法的性能,包括垃圾图像和从三种公开的电子邮件体收集的正常图像。实验结果显示,拟议的框架在不同性能指标方面取得了令人满意的检测结果,而依赖模型的XAI算法可以解释不同模型的决定,这些模型可用于未来研究的比较。