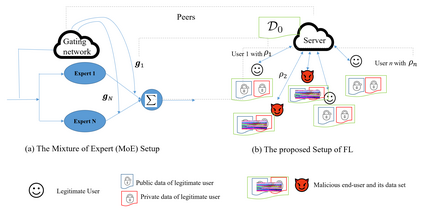

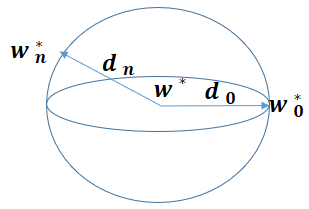

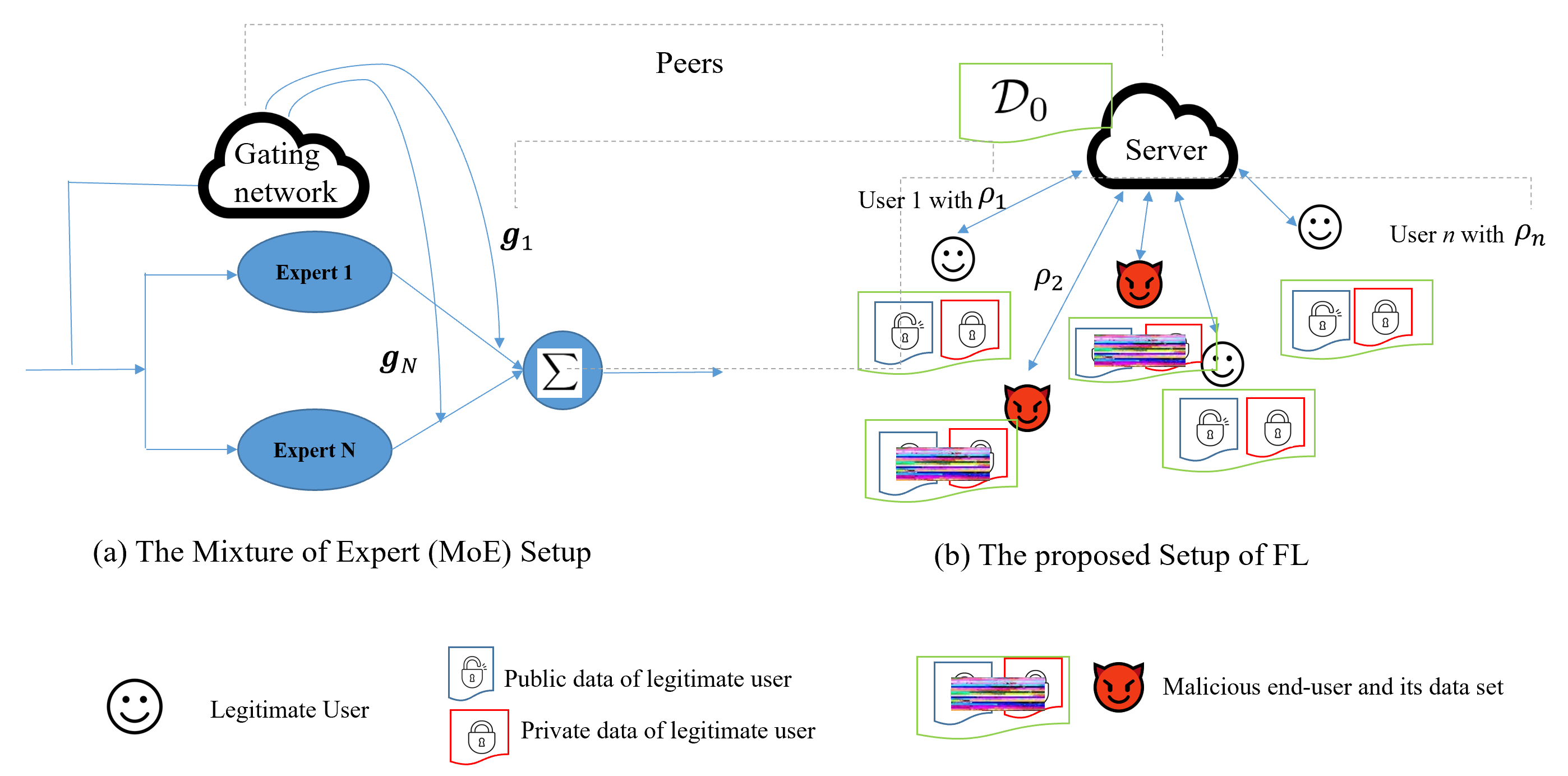

We present a novel weighted-average model based on the mixture of experts (MoE) concept to make federated learning (FL) robust against poisoned, corrupted, or outdated local models. These threats, together with the non-IID nature of the users' data sets, can considerably reduce the accuracy of the FL model. Our proposed MoE-FL setup relies on trust between the users and the server: the users share a portion of their public data sets with the server. The server then applies a robust aggregation method, either by solving an optimization problem or by using a Softmax method, to highlight outlier models and reduce their adverse effect on the FL process. Our experiments show that MoE-FL outperforms the traditional aggregation approach when attackers contribute a high rate of poisoned data.
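The Softmax variant of the server-side aggregation can be sketched as follows. This is a minimal illustration under assumptions the abstract does not state: each local model is a flat parameter vector (here a toy linear regressor), the server scores each model by its mean squared error on the public data the users shared, and the aggregation weights are a softmax over the negated losses with a hypothetical `temperature` parameter. The function names and the toy data are hypothetical and are not the authors' implementation.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]
Sample = Tuple[Vector, float]


def mse(w: Vector, data: Sequence[Sample]) -> float:
    # Mean squared error of a linear model y_hat = w . x on (x, y) pairs.
    errors = [(sum(wi * xi for wi, xi in zip(w, x)) - y) ** 2 for x, y in data]
    return sum(errors) / len(errors)


def softmax(scores: Sequence[float], temperature: float = 1.0) -> List[float]:
    # Numerically stable softmax: shift by the max score before exponentiating.
    scaled = [s / temperature for s in scores]
    top = max(scaled)
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def plain_average(models: Sequence[Vector]) -> Vector:
    # Baseline: equal-weight average of every parameter across clients.
    return [sum(params) / len(models) for params in zip(*models)]


def softmax_weighted_average(
    models: Sequence[Vector], public_data: Sequence[Sample], temperature: float = 1.0
) -> Tuple[Vector, List[float]]:
    # Hypothetical scoring rule: lower loss on the server's shared public data
    # gives a higher weight, so outlier (e.g. poisoned) models are down-weighted.
    losses = [mse(w, public_data) for w in models]
    weights = softmax([-loss for loss in losses], temperature)
    aggregated = [sum(g * p for g, p in zip(weights, params)) for params in zip(*models)]
    return aggregated, weights


# Toy example: the public data follows y = 2*x0 - 1*x1.
public_data = [([1.0, 0.0], 2.0), ([0.0, 1.0], -1.0), ([1.0, 1.0], 1.0)]
clients = [[2.0, -1.0], [1.9, -1.1], [2.1, -0.9], [-5.0, 8.0]]  # last client is poisoned

naive = plain_average(clients)
robust, weights = softmax_weighted_average(clients, public_data)
print("plain average:   ", naive)
print("softmax-weighted:", robust)
print("weights:", weights)
```

In this toy run the poisoned fourth client receives a near-zero weight, so the aggregate stays close to the honest models' parameters, while the equal-weight average is pulled far off. The softmax weights play the role of an MoE gating function; lowering `temperature` concentrates weight on the best-scoring models, and raising it moves the result toward plain averaging.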

翻译:我们提出了基于专家混合概念的新颖加权平均模型,以在联邦学习中针对有毒/破坏/过时的地方模型提供强健性,这些威胁以及数据集的非二维性质可大大降低FL模型的准确性。我们提议的MOE-FL设置依靠用户与服务器之间的信任,用户在服务器上分享其部分公共数据集。服务器通过解决优化问题或软体法,采用强力汇总方法突出外部案例并减少其对FL进程的不利影响。我们的实验表明,ME-FL超出了传统汇总方法的性能,因为攻击者提供了高毒性数据。