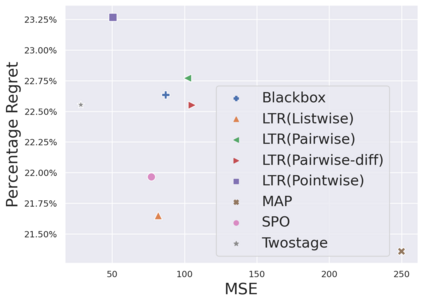

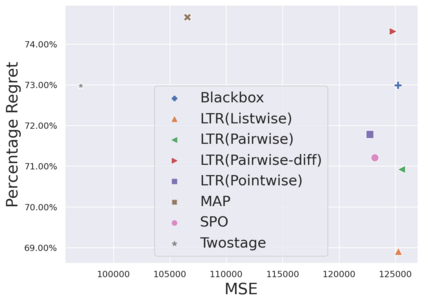

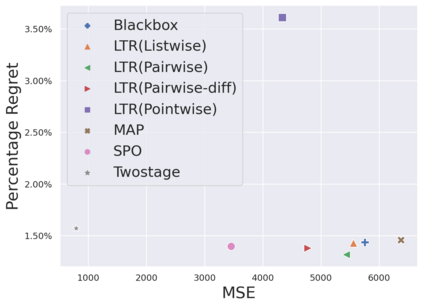

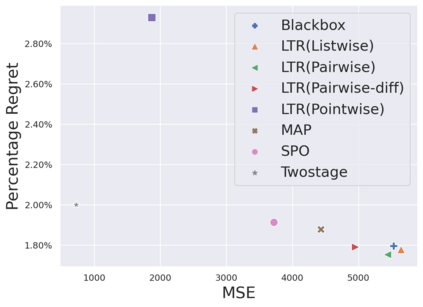

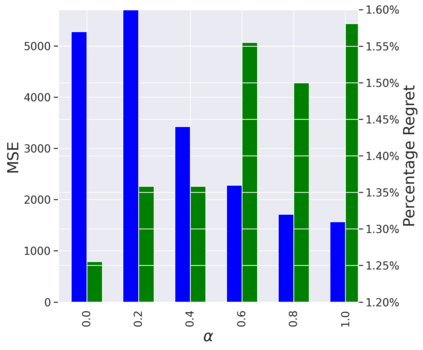

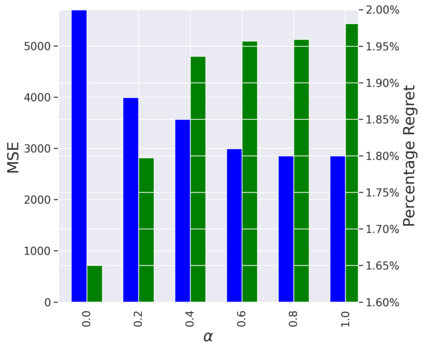

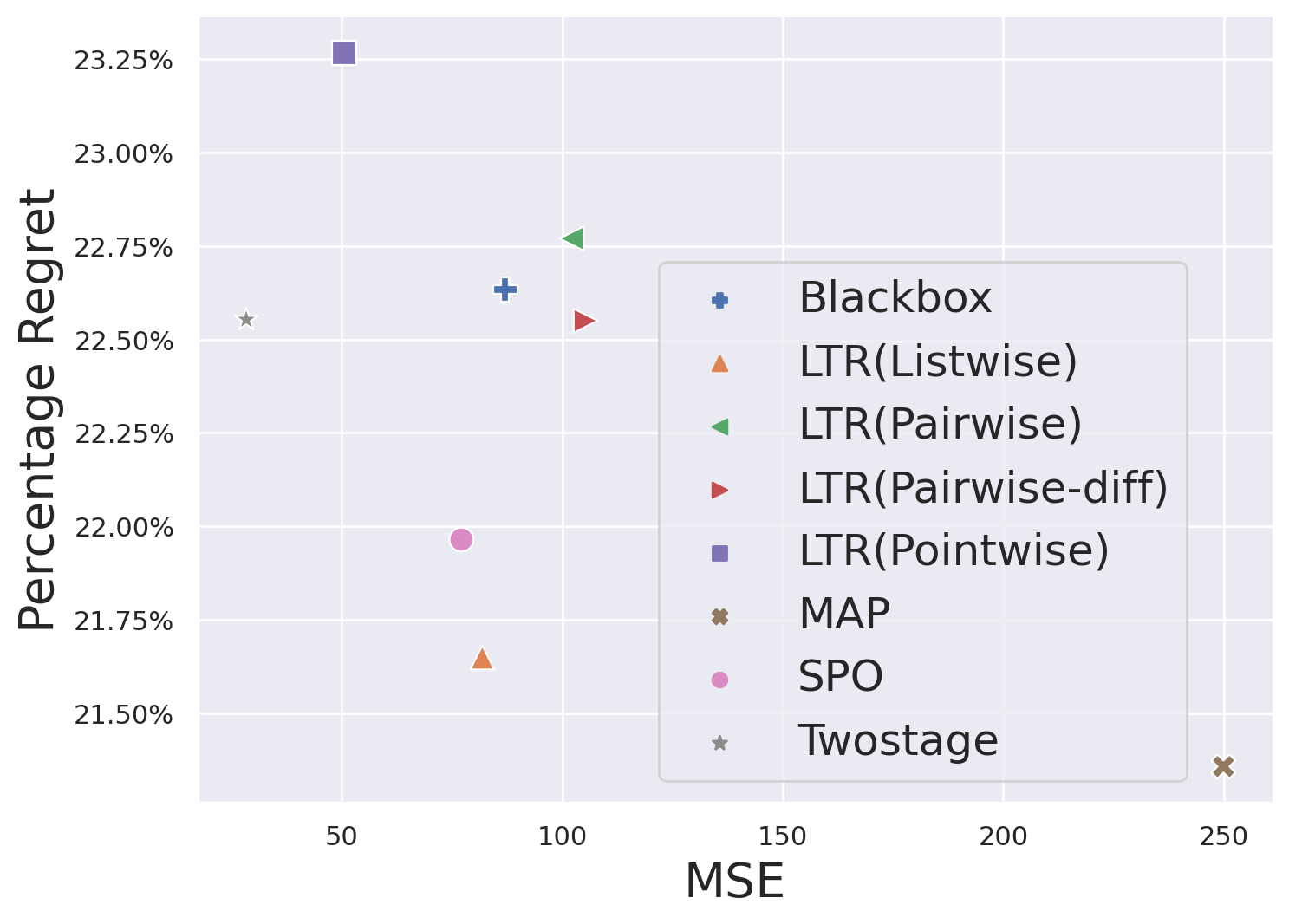

In recent years, predict-and-optimize approaches, also known as decision-focused learning, have received increasing attention. In this setting, the predictions of machine learning (ML) models are used as estimated cost coefficients in the objective function of a discrete combinatorial optimization problem that is solved to make decisions. Predict-and-optimize approaches propose to train the ML models, often neural networks, by directly optimizing the quality of the decisions made by the optimization solver. Building on recent work that proposed a Noise Contrastive Estimation loss over a subset of the solution space, we observe that predict-and-optimize can more generally be seen as a learning-to-rank problem: the goal is to learn an objective function that ranks the feasible points correctly. This approach is independent of the optimization method used and of the form of the objective function. We develop pointwise, pairwise and listwise ranking loss functions, which can be differentiated in closed form given a subset of solutions. We empirically compare the quality of our generic method with existing predict-and-optimize approaches and obtain competitive results. Furthermore, controlling the subset of solutions makes it possible to reduce the runtime considerably, with limited effect on regret (the extra true cost incurred by acting on predicted rather than true cost coefficients).
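The abstract describes the three ranking losses only in words. The sketch below is a minimal illustration under several assumptions that are not taken from the text above. It assumes a linear minimization objective f(v, c) = c · v and a small precomputed cache of feasible solutions standing in for the "subset of solutions". The specific loss forms are illustrative choices: mean squared error on objective values (pointwise), a hinge with a hypothetical `margin` (pairwise), and a ListNet-style cross-entropy with a hypothetical temperature `tau` (listwise). The paper's exact formulations may differ. Regret is also approximated over the cache instead of by calling a solver.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def objective(costs: Vector, solution: Vector) -> float:
    """Assumed linear objective f(v, c) = c . v, to be minimized."""
    return sum(c * v for c, v in zip(costs, solution))


def best_in_cache(costs: Vector, cache: Sequence[Vector]) -> Vector:
    """Cheapest cached solution under the given cost vector."""
    return min(cache, key=lambda v: objective(costs, v))


def regret(c_true: Vector, c_pred: Vector, cache: Sequence[Vector]) -> float:
    """True cost of the decision made on predicted costs minus the best true cost.

    Simplification (assumption): both optima are searched in the cache, not with a solver.
    """
    v_pred = best_in_cache(c_pred, cache)
    v_true = best_in_cache(c_true, cache)
    return objective(c_true, v_pred) - objective(c_true, v_true)


def pointwise_loss(c_true: Vector, c_pred: Vector, cache: Sequence[Vector]) -> float:
    """Mean squared error between predicted and true objective values per cached solution."""
    errors = [(objective(c_pred, v) - objective(c_true, v)) ** 2 for v in cache]
    return sum(errors) / len(errors)


def pairwise_loss(c_true: Vector, c_pred: Vector, cache: Sequence[Vector],
                  margin: float = 0.1) -> float:
    """Hinge loss: under the predicted costs, the true-optimal solution should beat
    every other cached solution by at least `margin` (hypothetical parameter)."""
    v_star = best_in_cache(c_true, cache)
    f_star = objective(c_pred, v_star)
    others = [v for v in cache if list(v) != list(v_star)]
    if not others:
        return 0.0
    hinges = [max(0.0, margin + f_star - objective(c_pred, v)) for v in others]
    return sum(hinges) / len(hinges)


def _log_softmax_neg(values: List[float], tau: float) -> List[float]:
    """log softmax(-values / tau); shifting by the max logit keeps exp() stable."""
    logits = [-x / tau for x in values]
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_z for z in logits]


def _listwise_dists(c_true: Vector, c_pred: Vector, cache: Sequence[Vector],
                    tau: float) -> Tuple[List[float], List[float]]:
    # p: target distribution from true costs; log_q: model log-distribution from predictions.
    log_p = _log_softmax_neg([objective(c_true, v) for v in cache], tau)
    log_q = _log_softmax_neg([objective(c_pred, v) for v in cache], tau)
    return [math.exp(lp) for lp in log_p], log_q


def listwise_loss(c_true: Vector, c_pred: Vector, cache: Sequence[Vector],
                  tau: float = 1.0) -> float:
    """ListNet-style cross-entropy between the two distributions over the cache."""
    p, log_q = _listwise_dists(c_true, c_pred, cache, tau)
    return -sum(pi * lq for pi, lq in zip(p, log_q))


def listwise_grad(c_true: Vector, c_pred: Vector, cache: Sequence[Vector],
                  tau: float = 1.0) -> List[float]:
    """Closed-form gradient w.r.t. c_pred: -(1/tau) * sum_v (q_v - p_v) * v."""
    p, log_q = _listwise_dists(c_true, c_pred, cache, tau)
    q = [math.exp(lq) for lq in log_q]
    return [-sum((qi - pi) * v[j] for pi, qi, v in zip(p, q, cache)) / tau
            for j in range(len(c_pred))]


if __name__ == "__main__":
    # Hypothetical toy problem: pick exactly one of three items.
    cache = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    c_true = [1.0, 2.0, 3.0]
    c_pred = [2.5, 2.0, 1.0]
    print(regret(c_true, c_pred, cache))          # 2.0
    print(pointwise_loss(c_true, c_pred, cache))  # about 2.083
    print(pairwise_loss(c_true, c_pred, cache))   # about 1.1
    print(listwise_loss(c_true, c_pred, cache))
    print(listwise_grad(c_true, c_pred, cache))
```

In this sketch the listwise gradient has the familiar softmax form (predicted minus target probabilities, mapped back through the cached solutions), so no solver call is needed in the backward pass. The per-example cost of every loss grows with the cache size rather than with solver calls, which illustrates how restricting the subset of solutions can trade accuracy for runtime.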

翻译:在过去几年里,预测和优化方法,又称决策重点学习,受到越来越多的关注。在这一背景下,机器学习模型的预测被作为决策的单独组合优化问题客观功能中的成本估计系数。预测和优化方法建议通过直接优化优化优化优化解决者所作决定的质量,来培训ML模型,通常是神经网络模型。根据最近提出的对解决方案空间中一部分的噪音对比估计损失的建议,我们观察到,预测和优化可被更普遍地视为一个从学到学的问题。目标是学习一种客观的功能,正确排列可行的点。这种方法独立于所使用的优化方法和目标功能的形式。我们开发了点性、对等和列表性损失排序功能,这些功能可以以封闭的形式加以区分,并有一定的解决方案。我们从经验上调查了我们通用方法的质量,与现有的预测和优化方法相比,并具有竞争性效果。此外,控制子类解决方案的高度控制了具有竞争性效果的分数。此外,对子类控制了具有竞争性效果的分数控制,并在很大程度上控制了分数解决方案。