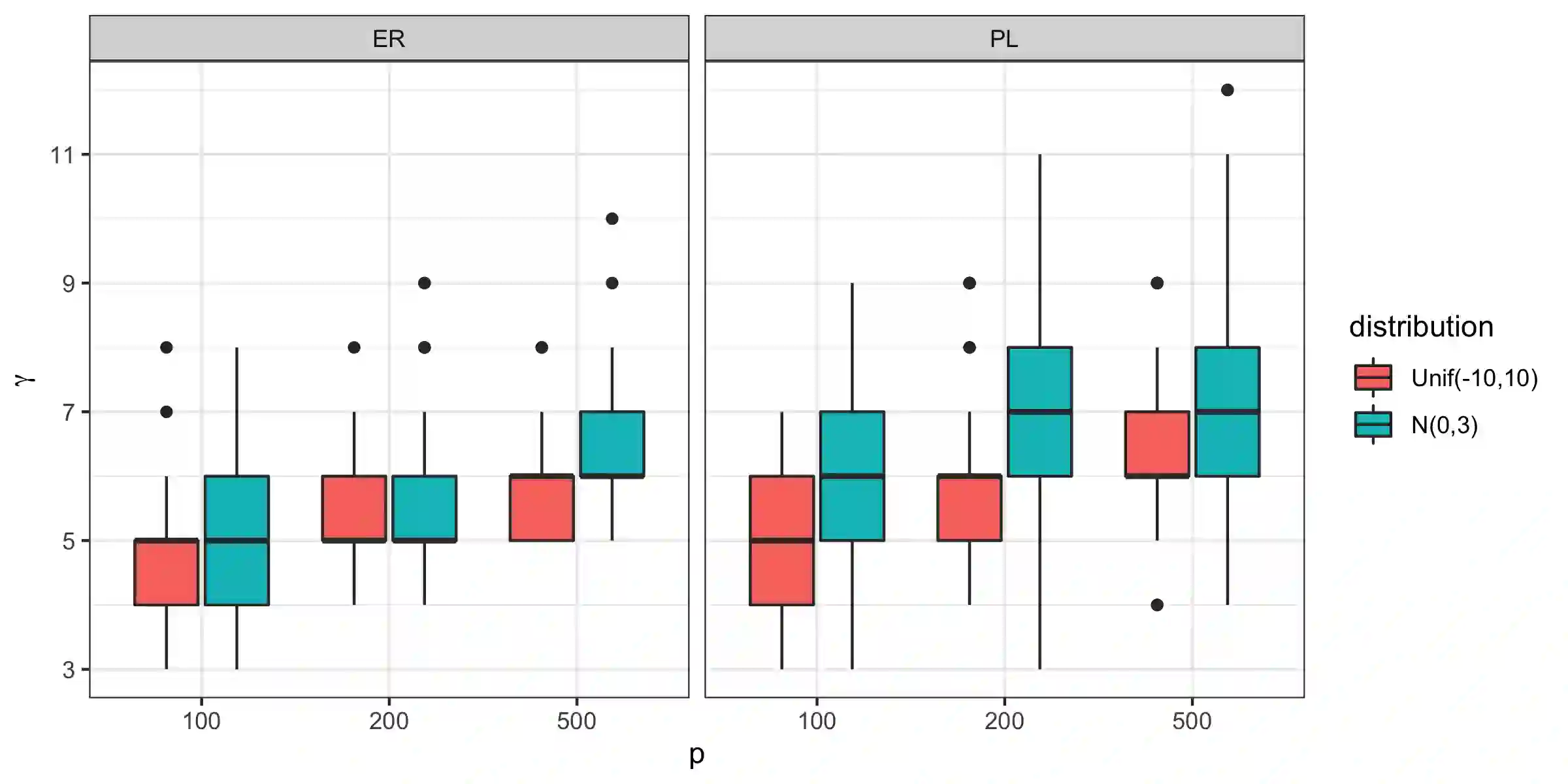

We consider the problem of learning causal structures in sparse high-dimensional settings that may be subject to (potentially many) unmeasured confounders as well as selection bias. Drawing on the structure of common families of large random networks and on the representation of local structures in linear structural equation models (SEMs), we propose a new local notion of sparsity for consistent structure learning in the presence of latent and selection variables. We also develop a new version of the Fast Causal Inference (FCI) algorithm with reduced computational and sample complexity, which we refer to as local FCI (lFCI). The new notion of sparsity allows for highly connected hub nodes, which are common in real-world networks but problematic for existing methods. Our numerical experiments indicate that the lFCI algorithm achieves state-of-the-art performance across many classes of large random networks and outperforms existing methods on networks containing hub nodes.
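
The setting described above (a linear SEM over a large random network with hub nodes, where some variables are unmeasured and samples are subject to selection) can be made concrete with a small simulation. This is a minimal sketch under stated assumptions, not the authors' experimental setup and not the lFCI algorithm: the preferential-attachment generator, the helper names `random_hub_dag` and `sample_linear_sem`, the edge-weight range, the number of latent variables, and the selection rule are all hypothetical choices made for illustration.

```python
import numpy as np


def random_hub_dag(p, m, rng):
    """Hypothetical generator: each node j picks up to m parents among nodes
    0..j-1 with probability proportional to (current degree + 1), which tends
    to create hub nodes. Edges go from lower to higher index, so the index
    order is a topological order and the graph is acyclic."""
    B = np.zeros((p, p))  # B[i, j] != 0 encodes the edge i -> j
    degree = np.zeros(p)
    for j in range(1, p):
        k = min(m, j)
        w = degree[:j] + 1.0
        parents = rng.choice(j, size=k, replace=False, p=w / w.sum())
        for i in parents:
            # Assumed edge weights: magnitude in [0.5, 1.0], random sign.
            B[i, j] = rng.uniform(0.5, 1.0) * rng.choice([-1.0, 1.0])
            degree[i] += 1
            degree[j] += 1
    return B


def sample_linear_sem(B, n, rng):
    """Sample n rows from the linear SEM X_j = sum_i B[i, j] X_i + eps_j with
    standard Gaussian noise, filling columns in topological (index) order."""
    p = B.shape[0]
    X = np.zeros((n, p))
    eps = rng.standard_normal((n, p))
    for j in range(p):
        # Columns >= j are still zero, and B[i, j] = 0 for i >= j anyway.
        X[:, j] = X @ B[:, j] + eps[:, j]
    return X


rng = np.random.default_rng(0)
p, n = 200, 1000
B = random_hub_dag(p, m=2, rng=rng)
X = sample_linear_sem(B, n, rng)

# Total degree per node; hubs show up as a maximum far above the median.
degree = (B != 0).sum(axis=0) + (B != 0).sum(axis=1)
print("max degree:", degree.max(), "median degree:", np.median(degree))

# Hypothetical latent variables: 20 nodes are dropped from the observed data.
latent = rng.choice(p, size=20, replace=False)
observed = np.setdiff1d(np.arange(p), latent)

# Hypothetical selection mechanism: keep a row only when one hidden
# variable is positive, a simple stand-in for selection bias.
s = latent[0]
sel = X[:, s] > 0
X_obs = X[sel][:, observed]
print("observed data shape:", X_obs.shape)
```

Data of this kind, with latent columns removed and only selected rows retained, is the sort of input an FCI-style method receives. Preferential attachment is used here only because it is a familiar way to produce hubs; the abstract does not specify which random-network families the experiments use.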
