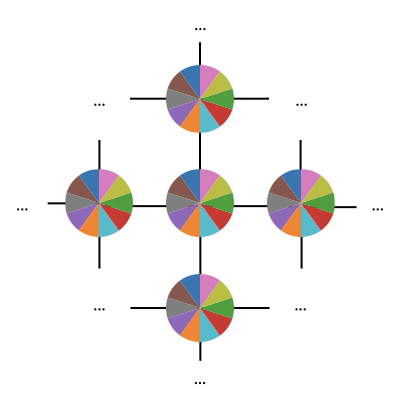

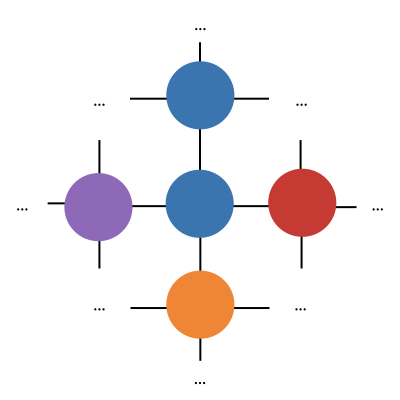

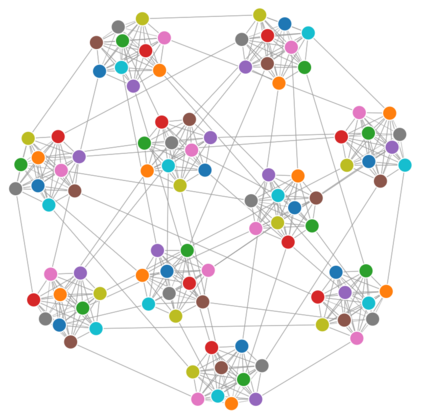

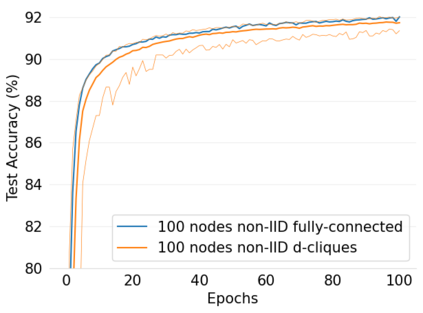

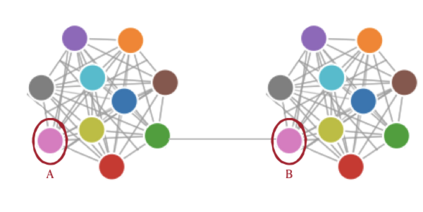

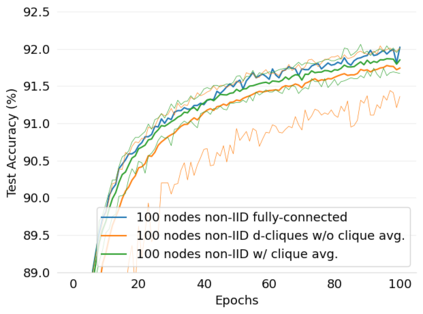

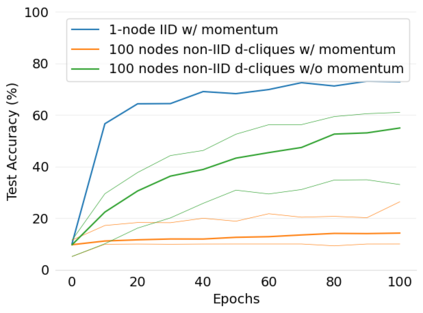

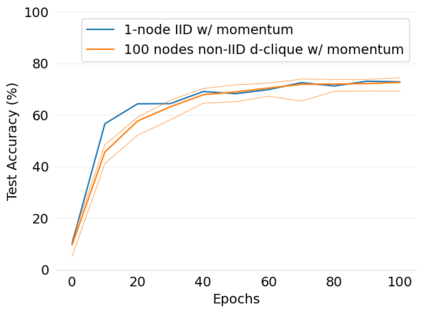

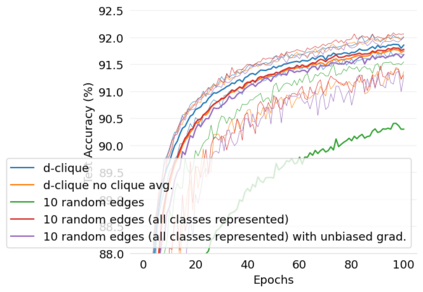

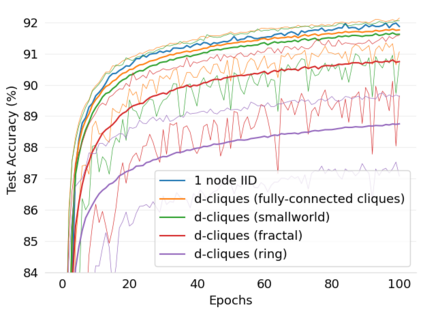

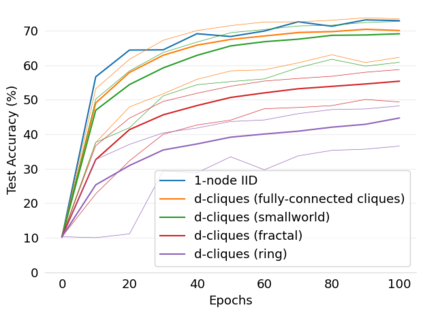

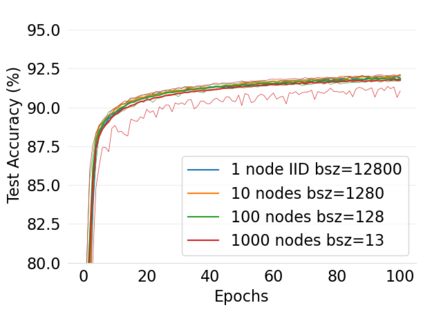

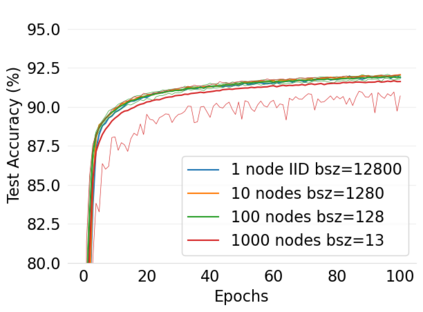

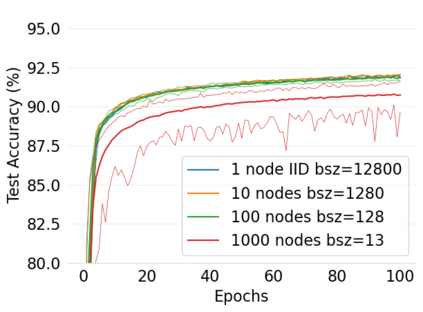

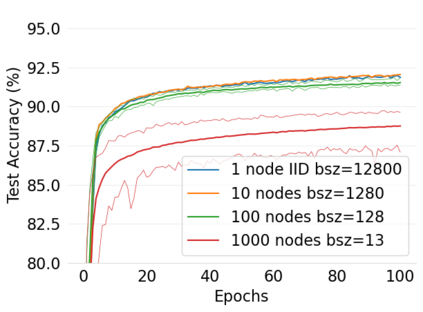

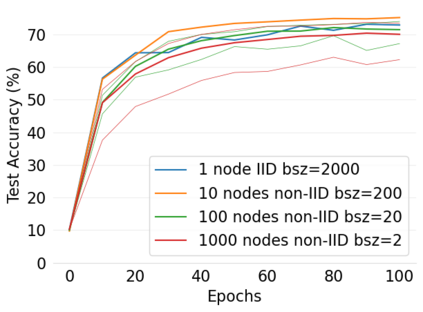

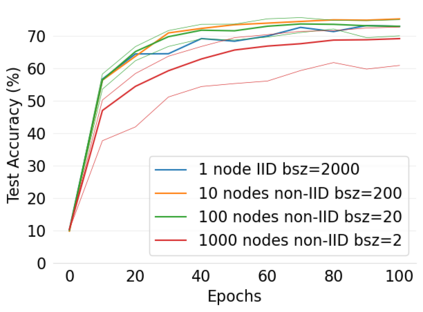

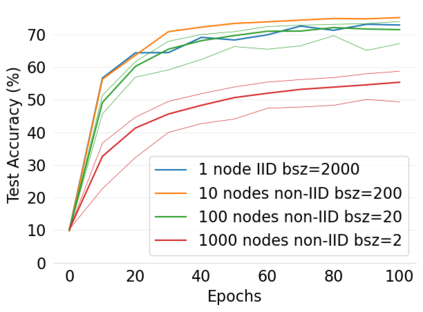

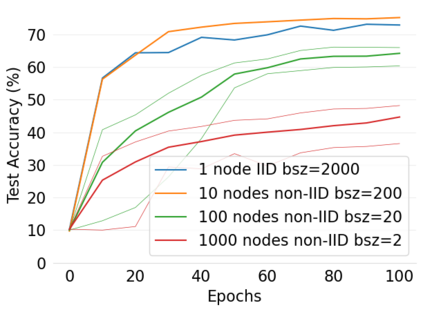

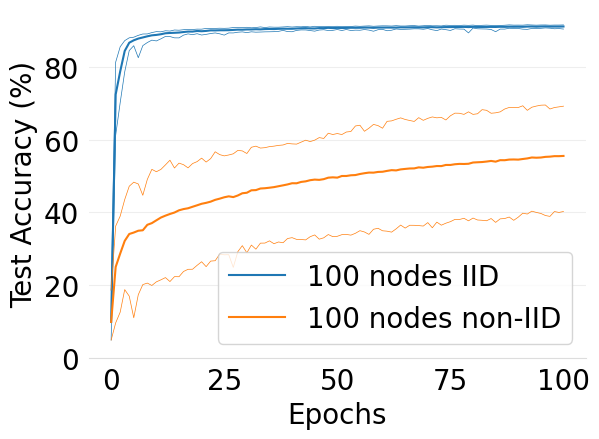

The convergence speed of machine learning models trained with Federated Learning is significantly affected by non-independent and identically distributed (non-IID) data partitions, and even more so in a fully decentralized setting without a central server. In this paper, we show that the impact of local class bias, an important type of data non-IIDness, can be significantly reduced by carefully designing the underlying communication topology. We present D-Cliques, a novel topology that reduces gradient bias by grouping nodes into interconnected cliques such that the local joint distribution in a clique is representative of the global class distribution. We also show how to adapt the updates of decentralized SGD to obtain unbiased gradients and implement an effective momentum with D-Cliques. Our empirical evaluation on MNIST and CIFAR10 demonstrates that our approach achieves a convergence speed similar to that of a fully-connected topology while significantly reducing the number of edges and messages. In a 1000-node topology, D-Cliques requires 98% fewer edges and 96% fewer total messages, with further gains possible using a small-world topology across cliques.
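To make the clique idea concrete, here is a minimal sketch of one way such a topology could be built and its edges counted. It is an illustration, not the authors' construction. It assumes each node holds examples of a single class, uses 10 classes and cliques of 10 nodes, and joins every pair of cliques with a single edge. The function names `build_cliques` and `d_cliques_edges` are hypothetical.

```python
from itertools import combinations


def build_cliques(node_classes, clique_size):
    """Greedily group nodes so each clique mixes as many classes as possible.

    node_classes maps node id -> class label (assumption: one class per node).
    """
    # Bucket node ids by the class they hold.
    buckets = {}
    for node, label in sorted(node_classes.items()):
        buckets.setdefault(label, []).append(node)

    cliques = []
    remaining = len(node_classes)
    while remaining > 0:
        clique = []
        # Visit classes with the most unassigned nodes first, one node per class.
        for label in sorted(buckets, key=lambda c: (-len(buckets[c]), c)):
            if len(clique) == clique_size:
                break
            if buckets[label]:
                clique.append(buckets[label].pop())
        cliques.append(clique)
        remaining -= len(clique)
    return cliques


def d_cliques_edges(cliques):
    """Return the edge set: full connectivity inside each clique, plus one
    edge per pair of cliques (assumption: always via each clique's first node,
    a simplification that does not balance the load across nodes)."""
    edges = set()
    for clique in cliques:
        edges.update(combinations(sorted(clique), 2))
    for a, b in combinations(range(len(cliques)), 2):
        edges.add(tuple(sorted((cliques[a][0], cliques[b][0]))))
    return edges


# Hypothetical setup: 1000 nodes, 10 classes, one class per node.
node_classes = {i: i % 10 for i in range(1000)}
cliques = build_cliques(node_classes, clique_size=10)
edges = d_cliques_edges(cliques)

full_edges = 1000 * 999 // 2          # edges in a fully-connected topology
reduction = 1 - len(edges) / full_edges
print(len(cliques), len(edges), full_edges, f"{reduction:.1%}")
```

Under these assumptions, each clique contains one node per class, so its joint class distribution matches the global one. The edge count is dominated by two terms: the edges inside cliques and the edges between cliques. Replacing the all-pairs connections between cliques with a sparser scheme, such as the small-world topology mentioned in the abstract, would shrink the second term.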
