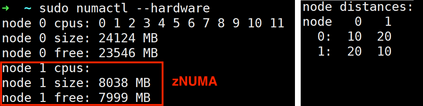

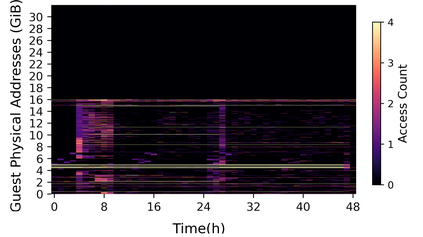

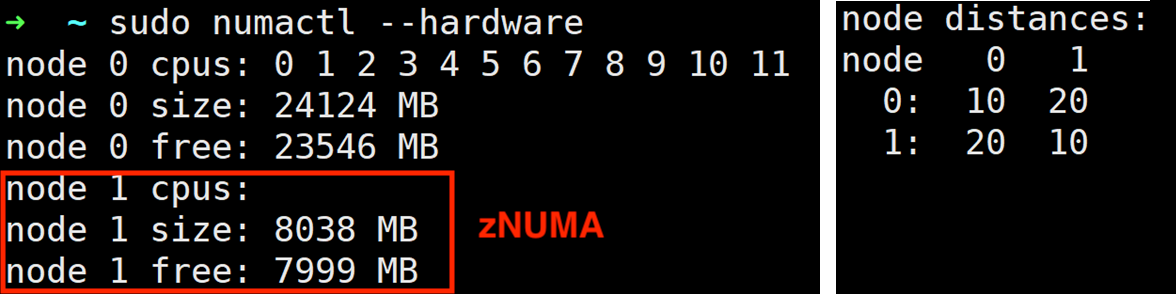

Public cloud providers seek to meet stringent performance requirements while keeping hardware cost low. A key driver of performance and cost is main memory. Memory pooling promises to improve DRAM utilization and thereby reduce costs. However, pooling is challenging under cloud performance requirements. This paper proposes Pond, the first memory pooling system that both meets cloud performance goals and significantly reduces DRAM cost. Pond builds on the Compute Express Link (CXL) standard for load/store access to pool memory and on two key insights. First, our analysis of cloud production traces shows that pooling across 8-16 sockets is enough to achieve most of the benefits. This enables a small-pool design with low access latency. Second, it is possible to create machine learning models that can accurately predict how much local and pool memory to allocate to a virtual machine (VM) to resemble same-NUMA-node memory performance. Our evaluation with 158 workloads shows that Pond reduces DRAM costs by 7% with performance within 1-5% of same-NUMA-node VM allocations.
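The intuition behind pooling, that a shared pool only has to cover the peak of the combined demand rather than the sum of each socket's own peak, can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the function names, the idealized fully shared pool, and the `DEMAND_GB` values are hypothetical and are not taken from the paper's traces, so the resulting percentage does not correspond to the 7% reported above.

```python
from typing import List

# Hypothetical memory demand in GB for three sockets over four time steps.
# These numbers are invented for illustration only.
DEMAND_GB: List[List[int]] = [
    [40, 60, 30, 20],  # socket 0
    [20, 30, 60, 40],  # socket 1
    [50, 20, 30, 60],  # socket 2
]


def dram_without_pooling(demand: List[List[int]]) -> int:
    """DRAM needed when every socket is provisioned for its own peak."""
    return sum(max(series) for series in demand)


def dram_with_pooling(demand: List[List[int]]) -> int:
    """DRAM needed in an idealized case where all sockets share one pool.

    The pool must cover the largest combined demand at any single time step.
    """
    steps = len(demand[0])
    return max(sum(series[t] for series in demand) for t in range(steps))


if __name__ == "__main__":
    separate = dram_without_pooling(DEMAND_GB)
    pooled = dram_with_pooling(DEMAND_GB)
    saving = 100.0 * (separate - pooled) / separate
    print(f"per-socket provisioning: {separate} GB")
    print(f"idealized pooling:       {pooled} GB")
    print(f"saving:                  {saving:.1f}%")
```

The sketch ignores access latency and the split between local and pool memory per VM, which are the parts the abstract identifies as hard; it only shows why staggered peaks across sockets leave room for savings.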
