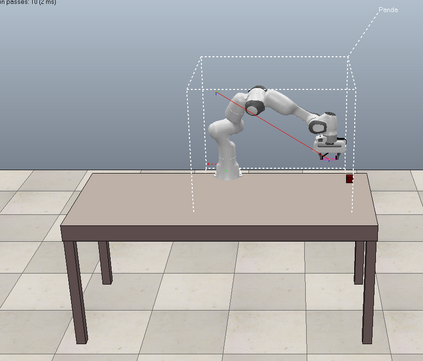

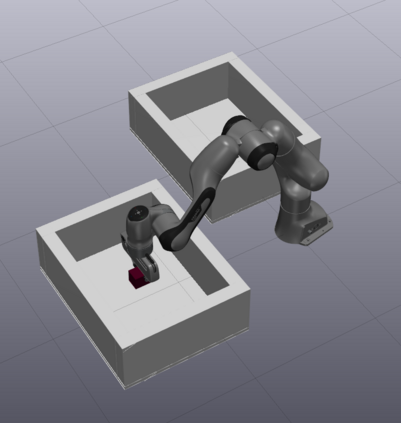

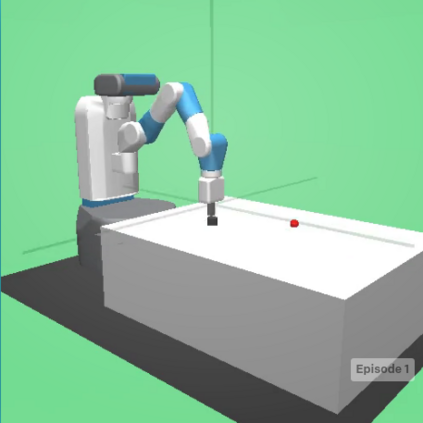

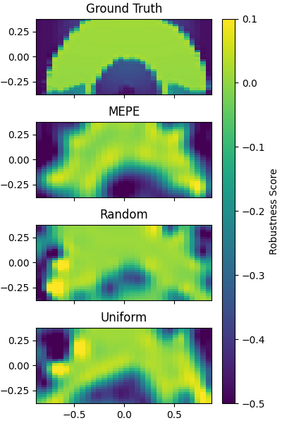

Many robot control scenarios involve assessing system robustness against a task specification. If either the controller or the environment is composed of "black-box" components with unknown dynamics, we cannot rely on formal verification to assess our system. Exhaustive testing is also often infeasible when the space of environments is large relative to the cost of each experiment. Given a limited budget, we provide a method to choose the experiment inputs that give the greatest insight into system performance against a given specification across the domain. By combining smooth robustness metrics for signal temporal logic (STL) with techniques from adaptive experiment design, our method chooses the most informative experimental inputs by incrementally constructing a surrogate model of the specification robustness. This model is then used to place the next experiment in a region where either the prediction error or the uncertainty is high. Our experiments show that this adaptive experiment design technique yields sample-efficient descriptions of system robustness. Further, we show how the model built during the experiment design process can be used to assess the behaviour of a data-driven control system under domain shift.
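The adaptive loop described above can be illustrated with a minimal Python sketch. Several pieces are assumptions, not details from the abstract. The environment is a single hypothetical parameter `theta` in [0, 2], and `simulate` is a made-up stand-in for the black-box system. The specification is the STL formula G (x < 1). Smooth robustness uses the log-sum-exp soft-min, which is one common smooth approximation of the min in STL robustness and not necessarily the metric the authors use. The surrogate is a plain Gaussian process with a squared-exponential kernel. For simplicity, the acquisition rule picks the candidate with the largest posterior standard deviation, so it ignores the prediction-error term that the method also targets.

```python
import numpy as np


def smooth_min(values, k=20.0):
    """Log-sum-exp soft-min: a smooth lower bound on min(values).

    Satisfies min(v) - log(len(v)) / k <= smooth_min(v) <= min(v).
    """
    v = np.asarray(values, dtype=float)
    m = v.min()  # shift by the minimum for numerical stability
    return m - np.log(np.sum(np.exp(-k * (v - m)))) / k


def robustness_always_below(trace, threshold=1.0, k=20.0):
    """Smooth robustness of the STL formula G (x < threshold)."""
    return smooth_min(threshold - np.asarray(trace, dtype=float), k)


def simulate(theta, steps=50):
    """HYPOTHETICAL black-box system: a damped oscillation whose amplitude
    and offset grow with the environment parameter theta."""
    t = np.arange(steps)
    return theta * np.sin(0.5 * t) * np.exp(-0.05 * t) + 0.2 * theta**2


def rbf(a, b, length=0.3, var=1.0):
    """Squared-exponential kernel between 1-D input arrays a and b."""
    d = a[:, None] - b[None, :]
    return var * np.exp(-0.5 * (d / length) ** 2)


def gp_posterior(x_train, y_train, x_query, noise=1e-4):
    """GP posterior mean and standard deviation at x_query.

    Uses the sample mean of y_train as a constant prior mean.
    """
    y_mean = y_train.mean()
    K = rbf(x_train, x_train) + noise * np.eye(len(x_train))
    Ks = rbf(x_query, x_train)
    L = np.linalg.cholesky(K)
    # alpha = K^{-1} (y - y_mean) via two triangular-shaped solves
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train - y_mean))
    mean = y_mean + Ks @ alpha
    v = np.linalg.solve(L, Ks.T)
    var = 1.0 - np.sum(v**2, axis=0)  # prior variance is 1.0 (rbf var)
    return mean, np.sqrt(np.clip(var, 0.0, None))


def adaptive_design(budget=10, seed=0):
    """Choose experiments one at a time where the surrogate is least certain."""
    rng = np.random.default_rng(seed)
    candidates = np.linspace(0.0, 2.0, 201)
    # Two random initial experiments to seed the surrogate
    xs = list(rng.choice(candidates, size=2, replace=False))
    ys = [robustness_always_below(simulate(x)) for x in xs]
    for _ in range(budget - len(xs)):
        _, std = gp_posterior(np.array(xs), np.array(ys), candidates)
        nxt = candidates[np.argmax(std)]  # uncertainty-only acquisition
        xs.append(nxt)
        ys.append(robustness_always_below(simulate(nxt)))
    return np.array(xs), np.array(ys)


if __name__ == "__main__":
    xs, ys = adaptive_design(budget=10)
    for x, y in sorted(zip(xs, ys)):
        print(f"theta={x:.2f}  robustness={y:+.3f}")
```

Once the loop finishes, the fitted surrogate (`gp_posterior` on the collected `xs`, `ys`) gives a cheap estimate of robustness across the whole `theta` range. Negative predicted values mark environments where the specification is likely to be violated. A larger `k` makes the soft-min tighter to the true minimum but less smooth.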

翻译:许多机器人控制设想方案涉及根据任务规格评估系统的稳健性。 如果控制器或环境由“黑盒”组件组成,且动态未知, 我们不能依靠正式的核查来评估我们的系统。 如果环境空间与实验成本相比大,则通过详尽的测试来评估稳健性也往往不可行。 鉴于预算有限, 我们提供一种方法来选择实验投入, 以便根据整个域的特定规格对系统性能产生最大的洞察力。 通过将信号信号时间逻辑的顺畅稳健性指标与适应性实验设计的技术结合起来, 我们的方法选择了最丰富的实验投入, 方法是逐步构建一个说明规格稳健性的替代模型。 这个模型然后选择下一个实验, 在一个要么预测错误高, 或者是不确定性大的领域进行。 我们的实验展示了这种适应性实验设计技术如何导致对系统稳健性进行抽样高效描述。 此外, 我们展示了如何使用通过实验设计过程构建的模型来评估在域变换中的数据驱动控制系统的行为。