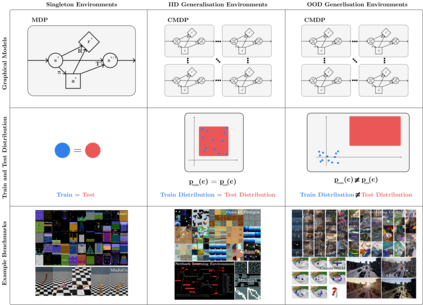

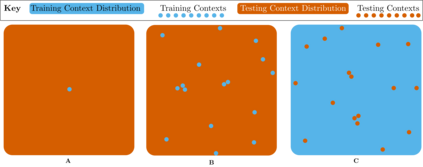

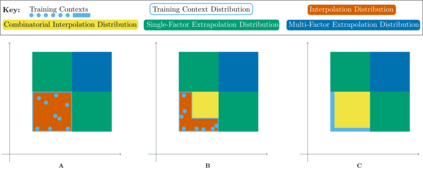

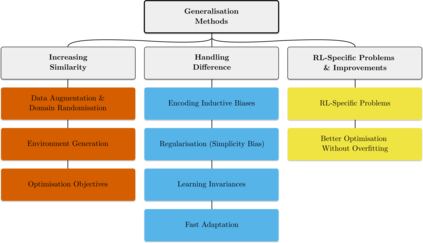

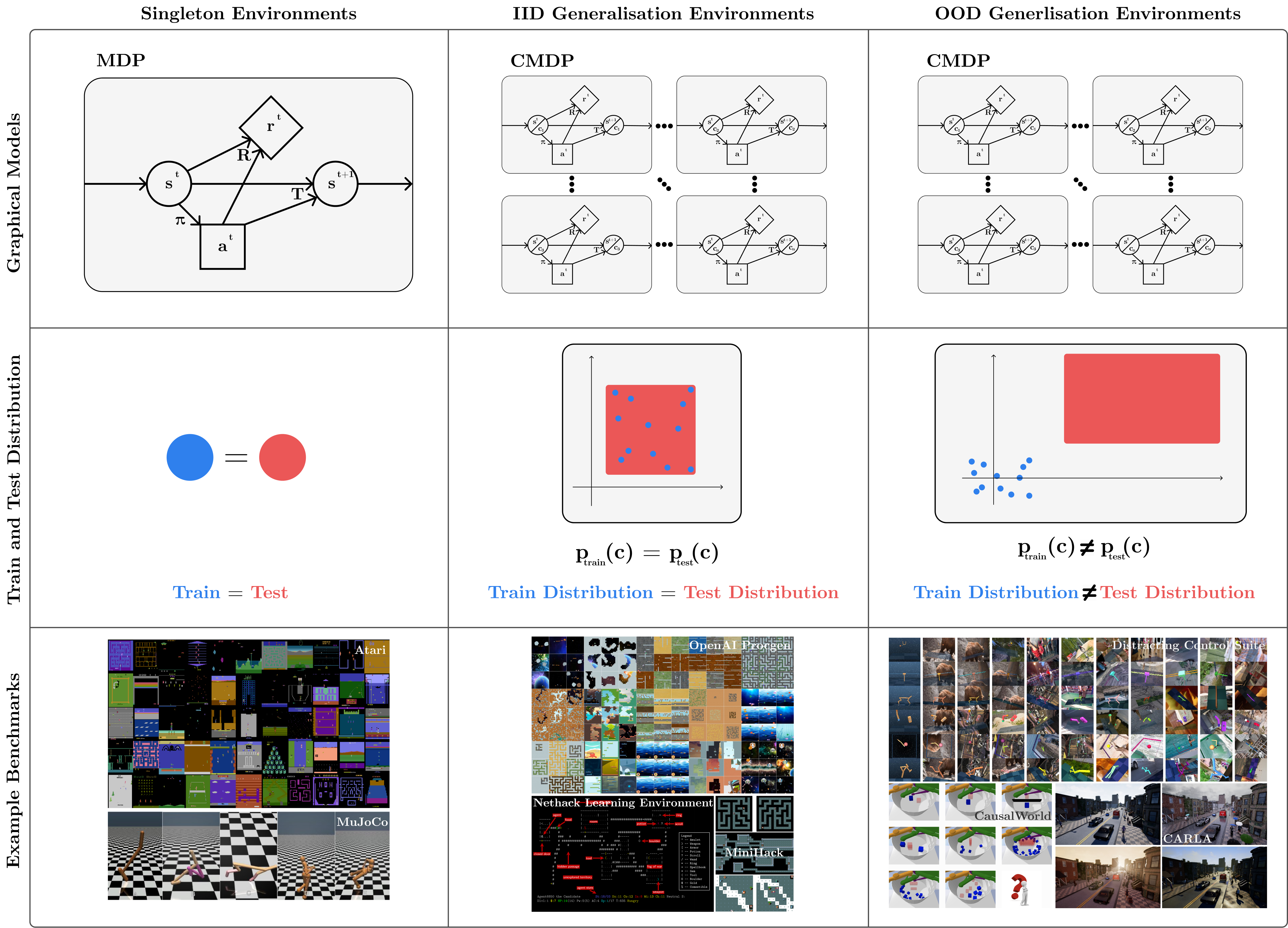

The study of generalisation in deep Reinforcement Learning (RL) aims to produce RL algorithms whose policies generalise well to novel, unseen situations at deployment time, avoiding overfitting to their training environments. Tackling this is vital if we are to deploy reinforcement learning algorithms in real-world scenarios, where the environment will be diverse, dynamic and unpredictable. This survey is an overview of this nascent field. We provide a unifying formalism and terminology for discussing different generalisation problems, building upon previous works. We go on to categorise existing benchmarks for generalisation, as well as current methods for tackling the generalisation problem. Finally, we provide a critical discussion of the current state of the field, including recommendations for future work. Among other conclusions, we argue that taking a purely procedural content generation approach to benchmark design is not conducive to progress in generalisation; we suggest fast online adaptation and tackling RL-specific problems as some areas for future work on methods for generalisation; and we recommend building benchmarks in underexplored problem settings such as offline RL generalisation and reward-function variation.
