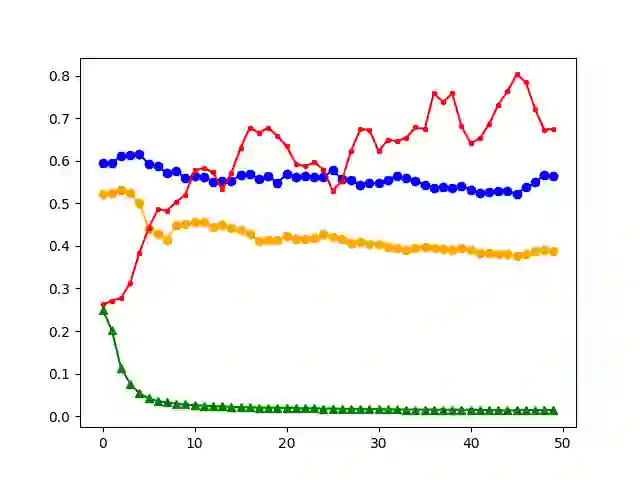

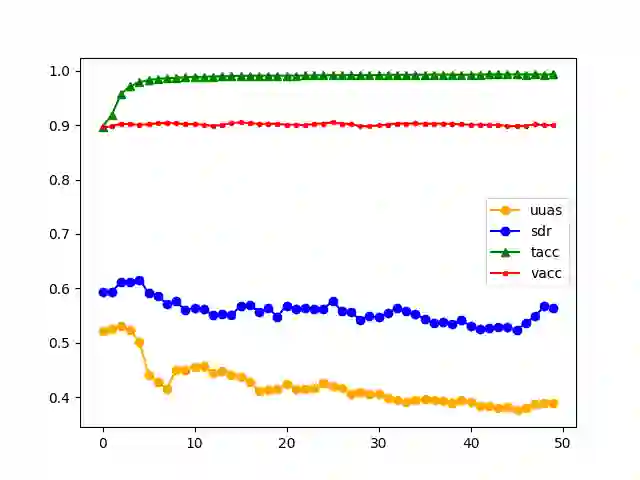

Large language models (LLMs) are reported to perform strongly on natural language processing tasks. However, performance metrics such as accuracy do not measure how robustly a model represents complex linguistic structure. In this work, we propose a framework for evaluating the robustness of linguistic representations using probing tasks. We leverage recent advances in extracting emergent linguistic constructs from LLMs and apply syntax-preserving perturbations to test the stability of these constructs, in order to better understand the representations learned by LLMs. Empirically, we study the performance of four LLMs across six different corpora on the proposed robustness measures. We provide evidence that context-free representations (e.g., GloVe) are in some cases competitive with context-dependent representations from modern LLMs (e.g., BERT), yet equally brittle to syntax-preserving manipulations. Because emergent syntactic representations in neural networks are brittle, our work draws attention to the risk of comparing such structures to those that are the object of a long-standing debate in linguistics.
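To make the idea of testing stability under syntax-preserving perturbations concrete, the following is a minimal sketch and not the method evaluated in this work. It assumes a toy part-of-speech lexicon (`LEXICON`), a hash-based stand-in for a context-free embedding (`embed`), and a simple instability score based on pairwise word distances (`instability`); all of these names and choices are hypothetical and chosen only for illustration.

```python
import hashlib
import math
import random

# Hypothetical toy lexicon: words grouped by part-of-speech tag.
LEXICON = {
    "NOUN": ["dog", "cat", "teacher", "river"],
    "VERB": ["chased", "watched", "found", "painted"],
    "DET": ["the", "a"],
}


def embed(word, dim=8):
    """Deterministic stand-in for a context-free embedding (assumption:
    a real experiment would look up GloVe or similar vectors instead)."""
    digest = hashlib.sha256(word.encode("utf-8")).digest()
    return [b / 255.0 - 0.5 for b in digest[:dim]]


def distance_matrix(words):
    """Pairwise Euclidean distances between word vectors."""
    vecs = [embed(w) for w in words]
    return [[math.dist(u, v) for v in vecs] for u in vecs]


def perturb(tagged, rng):
    """Swap one word for a different word with the same tag, so the
    tag sequence of the sentence is unchanged."""
    candidates = [
        i for i, (_, tag) in enumerate(tagged) if len(LEXICON.get(tag, [])) > 1
    ]
    i = rng.choice(candidates)
    word, tag = tagged[i]
    replacement = rng.choice([w for w in LEXICON[tag] if w != word])
    return tagged[:i] + [(replacement, tag)] + tagged[i + 1:]


def instability(tagged_a, tagged_b):
    """Mean absolute change in pairwise distances between two sentences
    of equal length; 0.0 means the distance structure is unchanged."""
    da = distance_matrix([w for w, _ in tagged_a])
    db = distance_matrix([w for w, _ in tagged_b])
    n = len(da)
    diffs = [abs(da[i][j] - db[i][j]) for i in range(n) for j in range(i + 1, n)]
    return sum(diffs) / len(diffs)


sentence = [("the", "DET"), ("dog", "NOUN"), ("chased", "VERB"),
            ("a", "DET"), ("cat", "NOUN")]
perturbed = perturb(sentence, random.Random(0))
score = instability(sentence, perturbed)
```

A higher `score` indicates that the distance structure over words shifted more even though the tag sequence stayed the same. With contextual models, the same comparison would be run on hidden states rather than on static vectors.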
