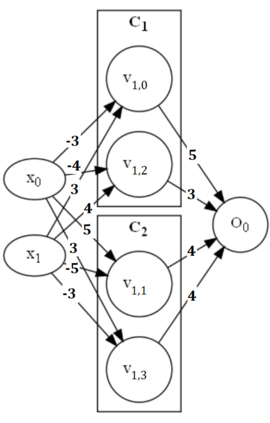

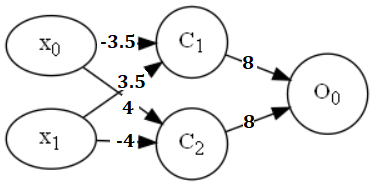

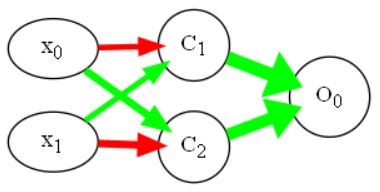

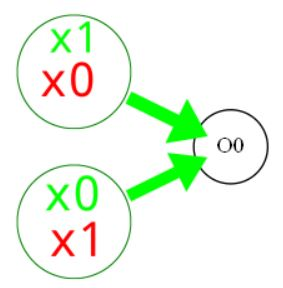

Neural networks (NNs) have various applications in AI, but explaining their decision process remains challenging. Existing approaches often focus on explaining how changing individual inputs affects NNs' outputs. However, an explanation that is consistent with the input-output behaviour of an NN is not necessarily faithful to the actual mechanics thereof. In this paper, we exploit relationships between multi-layer perceptrons (MLPs) and quantitative argumentation frameworks (QAFs) to create argumentative explanations for the mechanics of MLPs. Our SpArX method first sparsifies the MLP while maintaining as much of the original mechanics as possible. It then translates the sparse MLP into an equivalent QAF to shed light on the underlying decision process of the MLP, producing global and/or local explanations. We demonstrate experimentally that SpArX can give more faithful explanations than existing approaches, while simultaneously providing deeper insights into the actual reasoning process of MLPs.
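The translation step can be illustrated with a toy sketch. It is not the SpArX implementation: it assumes, as one common reading of MLP-to-QAF correspondences, that a positive weight becomes a support edge and a negative weight becomes an attack edge, and it omits both the sparsification step and any strength computation. The function name `mlp_to_edges`, the neuron naming scheme, and the toy weights are hypothetical and chosen only for illustration.

```python
from typing import List, Tuple

# (source neuron, target neuron, relation, weight); a hypothetical edge format
Edge = Tuple[str, str, str, float]


def mlp_to_edges(weights: List[List[List[float]]]) -> List[Edge]:
    """Read signed MLP weights as argumentation-style edges.

    weights[l][i][j] is the weight from neuron i in layer l to neuron j
    in layer l + 1. Assumption: positive weights are read as "support",
    negative weights as "attack", and zero weights yield no edge.
    """
    edges: List[Edge] = []
    for layer, matrix in enumerate(weights):
        for i, row in enumerate(matrix):
            for j, w in enumerate(row):
                if w == 0:
                    continue  # no connection, so no edge
                relation = "support" if w > 0 else "attack"
                edges.append((f"n{layer}_{i}", f"n{layer + 1}_{j}", relation, w))
    return edges


# Toy MLP: 2 inputs -> 2 hidden neurons -> 1 output (made-up weights)
toy_weights = [
    [[0.8, -0.5], [0.0, 1.2]],
    [[-1.0], [0.7]],
]

for edge in mlp_to_edges(toy_weights):
    print(edge)
```

In this reading, a sparser MLP yields a graph with fewer arguments and edges, which is why sparsifying before translating would make the resulting explanation easier to inspect.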
