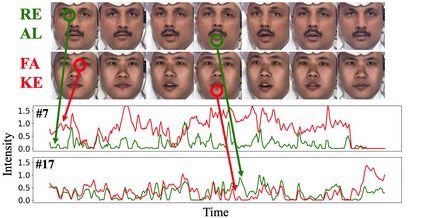

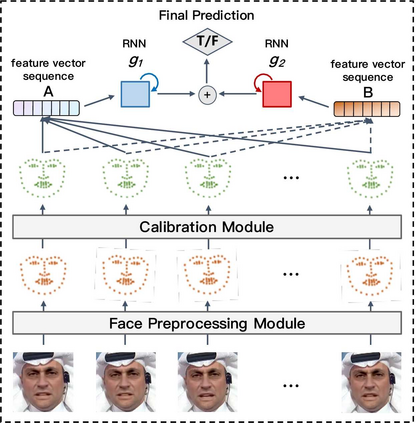

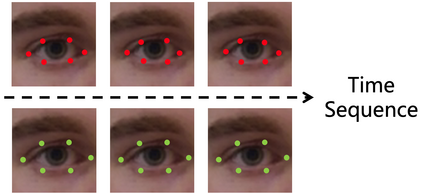

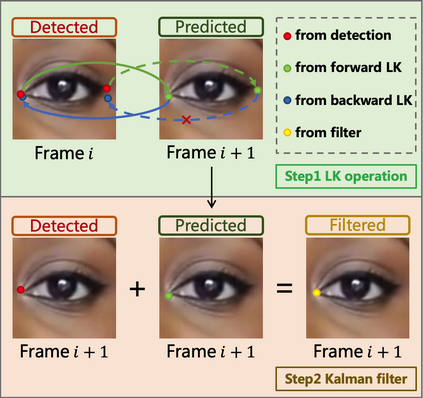

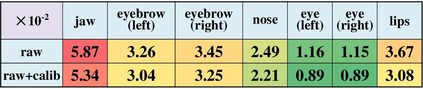

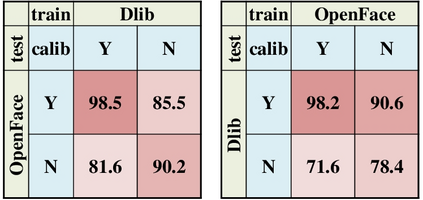

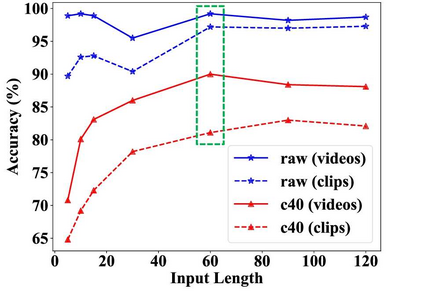

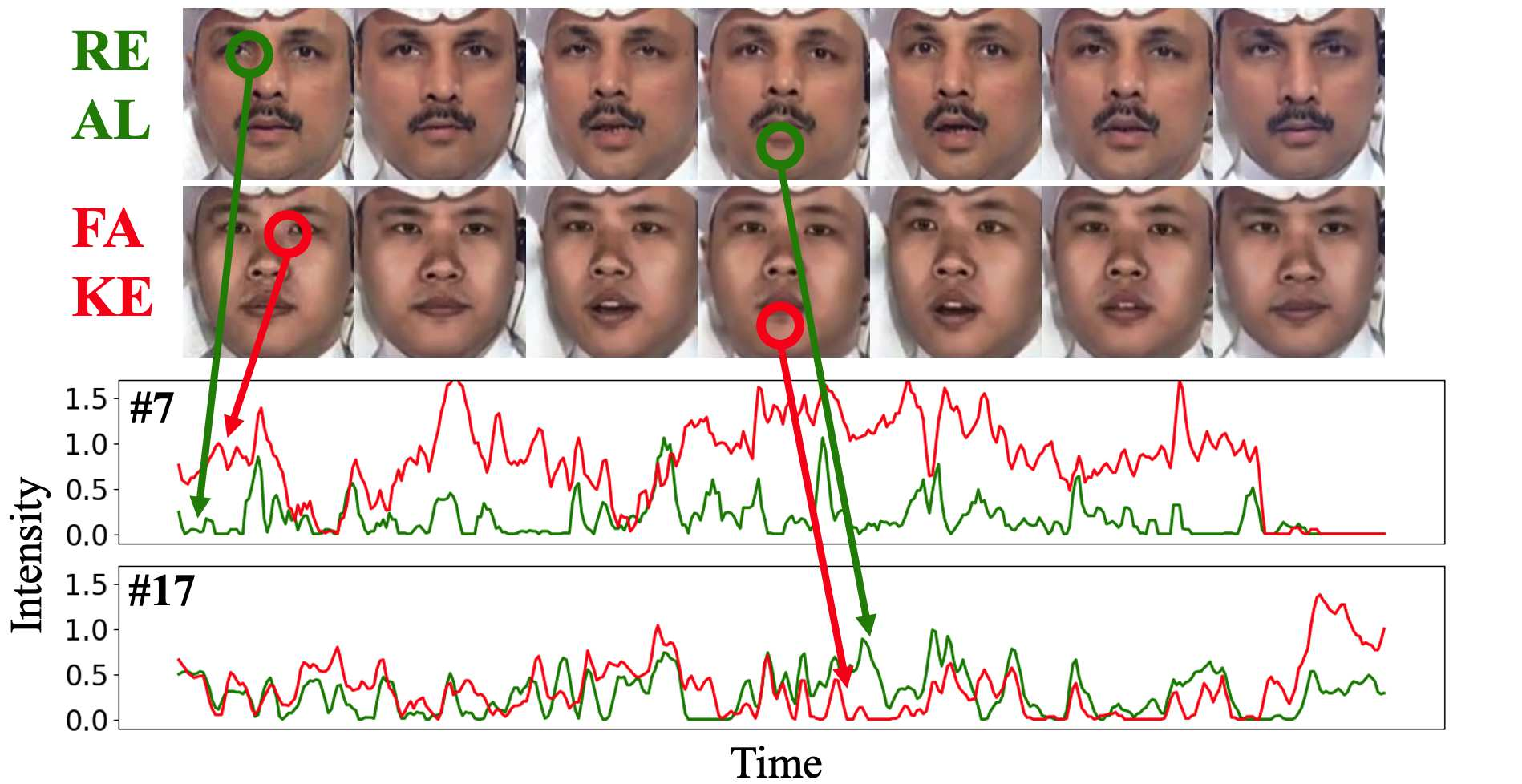

Deepfakes are a family of malicious techniques that transplant a target face onto the original face in a video, causing serious problems such as copyright infringement, misinformation, and even public panic. Previous work on Deepfakes video detection has focused mainly on appearance features, which sophisticated manipulation can bypass and which lead to high model complexity and sensitivity to noise. Moreover, how to mine and exploit the temporal features of manipulated videos remains an open question. We propose LRNet, an efficient and robust framework that detects Deepfakes videos through temporal modeling of precise geometric features. A novel calibration module improves the precision of the geometric features, making them more discriminative, and a two-stream Recurrent Neural Network (RNN) is constructed to fully exploit temporal features. Compared with previous methods, ours is more lightweight and easier to train. It is also robust when detecting highly compressed or noise-corrupted videos. Our model achieves 0.999 AUC on the FaceForensics++ dataset, and its performance declines gracefully (-0.042 AUC) on highly compressed videos.
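The abstract does not say what the two RNN streams consume, so the following is only an illustrative sketch of one plausible reading, not the authors' implementation. It assumes that the geometric features are per-frame facial landmark coordinates, that one stream sees the coordinates themselves, and that the other sees frame-to-frame displacements. The function name `two_stream_inputs` and the toy clip are hypothetical.

```python
from typing import List, Tuple

# One frame = flattened (x, y) landmark coordinates (an assumption, see above).
Frame = List[float]


def two_stream_inputs(frames: List[Frame]) -> Tuple[List[Frame], List[Frame]]:
    """Split a landmark sequence into a position stream and a motion stream."""
    if len(frames) < 2:
        raise ValueError("need at least two frames to compute differences")
    width = len(frames[0])
    if any(len(f) != width for f in frames):
        raise ValueError("all frames must have the same number of coordinates")

    # Stream 1: raw coordinates. The first frame is dropped so that both
    # streams have the same number of time steps.
    positions = [list(f) for f in frames[1:]]

    # Stream 2: displacement of every coordinate between consecutive frames.
    motion = [
        [cur - prev for prev, cur in zip(prev_frame, cur_frame)]
        for prev_frame, cur_frame in zip(frames, frames[1:])
    ]
    return positions, motion


# Toy clip: 3 frames, 2 landmarks each (x1, y1, x2, y2).
clip = [
    [0.0, 0.0, 1.0, 1.0],
    [0.5, 0.0, 1.0, 1.5],
    [1.0, 0.0, 1.0, 2.0],
]
positions, motion = two_stream_inputs(clip)
```

Under these assumptions, each stream would be fed to its own recurrent network and the two outputs fused into a single real/fake score. Working from a few dozen coordinates per frame rather than full images is consistent with the abstract's claim that the model is lightweight.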
