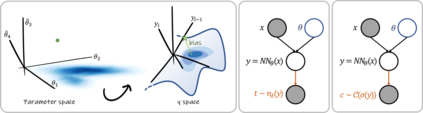

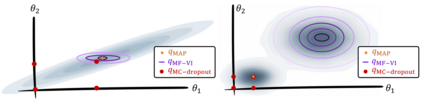

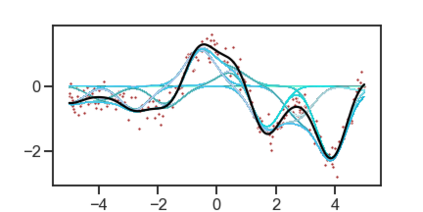

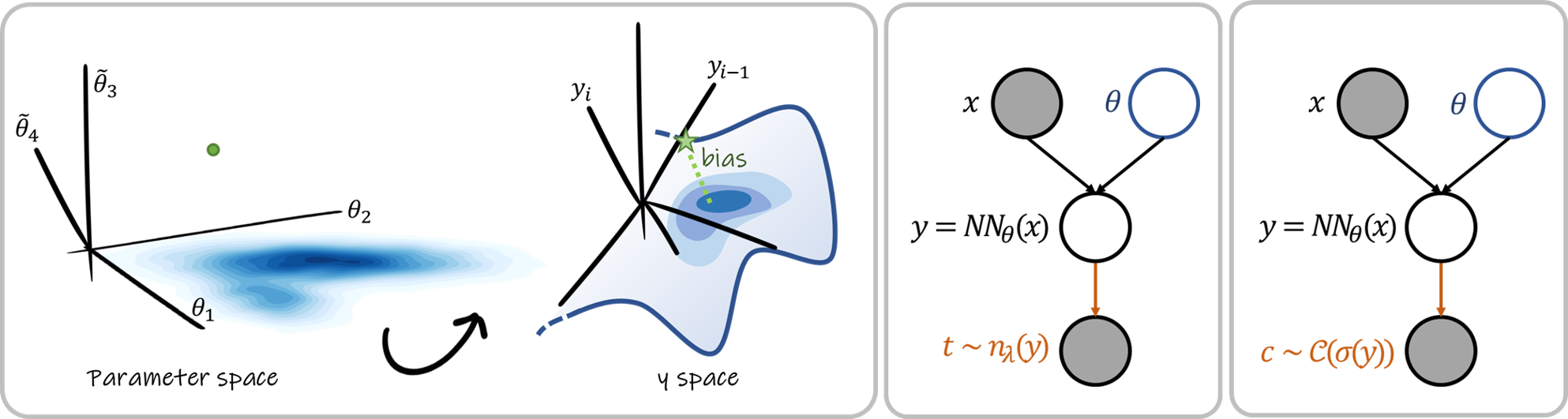

MC Dropout is a mainstream "free lunch" method in medical imaging for approximate Bayesian computations (ABC). Its appeal rests on three claims. It solves, out of the box, the daunting task of ABC and uncertainty quantification in Neural Networks (NNs). It falls within the variational inference (VI) framework. It yields a highly multimodal, faithful predictive posterior. We question these properties of MC Dropout for approximate inference. MC Dropout in fact changes the Bayesian model. Its predictive posterior assigns $0$ probability to the true model on closed-form benchmarks. The multimodality of its predictive posterior is not a property of the true predictive posterior but a design artefact. To address the need for VI on arbitrary models, we share a generic VI engine within the PyTorch framework. The code includes a carefully designed implementation of structured (diagonal plus low-rank) multivariate normal variational families, and mixtures thereof. It is intended as a go-to, no-free-lunch approach that addresses shortcomings of mean-field VI with an adjustable trade-off between expressivity and computational complexity.
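
The structured (diagonal plus low-rank) normal family can be made concrete with a small sketch. It uses NumPy rather than PyTorch to stay dependency-light. The sketch shows a Gaussian with covariance $\mathrm{diag}(d) + WW^\top$, where $W$ has $k$ columns. It covers reparameterised sampling, plus a log-density and entropy computed via the Woodbury identity and the matrix determinant lemma. Cost therefore grows as $O(nk^2 + k^3)$ rather than $O(n^3)$. The class `LowRankGaussian` and its methods are hypothetical illustrations, not the API of the authors' engine. PyTorch's own `torch.distributions.LowRankMultivariateNormal` uses the same covariance parameterisation.

```python
import numpy as np


class LowRankGaussian:
    """q(z) = N(mu, diag(d) + W W^T), with d > 0 and W of shape (n, k).

    Hypothetical helper for illustration; not the authors' VI engine API.
    """

    def __init__(self, mu, log_d, W):
        self.mu = np.asarray(mu, dtype=float)             # mean, shape (n,)
        self.d = np.exp(np.asarray(log_d, dtype=float))   # diagonal variances, shape (n,)
        self.W = np.asarray(W, dtype=float)               # low-rank factor, shape (n, k)

    def sample(self, num, rng):
        # Reparameterisation: z = mu + W eps1 + sqrt(d) * eps2
        # has covariance W W^T + diag(d), and is differentiable in (mu, d, W).
        n, k = self.W.shape
        eps1 = rng.standard_normal((num, k))
        eps2 = rng.standard_normal((num, n))
        return self.mu + eps1 @ self.W.T + eps2 * np.sqrt(self.d)

    def _capacitance(self):
        # C = I_k + W^T diag(d)^{-1} W: only a small k x k matrix.
        k = self.W.shape[1]
        return np.eye(k) + self.W.T @ (self.W / self.d[:, None])

    def _logdet(self):
        # Matrix determinant lemma: log|Sigma| = log|C| + sum(log d).
        _, logdet_c = np.linalg.slogdet(self._capacitance())
        return logdet_c + np.sum(np.log(self.d))

    def log_prob(self, z):
        # Woodbury: Sigma^{-1} = D^{-1} - D^{-1} W C^{-1} W^T D^{-1}.
        # Returns one log-density per row of z (a single vector gives shape (1,)).
        n = self.mu.shape[0]
        r = np.atleast_2d(z) - self.mu                # residuals, shape (m, n)
        r_scaled = r / self.d                         # D^{-1} r, row-wise
        u = r_scaled @ self.W                         # W^T D^{-1} r, shape (m, k)
        c_inv_u = np.linalg.solve(self._capacitance(), u.T).T
        maha = np.sum(r * r_scaled, axis=1) - np.sum(u * c_inv_u, axis=1)
        return -0.5 * (n * np.log(2 * np.pi) + self._logdet() + maha)

    def entropy(self):
        # Closed-form Gaussian entropy: 0.5 * log|2 pi e Sigma|.
        n = self.mu.shape[0]
        return 0.5 * (n * (1.0 + np.log(2 * np.pi)) + self._logdet())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, k = 1000, 5
    q = LowRankGaussian(np.zeros(n), np.zeros(n), 0.1 * rng.standard_normal((n, k)))
    z = q.sample(4, rng)
    print(q.log_prob(z).shape)  # (4,)
```

The rank $k$ plays the role of the adjustable trade-off described above. With $k = 0$ the family reduces to mean-field (diagonal) VI. With $k = n$ it can represent any positive-definite covariance, but at dense cost. In a VI loop, one could maximise a Monte Carlo estimate of the ELBO, $\mathbb{E}_q[\log p(x, z)] + \mathrm{H}[q]$, using `sample` for the expectation and `entropy` for the closed-form term. Gradients would come from an autodiff framework such as PyTorch.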
