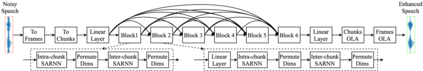

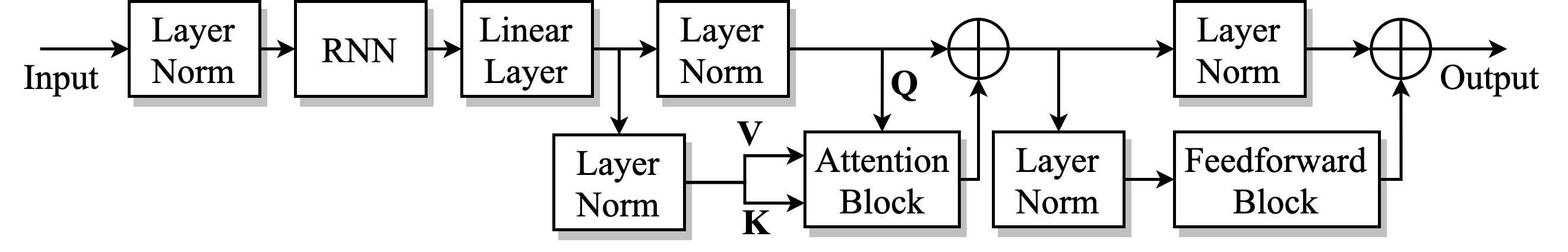

We propose a dual-path self-attention recurrent neural network (DP-SARNN) for time-domain speech enhancement. We improve the dual-path RNN (DP-RNN) by augmenting its inter-chunk and intra-chunk RNNs with a recently proposed efficient attention mechanism. Combining inter-chunk and intra-chunk attention makes attention more effective over long sequences of speech frames. DP-SARNN outperforms a baseline DP-RNN while using a frame shift four times larger than that of DP-RNN, which substantially reduces computation time per utterance. This efficiency lets us develop a real-time DP-SARNN by using long short-term memory (LSTM) RNNs and causal attention in the inter-chunk SARNN. DP-SARNN significantly outperforms existing approaches to speech enhancement and takes, on average, 7.9 ms of CPU time to process a signal chunk of 32 ms.
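The dual-path idea can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the chunk size, and the hop below are hypothetical choices made for illustration, and the attention and RNN layers themselves are omitted. The sketch only shows how a frame sequence is split into overlapping chunks, how the intra-chunk and inter-chunk views of those chunks differ, and how the reported timing translates into a real-time factor.

```python
from typing import List


def split_into_chunks(frames: List[float], chunk_size: int, hop: int) -> List[List[float]]:
    """Split a frame sequence into overlapping chunks, zero-padding the last one."""
    if chunk_size <= 0 or hop <= 0:
        raise ValueError("chunk_size and hop must be positive")
    chunks = []
    start = 0
    while True:
        chunk = frames[start:start + chunk_size]
        # Pad the final chunk so that every chunk has the same length.
        chunk = chunk + [0.0] * (chunk_size - len(chunk))
        chunks.append(chunk)
        if start + chunk_size >= len(frames):
            break
        start += hop
    return chunks


def intra_chunk_view(chunks: List[List[float]]) -> List[List[float]]:
    """Each sequence runs over the frames inside one chunk (local context)."""
    return [list(chunk) for chunk in chunks]


def inter_chunk_view(chunks: List[List[float]]) -> List[List[float]]:
    """Each sequence runs over the same position across all chunks (global context)."""
    return [list(column) for column in zip(*chunks)]


def real_time_factor(processing_ms: float, chunk_ms: float) -> float:
    """Ratio of processing time to signal duration; below 1.0 means faster than real time."""
    return processing_ms / chunk_ms


# Illustrative values only: 10 frames, chunks of 4 frames with a hop of 2.
frames = [float(i) for i in range(10)]
chunks = split_into_chunks(frames, chunk_size=4, hop=2)
intra = intra_chunk_view(chunks)
inter = inter_chunk_view(chunks)
rtf = real_time_factor(7.9, 32.0)  # timing figures taken from the abstract
```

In a dual-path model, one sequence model (here, an RNN with attention) would process each row of `intra`, and a second would process each row of `inter`, so that every frame can be reached through a short local path and a short global path. With the figures reported in the abstract, `rtf` comes out to about 0.25, which is why the model qualifies as real-time on CPU.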
