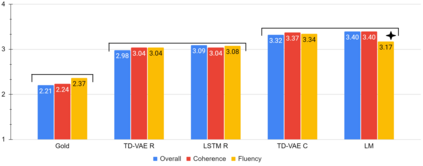

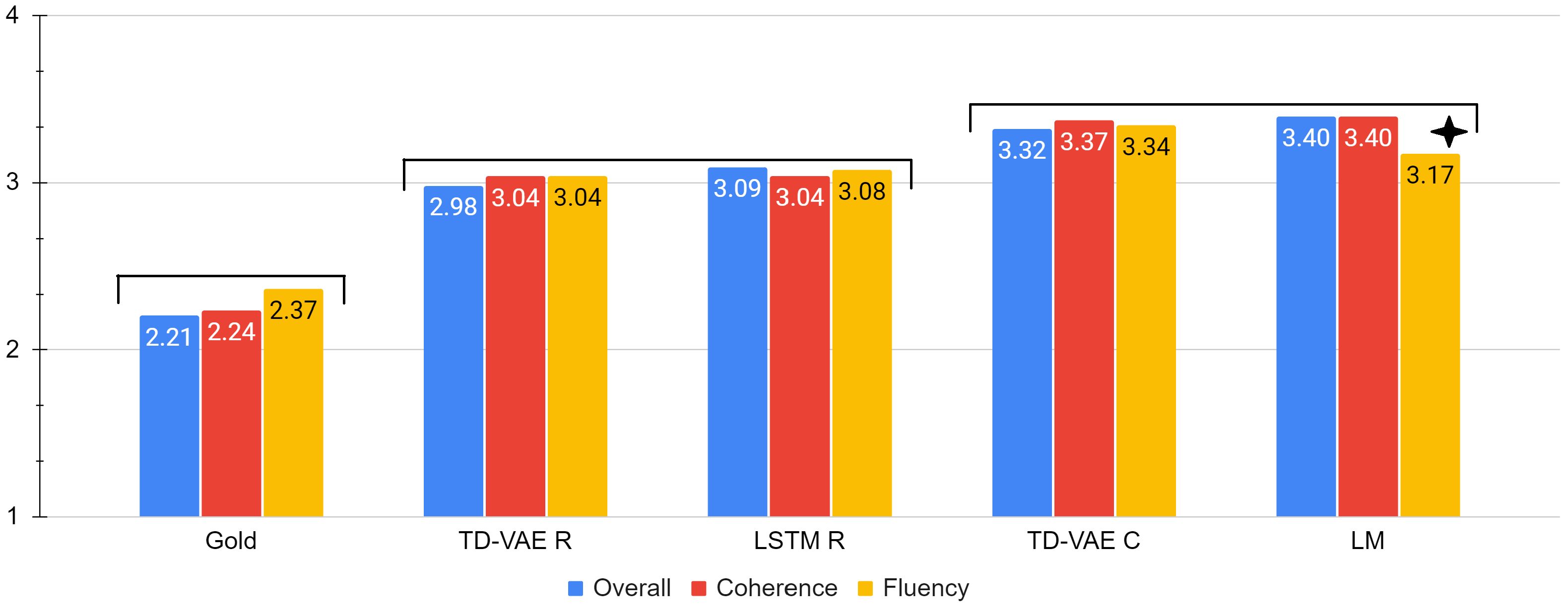

Recent language models can generate interesting and grammatically correct text in story generation but often lack plot development and long-term coherence. This paper experiments with a latent vector planning approach based on a TD-VAE (Temporal Difference Variational Autoencoder), using the model for conditioning and reranking in text generation. The approach performs strongly in automatic cloze and swapping evaluations. Human judgments show that stories generated with TD-VAE reranking improve on a GPT-2 medium baseline and perform comparably to a hierarchical LSTM reranking model. Conditioning on the latent vectors proves disappointing and degrades performance in human evaluation because it reduces the diversity of generation, and the models do not learn to progress the narrative. This highlights an important difference between technical task performance (e.g., cloze) and generating interesting stories.
