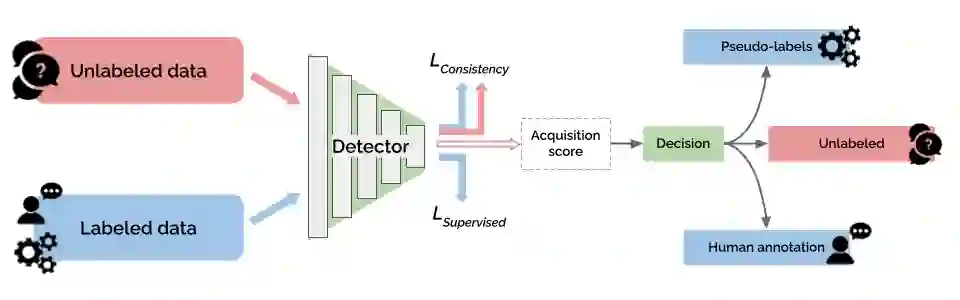

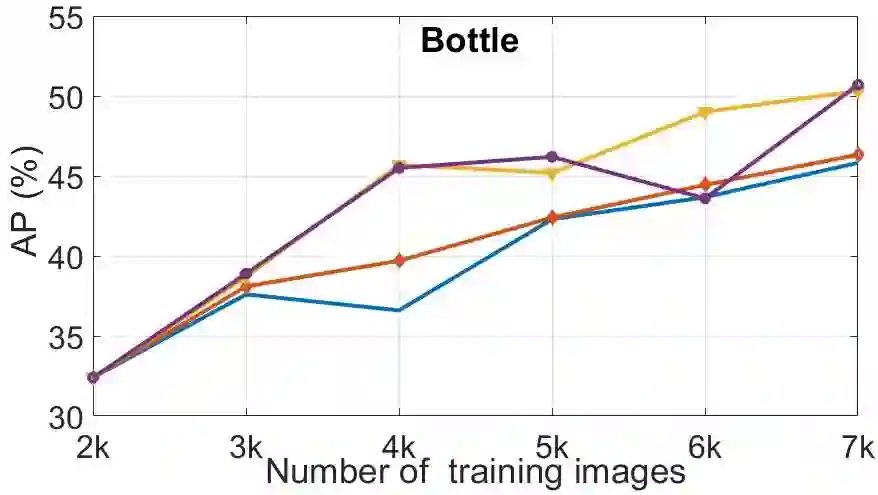

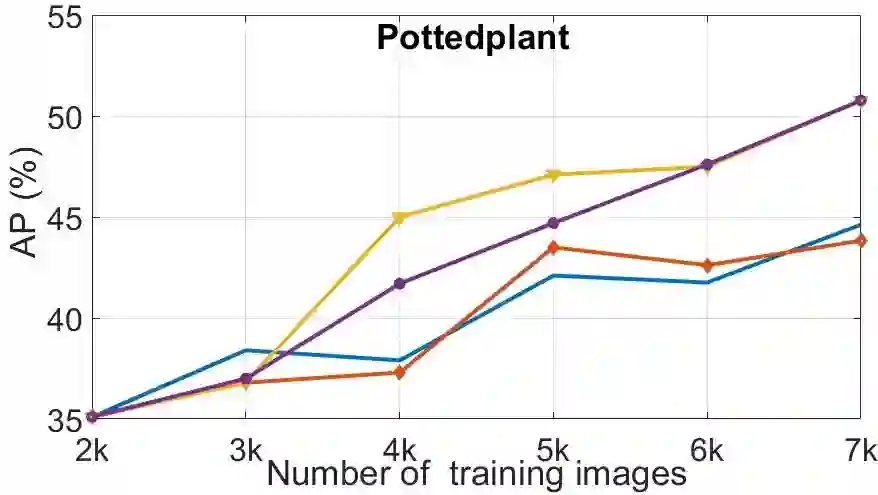

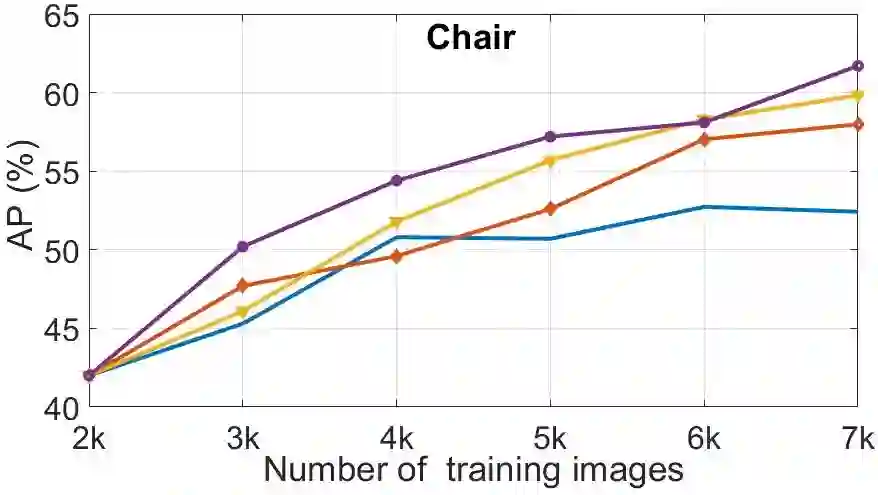

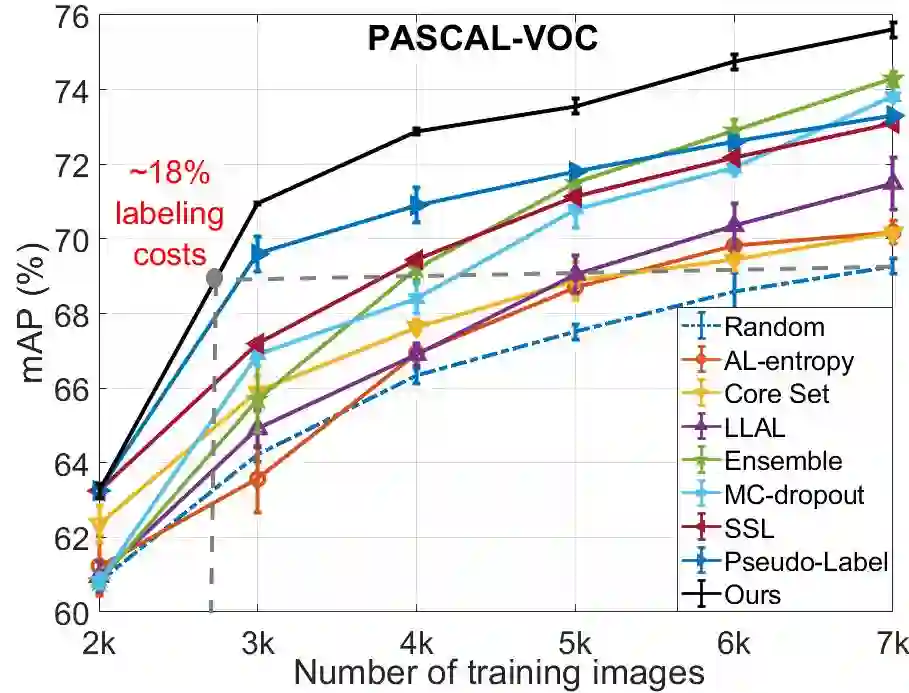

Deep neural networks have reached very high accuracy on object detection, but their success hinges on large amounts of labeled data. To reduce this dependency on labels, various active-learning strategies have been proposed, typically based on the confidence of the detector. However, these methods are biased toward the best-performing classes and can produce acquired datasets that poorly represent the data in the test set. In this work, we propose a unified framework for active learning that considers both the uncertainty and the robustness of the detector, ensuring that the network performs accurately across all classes. Furthermore, our method pseudo-labels highly confident predictions, suppressing a potential distribution drift while further boosting the performance of the model. Experiments show that our method comprehensively outperforms a wide range of active-learning methods on PASCAL VOC07+12 and MS-COCO, achieving up to a 7.7% relative improvement or up to an 82% reduction in labeling cost.
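The abstract does not specify how uncertainty, robustness, and pseudo-labeling are combined, so the following is only a minimal, hypothetical sketch of how the three ideas could fit into one acquisition round, not the authors' method. It assumes: entropy of each detection's class distribution as the uncertainty signal; L1 disagreement between matched detections on the original and a horizontally flipped image as the (lack of) robustness signal; an additive weight `weight`; and a pseudo-labeling threshold `threshold` of 0.99. All names (`ImagePredictions`, `acquisition_score`, `select_and_pseudo_label`) are illustrative, and detection matching across the flip is assumed to happen elsewhere.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple


@dataclass
class ImagePredictions:
    """Hypothetical container for one unlabeled image.

    probs[i] is the class-probability vector of detection i on the original
    image; flipped_probs[i] is assumed to be the matched detection on the
    horizontally flipped image (matching is done elsewhere).
    """
    image_id: str
    probs: List[List[float]]
    flipped_probs: List[List[float]]


def entropy(p: Sequence[float], eps: float = 1e-12) -> float:
    # Shannon entropy (nats) of one class distribution: high means uncertain.
    return -sum(q * math.log(q + eps) for q in p)


def inconsistency(p: Sequence[float], r: Sequence[float]) -> float:
    # L1 distance between matched predictions: high means not robust to flipping.
    return sum(abs(a - b) for a, b in zip(p, r))


def acquisition_score(img: ImagePredictions, weight: float = 1.0) -> float:
    # Image-level score: worst-case uncertainty plus weighted worst-case inconsistency.
    unc = max((entropy(p) for p in img.probs), default=0.0)
    rob = max(
        (inconsistency(p, r) for p, r in zip(img.probs, img.flipped_probs)),
        default=0.0,
    )
    return unc + weight * rob


def select_and_pseudo_label(
    pool: List[ImagePredictions],
    budget: int,
    threshold: float = 0.99,
    weight: float = 1.0,
) -> Tuple[List[str], Dict[str, List[int]]]:
    # Rank the pool by score; the top `budget` images go to human annotators.
    ranked = sorted(pool, key=lambda img: acquisition_score(img, weight), reverse=True)
    to_annotate = [img.image_id for img in ranked[:budget]]

    # Pseudo-label a remaining image only if every detection clears the
    # threshold, so no low-confidence detection has to be dropped from it.
    pseudo: Dict[str, List[int]] = {}
    for img in ranked[budget:]:
        if img.probs and all(max(p) >= threshold for p in img.probs):
            # Store the argmax class index of each detection as its pseudo-label.
            pseudo[img.image_id] = [max(range(len(p)), key=p.__getitem__) for p in img.probs]
    return to_annotate, pseudo
```

A toy usage example with a single-detection, two-class pool:

```python
pool = [
    ImagePredictions("a", [[0.5, 0.5]], [[0.5, 0.5]]),
    ImagePredictions("b", [[0.995, 0.005]], [[0.995, 0.005]]),
    ImagePredictions("c", [[0.9, 0.1]], [[0.2, 0.8]]),
]
to_annotate, pseudo = select_and_pseudo_label(pool, budget=1)
# to_annotate == ["c"]; pseudo == {"b": [0]}
```

Here "c" is only moderately uncertain, but its prediction changes under flipping, so the robustness term ranks it above the merely uncertain "a"; "b" is confident enough to be pseudo-labeled rather than sent for annotation. Requiring every detection in an image to be confident before pseudo-labeling it is a conservative design choice of this sketch.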

翻译:深神经网络在物体探测方面达到了非常高的精确度,但其成功取决于大量标签数据。为了减少对标签的依赖性,已经提出了各种主动学习战略,通常以探测器的信心为基础。然而,这些方法偏向于表现最佳的类别,并可能导致获得的数据集不能很好地代表测试组的数据。在这项工作中,我们提议了一个积极学习的统一框架,既考虑到探测器的不确定性,又考虑到探测器的坚固性,确保网络在所有类别中运行的准确性。此外,我们的方法能够将非常自信的预测贴上假标签,抑制潜在的分布漂移,同时进一步提高模型的性能。实验表明,我们的方法全面超越了在PACAL VOC07+12和MS-COCO上的广泛主动学习方法,其相对改进率为7.7%,或降低82%的标签成本。