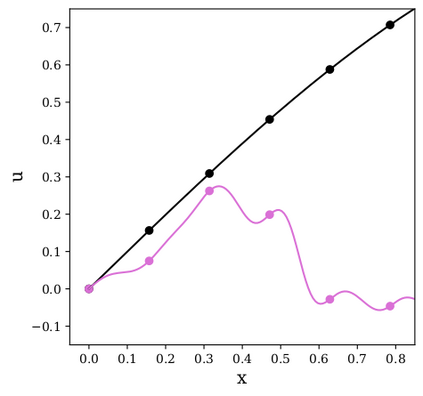

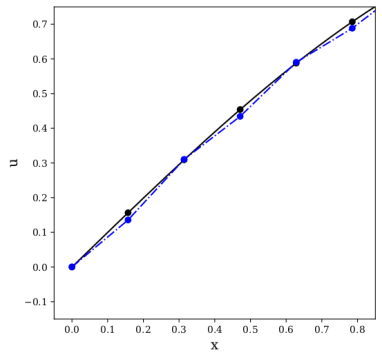

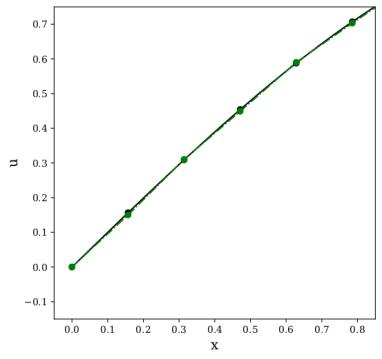

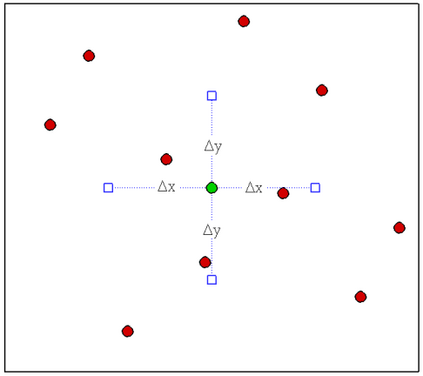

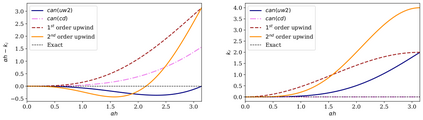

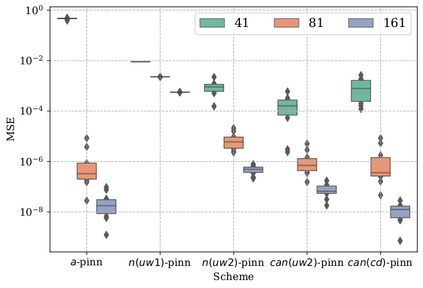

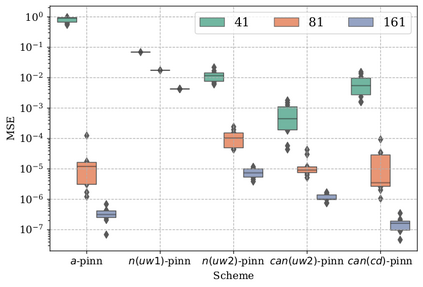

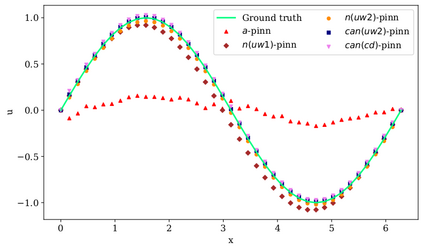

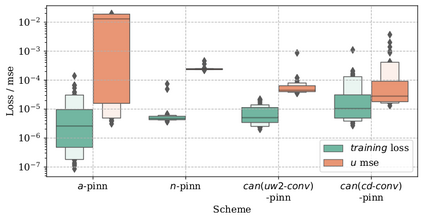

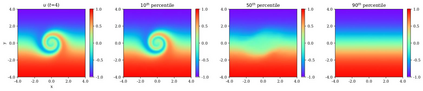

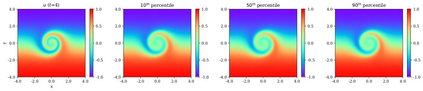

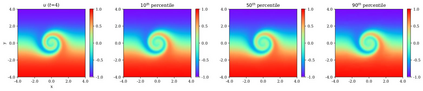

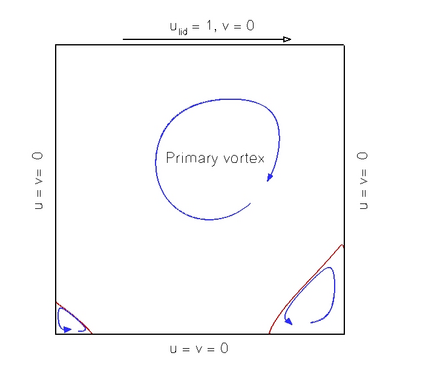

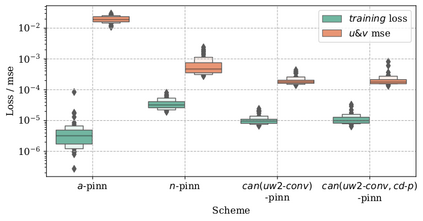

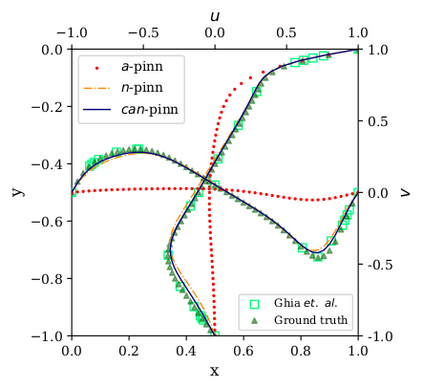

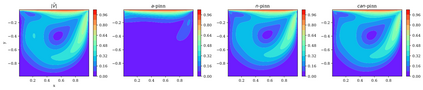

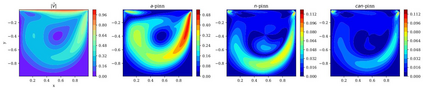

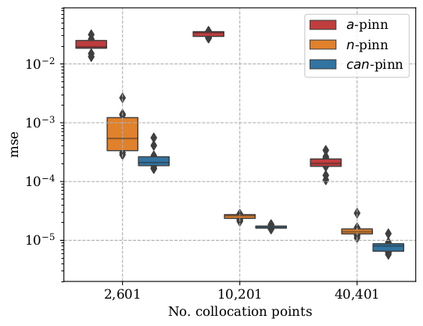

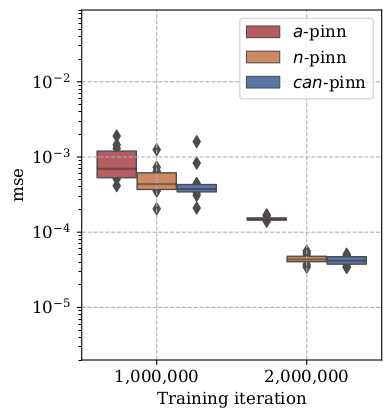

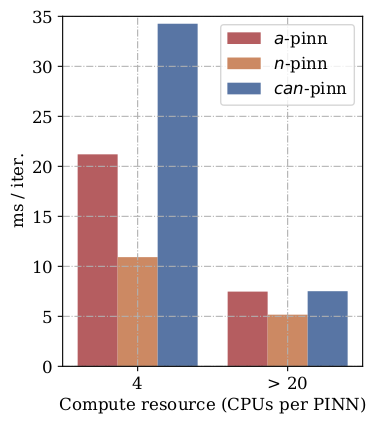

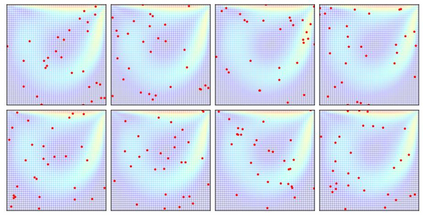

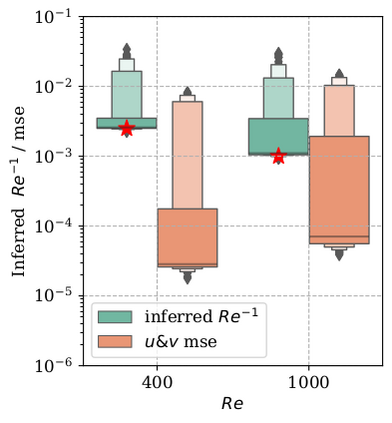

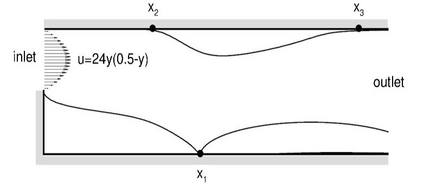

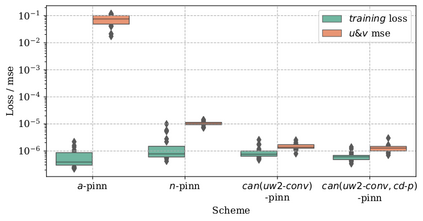

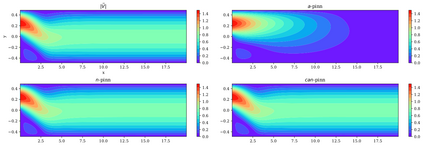

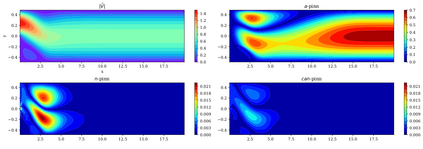

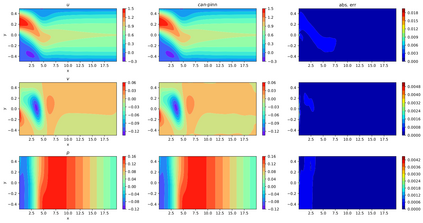

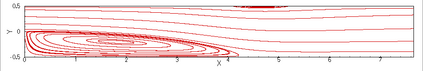

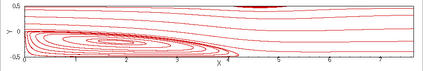

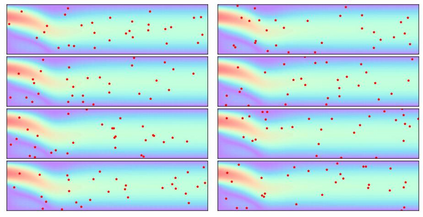

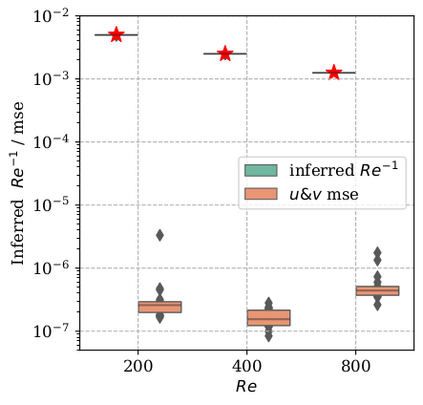

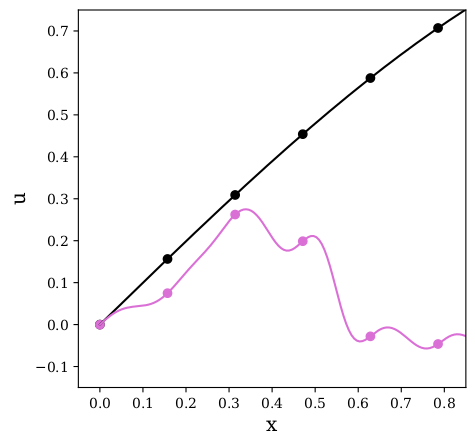

In this study, novel physics-informed neural network (PINN) methods are proposed that couple neighboring support points and automatic differentiation (AD) through Taylor series expansion, allowing efficient training with improved accuracy. The differential operators required to evaluate the PINN loss at collocation points are conventionally obtained via AD. Although AD has the advantage of computing exact gradients at any point, such PINNs achieve high accuracy only with large numbers of collocation points; otherwise, they are prone to converging to unphysical solutions. To make PINN training fast, the loss function is defined using two complementary ideas: a numerical differentiation (ND)-inspired method, and the coupling of that method with AD. The ND-based formulation of the training loss strongly links neighboring collocation points, enabling efficient training in sparse-sample regimes, but its accuracy is restricted by the interpolation scheme. The proposed coupled-automatic-numerical differentiation framework, termed can-PINN, unifies the advantages of AD and ND: it trains more robustly and efficiently than AD-based PINNs while improving accuracy by up to 1-2 orders of magnitude relative to ND-based PINNs. As a proof-of-concept demonstration of the can-scheme on fluid dynamics problems, two numerically inspired instantiations of can-PINN schemes for the convection and pressure gradient terms were derived to solve the incompressible Navier-Stokes (N-S) equations. The superior performance of can-PINNs is demonstrated on several challenging problems, including flow mixing phenomena, lid-driven flow in a cavity, and channel flow over a backward-facing step. The results reveal that for challenging problems like these, can-PINNs consistently achieve very good accuracy, whereas conventional AD-based PINNs fail.
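The abstract contrasts AD-based derivatives, ND-based stencils over neighboring collocation points, and a coupling of the two via Taylor series expansion. The Python sketch below illustrates that idea on a 1D toy function under several assumptions: a tiny forward-mode dual-number class stands in for the AD engine of a PINN framework, the hypothetical function `u(x) = x*sin(x)` stands in for a network output, and the coupled formulas are classic Hermite/compact (Padé-type) combinations chosen for illustration, not necessarily the exact can-schemes derived in the paper. The helper names (`ad`, `nd_first`, `coupled_first`, `coupled_second`) are likewise hypothetical.

```python
import math


class Dual:
    """Toy forward-mode AD number: carries a value and its derivative d/dx."""

    def __init__(self, val, der=0.0):
        self.val, self.der = val, der

    def __mul__(self, other):
        # Product rule: (fg)' = f'g + fg'
        return Dual(self.val * other.val,
                    self.der * other.val + self.val * other.der)


def sin(x):
    # Chain rule for sin: (sin f)' = cos(f) * f'
    return Dual(math.sin(x.val), math.cos(x.val) * x.der)


def u(x):
    # Hypothetical stand-in for a network output: u(x) = x * sin(x)
    return x * sin(x)


def ad(x):
    """Return (u, du/dx) at point x by seeding the tangent with 1."""
    out = u(Dual(x, 1.0))
    return out.val, out.der


def nd_first(x, h):
    """Second-order central difference using only neighbor values u_E, u_W."""
    u_e, _ = ad(x + h)
    u_w, _ = ad(x - h)
    return (u_e - u_w) / (2 * h)


def coupled_first(x, h):
    """Fourth-order combination of neighbor values and AD derivatives:
    u'_P ~ 3(u_E - u_W)/(4h) - (u'_E + u'_W)/4."""
    u_e, du_e = ad(x + h)
    u_w, du_w = ad(x - h)
    return 3 * (u_e - u_w) / (4 * h) - (du_e + du_w) / 4


def coupled_second(x, h):
    """Fourth-order second-derivative combination:
    u''_P ~ 2(u_E - 2u_P + u_W)/h^2 - (u'_E - u'_W)/(2h)."""
    u_e, du_e = ad(x + h)
    u_p, _ = ad(x)
    u_w, du_w = ad(x - h)
    return 2 * (u_e - 2 * u_p + u_w) / h**2 - (du_e - du_w) / (2 * h)


x, h = 0.3, 0.1
exact_d1 = math.sin(x) + x * math.cos(x)      # analytic u'
exact_d2 = 2 * math.cos(x) - x * math.sin(x)  # analytic u''

err_nd = abs(nd_first(x, h) - exact_d1)
err_can = abs(coupled_first(x, h) - exact_d1)
err_can2 = abs(coupled_second(x, h) - exact_d2)
print(f"ND first-derivative error:       {err_nd:.2e}")
print(f"coupled first-derivative error:  {err_can:.2e}")
print(f"coupled second-derivative error: {err_can2:.2e}")
```

Expanding u_E, u_W and their AD derivatives in Taylor series about x_P shows why the coupling helps: the central difference carries a leading error of h²u'''/6, whereas the coupled first-derivative combination cancels that term and leaves -h⁴u⁽⁵⁾/120, so at x = 0.3 with h = 0.1 the coupled error should come out roughly three orders of magnitude smaller. How the paper applies this neighbor coupling to the convection and pressure gradient terms of the N-S equations is not reproduced here.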
