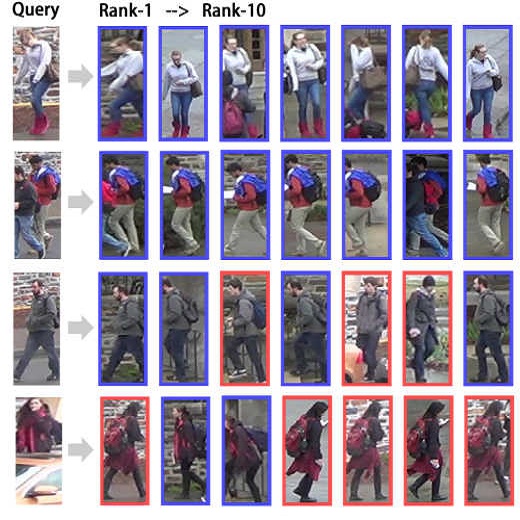

Attention mechanisms have demonstrated great potential in fine-grained visual recognition tasks. In this paper, we present a counterfactual attention learning method that learns more effective attention based on causal inference. Unlike most existing methods, which learn visual attention based on conventional likelihood, we propose to learn attention with counterfactual causality, which provides both a tool to measure attention quality and a powerful supervisory signal to guide the learning process. Specifically, we analyze the effect of the learned visual attention on the network prediction through counterfactual intervention and maximize this effect to encourage the network to learn more useful attention for fine-grained image recognition. Empirically, we evaluate our method on a wide range of fine-grained recognition tasks where attention plays a crucial role, including fine-grained image categorization, person re-identification, and vehicle re-identification. The consistent improvement on all benchmarks demonstrates the effectiveness of our method. Code is available at https://github.com/raoyongming/CAL
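The idea of measuring and maximizing the effect of attention can be illustrated with a minimal NumPy sketch. It is not the released implementation: it assumes that the counterfactual intervention replaces the learned attention with a random attention map, that the effect is the difference between the two predictions, and that the effect is supervised with a cross-entropy term. The function names (`attended_logits`, `attention_effect`, `cross_entropy`), the linear classifier, and the toy shapes are all hypothetical and chosen only for illustration.

```python
import numpy as np


def attended_logits(features, attention, weights):
    """Pool local features with an attention map, then classify linearly.

    features:  (N, C) local features (N spatial positions, C channels)
    attention: (N,)   non-negative attention weights
    weights:   (C, K) weights of a hypothetical linear classifier
    """
    attn = attention / (attention.sum() + 1e-12)  # normalize to sum to 1
    pooled = attn @ features                      # (C,)
    return pooled @ weights                       # (K,)


def attention_effect(features, attention, counterfactual_attention, weights):
    """Prediction under the learned attention minus the prediction
    under an intervened (counterfactual) attention map."""
    factual = attended_logits(features, attention, weights)
    counterfactual = attended_logits(features, counterfactual_attention, weights)
    return factual - counterfactual


def cross_entropy(logits, label):
    """Numerically stable softmax cross-entropy for one sample."""
    shifted = logits - logits.max()
    log_probs = shifted - np.log(np.exp(shifted).sum())
    return -log_probs[label]


rng = np.random.default_rng(0)
features = rng.normal(size=(16, 8))   # toy feature map: 16 positions, 8 channels
weights = rng.normal(size=(8, 3))     # toy classifier: 3 classes
attention = rng.uniform(size=16)      # stands in for the learned attention
label = 1

# Assumed intervention: swap the learned attention for a random one.
random_attention = rng.uniform(size=16)
effect = attention_effect(features, attention, random_attention, weights)

# Assumed objective: the usual classification loss plus a loss on the effect,
# so that the learned attention must beat the counterfactual one.
total_loss = (cross_entropy(attended_logits(features, attention, weights), label)
              + cross_entropy(effect, label))
```

In this sketch, an attention map that is no better than the counterfactual yields an effect close to zero, so the second loss term stays high; minimizing it pushes the learned attention toward regions that change the prediction in favor of the correct class.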
