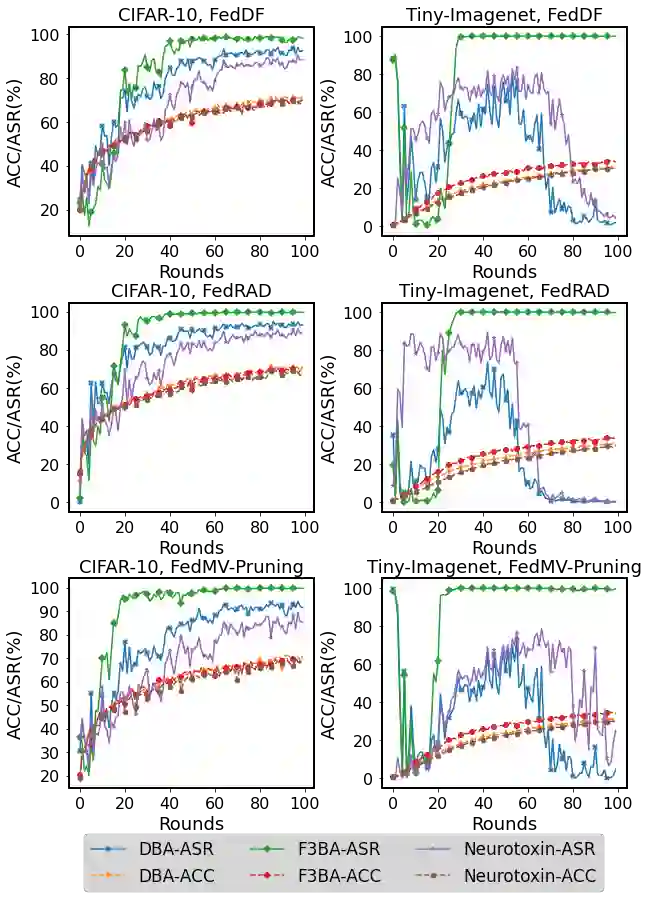

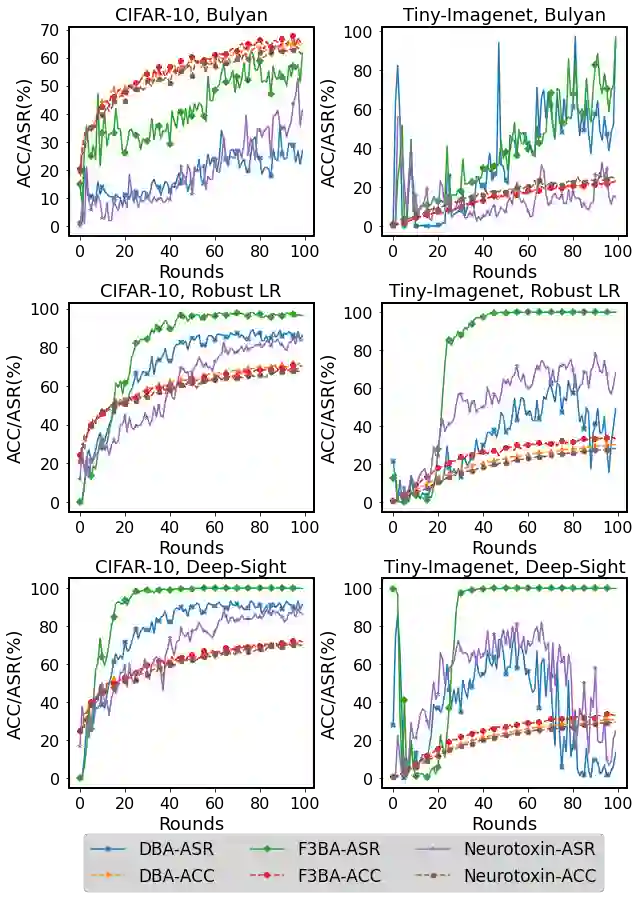

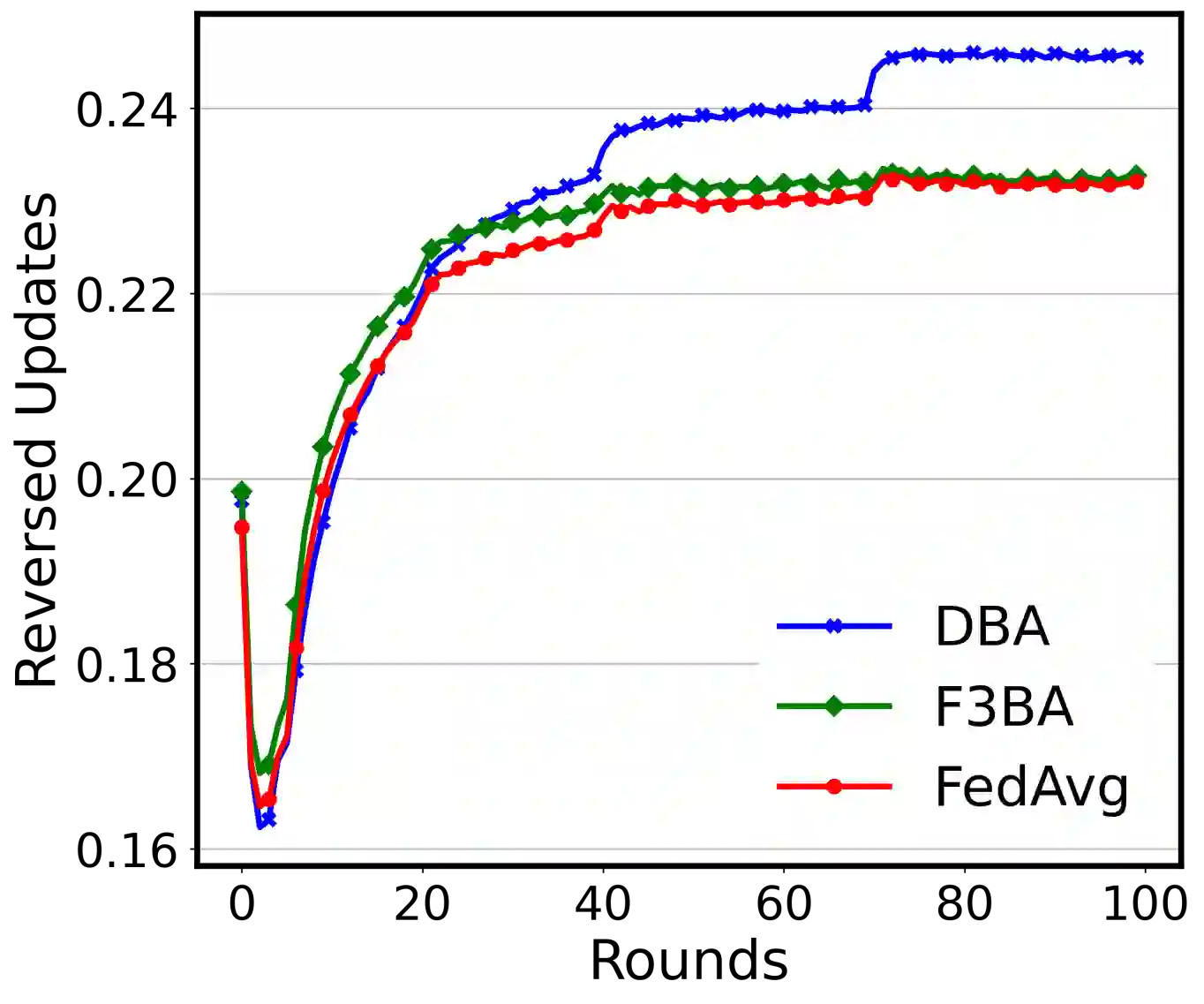

Federated Learning (FL) is a popular distributed machine learning paradigm that enables jointly training a global model without sharing clients' data. However, its repeated server-client communication leaves room for backdoor attacks, which aim to mislead the global model into a targeted misprediction whenever a specific trigger pattern is present. In response to such backdoor threats on federated learning, various defense measures have been proposed. In this paper, we study whether current defense mechanisms truly neutralize backdoor threats to federated learning in a practical setting by proposing a new federated backdoor attack method against possible countermeasures. Unlike traditional backdoor injection based on training (on triggered data) and rescaling (of the malicious client model), the proposed backdoor attack framework (1) directly modifies a small proportion of the local model weights via sign flips to inject the backdoor trigger, and (2) jointly optimizes the trigger pattern with the client model, which makes it more persistent and stealthy at circumventing existing defenses. In a case study, we examine the strengths and weaknesses of recent federated backdoor defenses from three major categories and provide suggestions to practitioners training federated models in practice.
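The sign-flip injection in step (1) can be illustrated with a small sketch. The abstract does not say how the flipped weights are chosen, so this sketch assumes a first-order selection rule: score each weight by w·g, where g is the gradient of a backdoor loss (the loss on triggered inputs toward the target label) with respect to that weight. Flipping w to -w changes the weight by -2w, so to first order the backdoor loss changes by -2wg; the weights with the largest positive scores are therefore the most useful to flip. The function names `sign_flip_backdoor` and `first_order_loss_change` and the `ratio` parameter are hypothetical, and the joint trigger optimization in step (2) is not shown.

```python
from typing import List


def sign_flip_backdoor(weights: List[float], grads: List[float], ratio: float) -> List[float]:
    """Hypothetical sketch: flip the signs of a small fraction of weights.

    `grads` is assumed to hold the gradient of a backdoor loss with respect
    to each weight. Only weights whose score w * g is positive are flipped,
    since for those the first-order change -2 * w * g lowers the loss.
    """
    if len(weights) != len(grads):
        raise ValueError("weights and grads must have the same length")
    n_flip = int(ratio * len(weights))  # size of the modified subset
    scores = [w * g for w, g in zip(weights, grads)]
    # Rank weight indices by descending score (largest expected loss drop first).
    order = sorted(range(len(weights)), key=lambda i: scores[i], reverse=True)
    flipped = list(weights)
    for i in order[:n_flip]:
        if scores[i] > 0:  # flipping a non-positive score would not help
            flipped[i] = -weights[i]
    return flipped


def first_order_loss_change(weights: List[float], new_weights: List[float], grads: List[float]) -> float:
    """First-order Taylor estimate of the backdoor-loss change: sum g * (w' - w)."""
    return sum(g * (nw - w) for w, nw, g in zip(weights, new_weights, grads))


if __name__ == "__main__":
    w = [0.5, -0.2, 0.8, -0.1]
    g = [0.4, 0.3, -0.1, -0.6]  # illustrative values, not real gradients
    w_new = sign_flip_backdoor(w, g, ratio=0.5)
    print(w_new)  # [-0.5, -0.2, 0.8, 0.1]
    print(first_order_loss_change(w, w_new, g))
```

In this toy run the weight 0.8 is left alone even though it is large, because its gradient sign means flipping it would raise the backdoor loss; a real attack would compute `grads` by backpropagating through the client model on triggered data.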