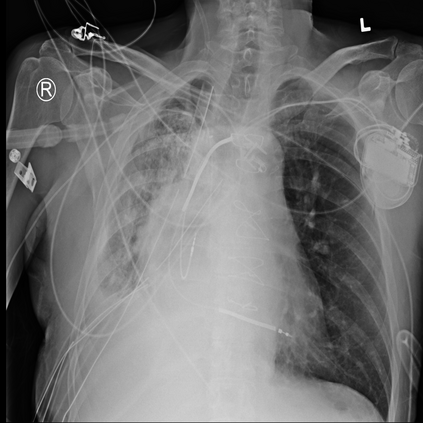

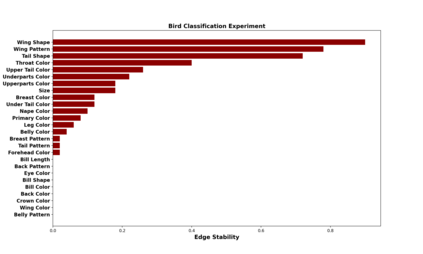

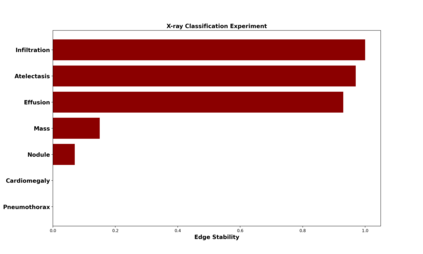

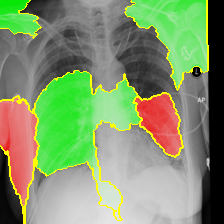

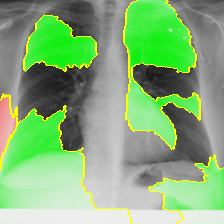

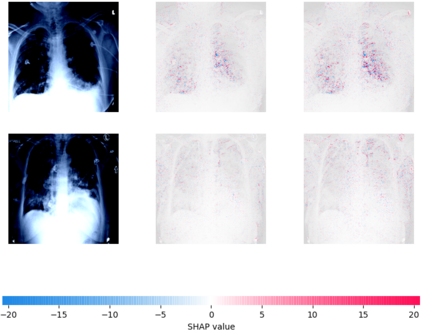

We propose to explain the behavior of black-box prediction methods (e.g., deep neural networks trained on image pixel data) using causal graphical models. Specifically, we explore learning the structure of a causal graph where the nodes represent prediction outcomes along with a set of macro-level "interpretable" features, while allowing for arbitrary unmeasured confounding among these variables. The resulting graph may indicate which of the interpretable features, if any, are possible causes of the prediction outcome and which may be merely associated with prediction outcomes due to confounding. The approach is motivated by a counterfactual theory of causal explanation wherein good explanations point to factors that are "difference-makers" in an interventionist sense. The resulting analysis may be useful in algorithm auditing and evaluation, by identifying features which make a causal difference to the algorithm's output.
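The distinction between a feature that is a possible cause of the prediction and one that is merely associated with it through confounding can be illustrated with a toy simulation. The sketch below is not the structure-learning procedure described above. It is a minimal, hypothetical example in which every name (`simulate`, `correlation`, the features `a` and `b`, the latent variable `u`) and every coefficient is an assumption chosen for illustration. An unmeasured variable `u` drives both features, while the simulated prediction depends only on `a`. Feature `b` is correlated with the prediction in observational data, but that association disappears once `b` is set by intervention.

```python
import random
import statistics


def correlation(xs, ys):
    """Pearson correlation of two equal-length sequences."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate(n, rng, intervene_on=None):
    """Draw n samples from a hypothetical toy model.

    u is an unmeasured confounder of the two interpretable features a and b.
    The simulated prediction y depends on a only (an assumption of this toy).
    If intervene_on is "a" or "b", that feature is set independently of u.
    """
    a_vals, b_vals, y_vals = [], [], []
    for _ in range(n):
        u = rng.gauss(0, 1)          # unmeasured confounder
        a = u + rng.gauss(0, 1)      # feature a, influenced by u
        b = u + rng.gauss(0, 1)      # feature b, influenced by u
        if intervene_on == "a":
            a = rng.gauss(0, 1)      # do(a): cut the dependence on u
        elif intervene_on == "b":
            b = rng.gauss(0, 1)      # do(b): cut the dependence on u
        y = 2.0 * a + rng.gauss(0, 0.5)  # stand-in for the black-box output
        a_vals.append(a)
        b_vals.append(b)
        y_vals.append(y)
    return a_vals, b_vals, y_vals


rng = random.Random(0)
n = 20000

# Observational regime: b is associated with y only through u and a.
_, b_obs, y_obs = simulate(n, rng)
obs_corr_b = correlation(b_obs, y_obs)

# Interventional regime on b: the association with y should vanish.
_, b_do, y_do_b = simulate(n, rng, intervene_on="b")
do_corr_b = correlation(b_do, y_do_b)

# Interventional regime on a: the association with y should persist.
a_do, _, y_do_a = simulate(n, rng, intervene_on="a")
do_corr_a = correlation(a_do, y_do_a)

print("corr(b, y), observational:", round(obs_corr_b, 3))
print("corr(b, y), under do(b):  ", round(do_corr_b, 3))
print("corr(a, y), under do(a):  ", round(do_corr_a, 3))
```

In this toy model only `a` is a "difference-maker" in the interventionist sense. The proposed approach aims to recover this kind of distinction from a learned causal graph without assuming that the confounder is measured.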
