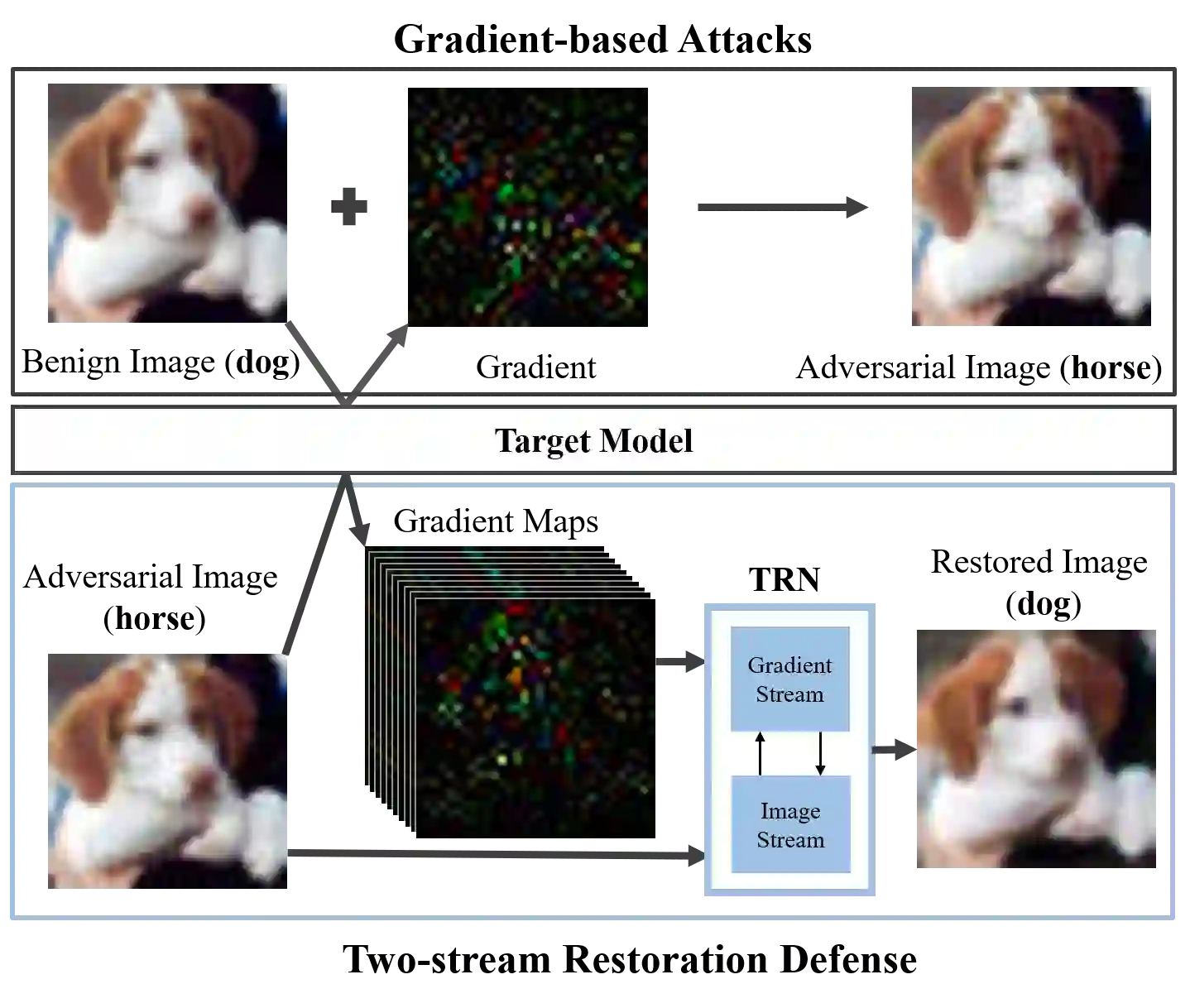

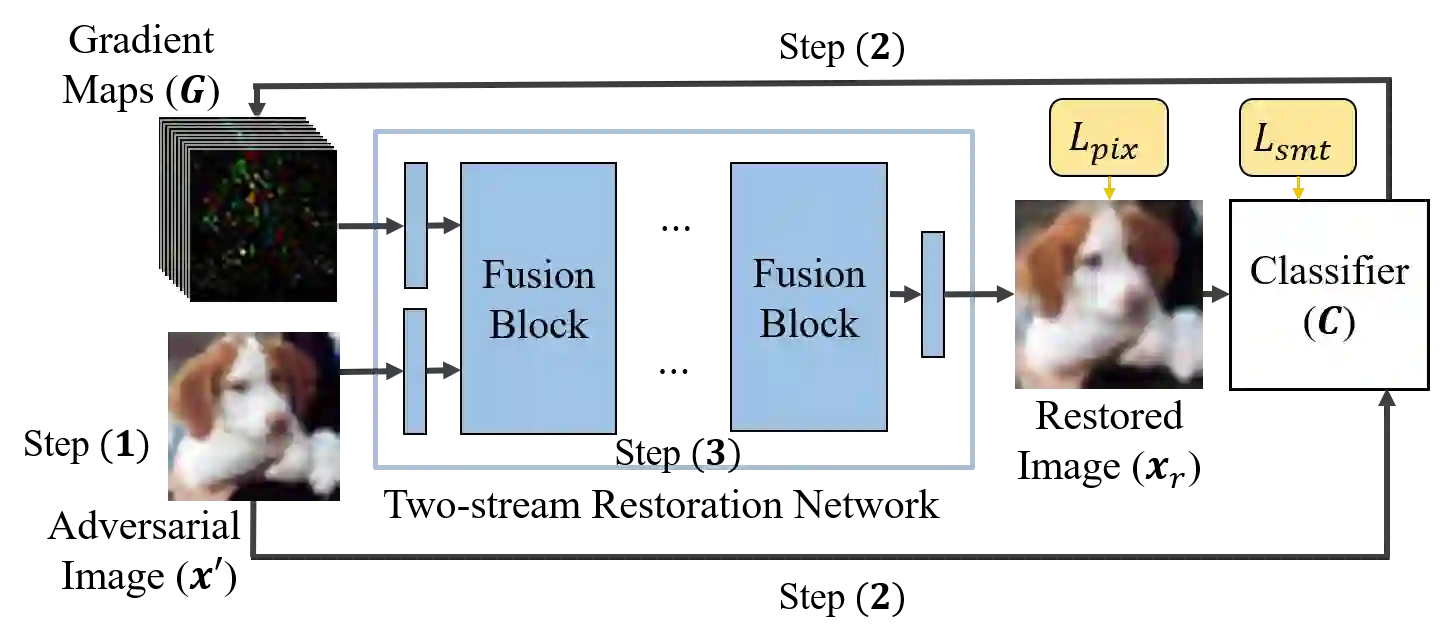

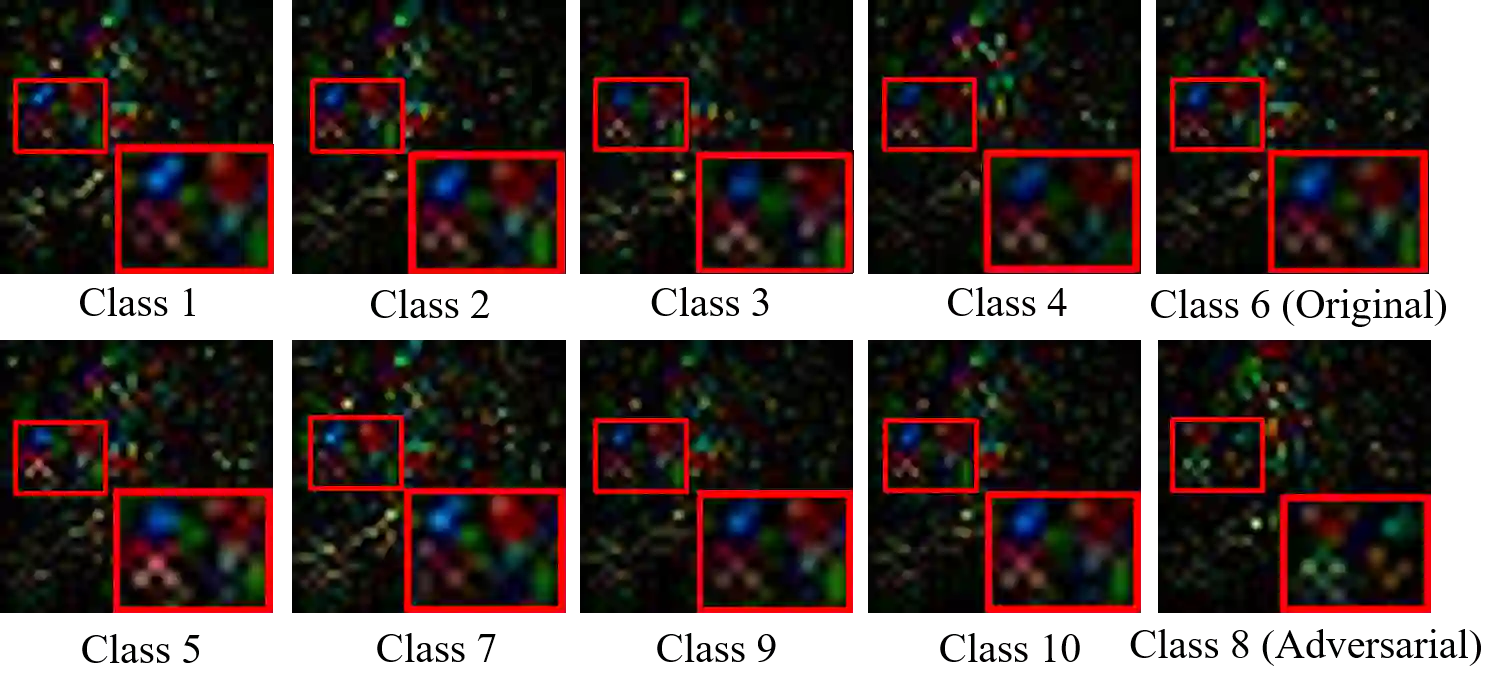

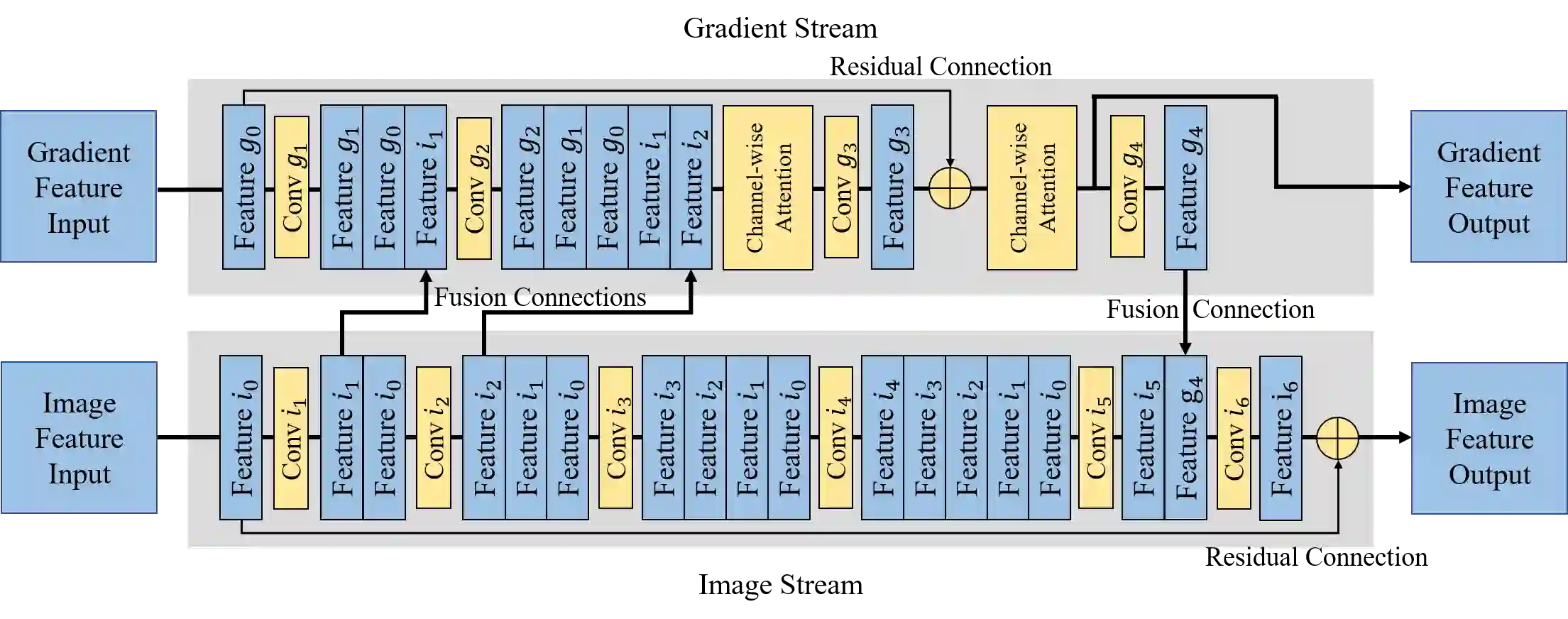

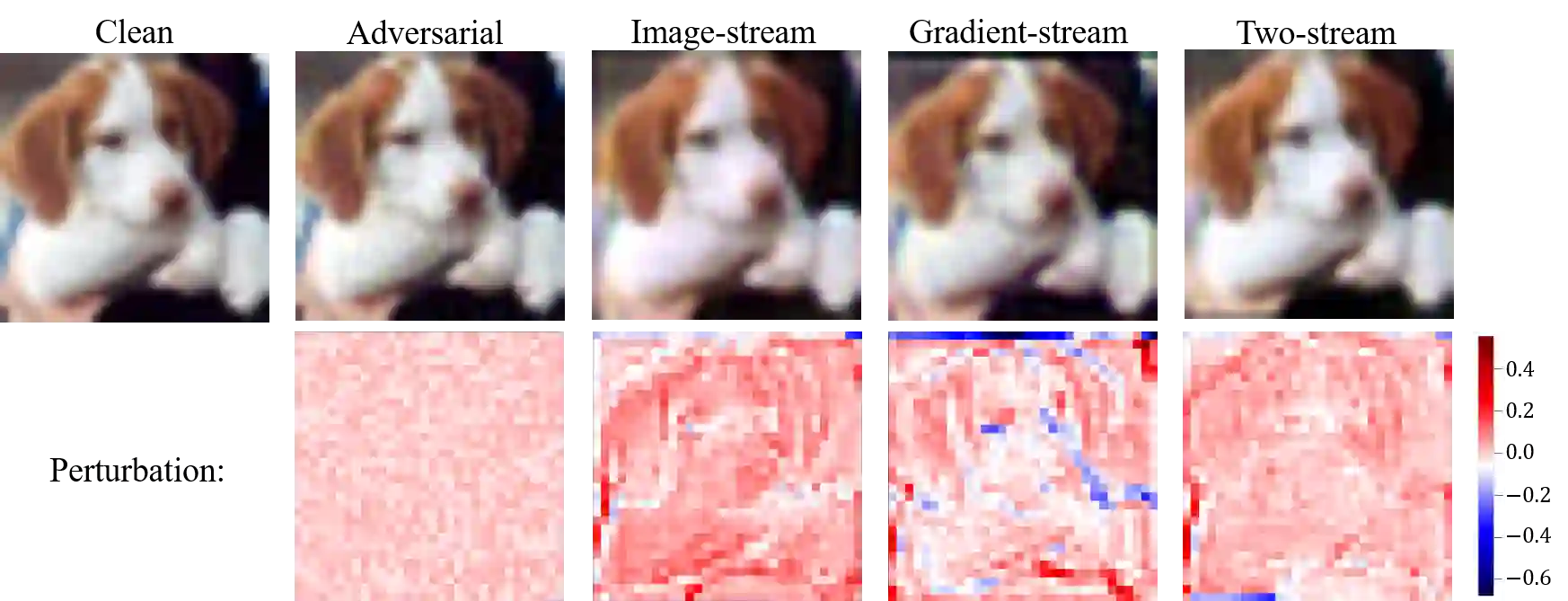

Deep learning models are known to be vulnerable to adversarial attacks, and gradient-based attacks in particular have recently achieved high success rates. The gradient measures how each image pixel affects the model output, and therefore carries critical information for generating malicious perturbations. In this paper, we show that gradients can also be exploited as a powerful weapon to defend against adversarial attacks. We propose a Two-stream Restoration Network (TRN) that takes both gradient maps and adversarial images as inputs and restores the adversarial images. To restore the perturbed images from these two input streams, a Gradient Map Estimation Mechanism estimates the gradients of adversarial images, and a Fusion Block in TRN explores and fuses the information in the two streams. Once trained, TRN can defend against a wide range of attack methods without significantly degrading performance on benign inputs. Our method is also generalizable, scalable, and hard to bypass. Experimental results on CIFAR10, SVHN, and Fashion MNIST demonstrate that our method outperforms state-of-the-art defense methods.
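To make the notion of a gradient map concrete, the following is a minimal sketch, not the TRN method itself. It assumes a toy logistic-regression "model" over a four-pixel "image" (the weights, bias, pixel values, and all function names are hypothetical), computes the gradient of the loss with respect to each pixel, and uses its sign to build a one-step gradient-sign perturbation of the kind gradient-based attacks rely on.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, pixels):
    """Probability of class 1 under a toy logistic-regression model."""
    return sigmoid(sum(w * x for w, x in zip(weights, pixels)) + bias)

def loss(weights, bias, pixels, label):
    """Binary cross-entropy loss for a single example."""
    p = predict(weights, bias, pixels)
    return -(label * math.log(p) + (1 - label) * math.log(1 - p))

def gradient_map(weights, bias, pixels, label):
    """d(loss)/d(pixel_i) for every pixel; for this model it is (p - y) * w_i."""
    p = predict(weights, bias, pixels)
    return [(p - label) * w for w in weights]

def gradient_sign_attack(weights, bias, pixels, label, eps):
    """Move each pixel by eps in the direction that increases the loss,
    then clip back to the valid pixel range [0, 1]."""
    grad = gradient_map(weights, bias, pixels, label)
    sign = lambda g: (g > 0) - (g < 0)
    return [min(1.0, max(0.0, x + eps * sign(g))) for x, g in zip(pixels, grad)]

# Hypothetical toy values chosen only for illustration.
weights = [2.0, -1.5, 0.5, -3.0]
bias = 0.1
image = [0.9, 0.2, 0.6, 0.1]
label = 1

grad = gradient_map(weights, bias, image, label)
adv = gradient_sign_attack(weights, bias, image, label, eps=0.1)

print("gradient map:     ", [round(g, 3) for g in grad])
print("adversarial image:", [round(a, 3) for a in adv])
print("loss before/after:", round(loss(weights, bias, image, label), 3),
      round(loss(weights, bias, adv, label), 3))
```

The same per-pixel gradient that drives the perturbation is the signal a gradient-aware defense would take as its second input stream. In a real network it would be obtained by automatic differentiation rather than the closed form used here.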
