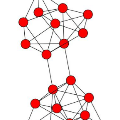

Network embedding represents nodes in a continuous vector space while preserving the structural information of the network. Existing methods usually adopt a "one-size-fits-all" approach to multi-scale structural information, such as the first- and second-order proximity of nodes, ignoring the fact that different scales play different roles in embedding learning. In this paper, we propose an Attention-based Adversarial Autoencoder Network Embedding (AAANE) framework, which promotes the collaboration of different scales and lets them vote for robust representations. The proposed AAANE consists of two components: 1) an attention-based autoencoder that effectively captures the highly non-linear network structure and can de-emphasize irrelevant scales during training; and 2) an adversarial regularization that guides the autoencoder to learn robust representations by matching the posterior distribution of the latent embeddings to a given prior distribution. This is the first attempt to introduce attention mechanisms to multi-scale network embedding. Experimental results on real-world networks show that the learned attention parameters differ from network to network and that the proposed approach outperforms existing state-of-the-art approaches to network embedding.
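The idea of letting scales "vote" can be illustrated with a minimal sketch. It is not the AAANE implementation: the abstract does not specify how scales are built or how attention is computed, so the sketch assumes that scale k is the k-step transition matrix of the graph and that the attention weights are a softmax over per-scale scores. The scores are fixed by hand here, whereas in the paper they are learned. The autoencoder and the adversarial regularizer are omitted, and all function names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def row_normalize(adj):
    """Turn an adjacency matrix into a one-step transition matrix."""
    result = []
    for row in adj:
        degree = sum(row)
        result.append([v / degree if degree else 0.0 for v in row])
    return result


def matmul(a, b):
    """Multiply two dense matrices given as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def multi_scale_proximity(adj, num_scales):
    """Return [P, P^2, ..., P^K]; assumption: scale k is k-step proximity."""
    p = row_normalize(adj)
    scales = [p]
    for _ in range(num_scales - 1):
        scales.append(matmul(scales[-1], p))
    return scales


def attend(scales, scores):
    """Combine the scale matrices using softmax attention weights."""
    weights = softmax(scores)
    n = len(scales[0])
    combined = [
        [sum(w * s[i][j] for w, s in zip(weights, scales)) for j in range(n)]
        for i in range(n)
    ]
    return weights, combined


# Toy graph: a path on four nodes, 0 - 1 - 2 - 3.
adj = [
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
]
scales = multi_scale_proximity(adj, num_scales=3)

# Hand-picked scores (learned in the paper): favor first-order proximity.
weights, combined = attend(scales, scores=[2.0, 0.5, -1.0])
```

A scale with a low score receives a small weight and contributes little to `combined`, which is the sense in which irrelevant scales are de-emphasized. In a full model, `combined` would be the input that the autoencoder reconstructs.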
