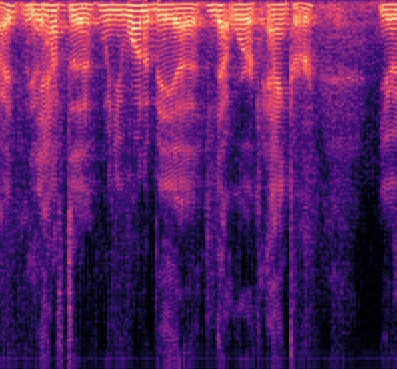

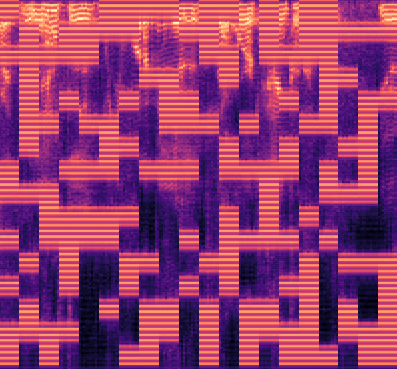

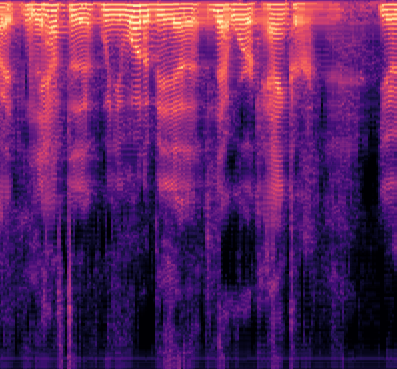

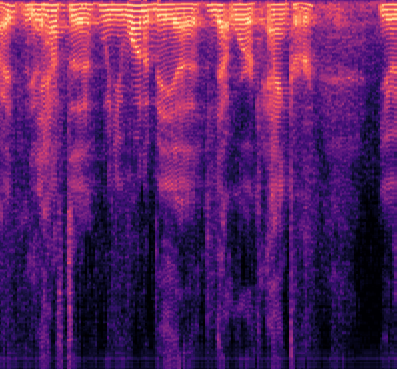

Recent years have seen remarkable progress in speech emotion recognition (SER), driven by advances in deep learning. However, the limited availability of labeled data remains a significant challenge in the field. Self-supervised learning has recently emerged as a promising way to address this challenge. In this paper, we propose the vector quantized masked autoencoder for speech (VQ-MAE-S), a self-supervised model that is fine-tuned to recognize emotions from speech signals. VQ-MAE-S is a masked autoencoder (MAE) that operates in the discrete latent space of a vector-quantized variational autoencoder (VQ-VAE). Experimental results show that VQ-MAE-S, pre-trained on the VoxCeleb2 dataset and fine-tuned on emotional speech data, outperforms both an MAE operating on the raw spectrogram representation and other state-of-the-art SER methods.
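To make "an MAE operating in the discrete latent space of a VQ-VAE" concrete, here is a minimal sketch of the pre-training data path. It is not the authors' implementation. It assumes that (1) continuous encoder frames are mapped to their nearest codebook entry, as in a standard VQ-VAE bottleneck, (2) a random fraction of the resulting token indices is masked, and (3) the MAE is trained to predict the original indices at the masked positions with a cross-entropy loss. The abstract does not specify the loss, masking ratio, or masking granularity, so these choices, the toy codebook, and the names `quantize`, `mask_tokens`, `masked_cross_entropy` and `MASK` are all hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

Vector = Sequence[float]

MASK = -1  # hypothetical sentinel id for masked token positions


def quantize(frames: List[Vector], codebook: List[Vector]) -> List[int]:
    """Map each continuous latent frame to the index of its nearest
    codebook vector (squared Euclidean distance), as in a VQ-VAE."""
    indices = []
    for z in frames:
        dists = [sum((a - b) ** 2 for a, b in zip(z, e)) for e in codebook]
        indices.append(min(range(len(codebook)), key=dists.__getitem__))
    return indices


def mask_tokens(
    tokens: List[int], ratio: float, rng: random.Random
) -> Tuple[List[int], List[int]]:
    """Replace a random fraction `ratio` of the discrete tokens with MASK.
    Returns the corrupted sequence and the sorted masked positions,
    which are the reconstruction targets for the MAE."""
    n_mask = int(round(ratio * len(tokens)))
    positions = sorted(rng.sample(range(len(tokens)), n_mask))
    corrupted = list(tokens)
    for p in positions:
        corrupted[p] = MASK
    return corrupted, positions


def masked_cross_entropy(
    logits: List[List[float]], targets: List[int], positions: List[int]
) -> float:
    """Average cross-entropy between predicted logits over the codebook and
    the original token indices, computed only at masked positions
    (assumed loss; the abstract does not state it)."""
    if not positions:
        raise ValueError("no masked positions to score")
    total = 0.0
    for p in positions:
        row = logits[p]
        m = max(row)  # subtract the max for a numerically stable log-sum-exp
        log_z = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_z - row[targets[p]]
    return total / len(positions)


if __name__ == "__main__":
    # Toy 2-D codebook with 3 entries and 4 latent frames (made-up values).
    codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, 2.0]]
    frames = [[0.1, -0.1], [0.9, 1.2], [-0.8, 1.9], [1.1, 0.8]]
    tokens = quantize(frames, codebook)
    print(tokens)  # [0, 1, 2, 1]
    corrupted, positions = mask_tokens(tokens, ratio=0.5, rng=random.Random(0))
    print(corrupted, positions)
```

Because the targets are discrete codebook indices rather than spectrogram values, reconstruction becomes a classification problem over the codebook. This is one plausible reason a token-space MAE could behave differently from an MAE regressing raw spectrogram patches, though the abstract itself only reports the empirical comparison.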
