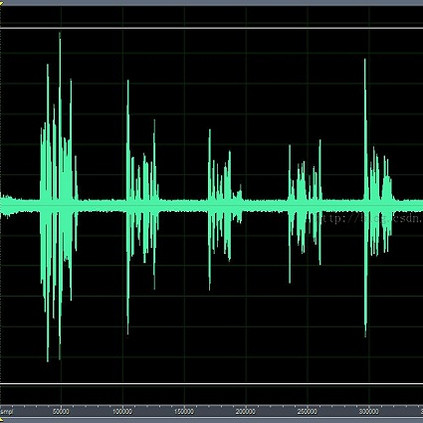

Single-channel deep speech enhancement approaches often estimate a single multiplicative mask to extract clean speech, without any measure of that estimate's accuracy. In this work, we instead propose to quantify the uncertainty associated with clean speech estimates in neural network-based speech enhancement. Predictive uncertainty is typically categorized into aleatoric uncertainty and epistemic uncertainty. The former accounts for the inherent uncertainty in the data, and the latter corresponds to the uncertainty of the model. Aiming for robust clean speech estimation and efficient predictive uncertainty quantification, we propose to integrate statistical complex Gaussian mixture models (CGMMs) into a deep speech enhancement framework. More specifically, we model the dependency between input and output stochastically by means of a conditional probability density, and we train a neural network to map the noisy input to the full posterior distribution of clean speech, modeled as a mixture of multiple complex Gaussian components. Experimental results on different datasets show that the proposed algorithm effectively captures predictive uncertainty and that combining powerful statistical models with deep learning also delivers superior speech enhancement performance.
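
The abstract does not spell out the training objective, so the following is only a minimal sketch of one way such a model could be scored: the negative log-likelihood of a clean-speech coefficient under a mixture of circularly symmetric complex Gaussians, plus the mean and variance of that mixture as a point estimate and an uncertainty measure. The function names, the array shapes, and the assumption that a network outputs normalized log-weights, complex means, and log-variances per time-frequency bin are all illustrative and not taken from the paper.

```python
import numpy as np


def cgmm_nll(s, log_weights, means, log_vars):
    """Negative log-likelihood of complex targets under a complex Gaussian mixture.

    Hypothetical shapes: s is complex with shape (...), the other arguments
    have shape (..., K) for K mixture components. log_weights is assumed to be
    already normalized (for example, the output of a log-softmax).
    """
    # Squared distance between the target and each component mean.
    sq_err = np.abs(s[..., None] - means) ** 2
    # Log-density of a circular complex Gaussian: -log(pi * var) - |s - mu|^2 / var.
    log_comp = log_weights - np.log(np.pi) - log_vars - sq_err / np.exp(log_vars)
    # Numerically stable log-sum-exp over the component axis.
    m = log_comp.max(axis=-1, keepdims=True)
    log_mix = m[..., 0] + np.log(np.exp(log_comp - m).sum(axis=-1))
    return -log_mix


def cgmm_mean_and_variance(log_weights, means, log_vars):
    """Mean and total variance of the mixture, per time-frequency bin."""
    w = np.exp(log_weights)
    mean = (w * means).sum(axis=-1)
    # E[|s|^2] of the mixture, then subtract |E[s]|^2.
    second_moment = (w * (np.exp(log_vars) + np.abs(means) ** 2)).sum(axis=-1)
    return mean, second_moment - np.abs(mean) ** 2
```

In this sketch the mixture variance grows both when individual components are wide and when the component means disagree, which is one simple way to read a per-bin uncertainty off the predicted distribution.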
