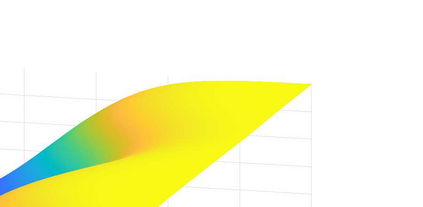

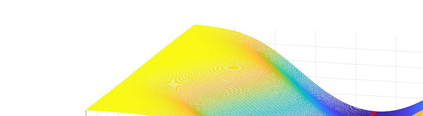

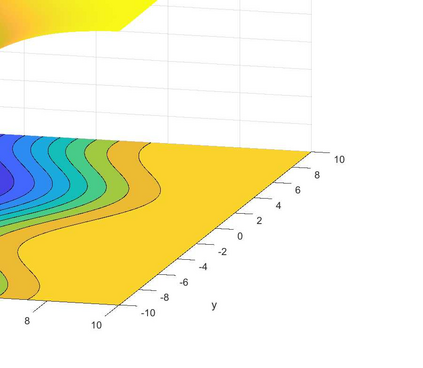

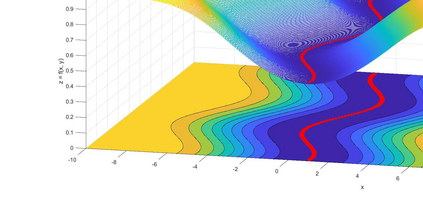

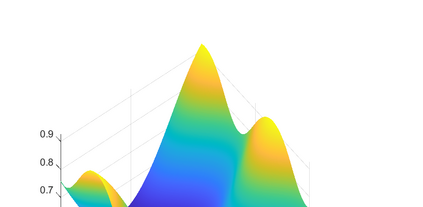

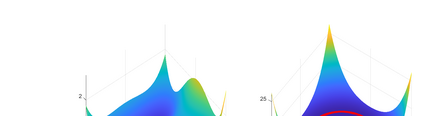

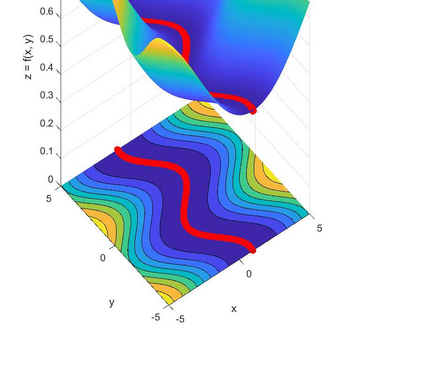

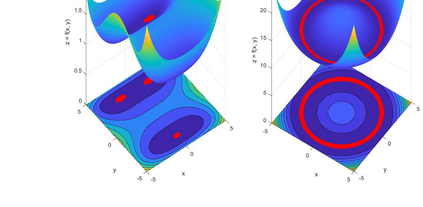

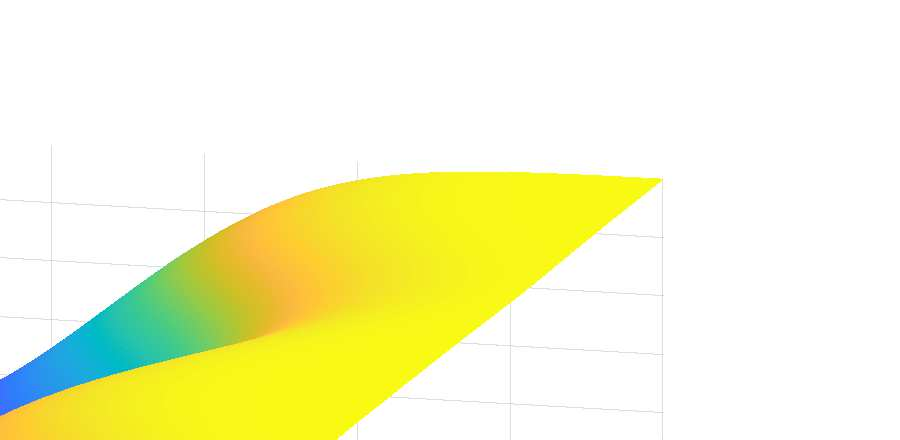

Loss functions with non-isolated minima have emerged in several machine learning problems, creating a gap between theory and practice. In this paper, we formulate a new type of local convexity condition that is suitable for describing the behavior of loss functions near non-isolated minima. We show that this condition is general enough to encompass many existing conditions. In addition, we study the local convergence of SGD under this mild condition by adopting the notion of stochastic stability. The corresponding concentration inequalities from the convergence analysis help explain empirical observations from practical training results.
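The abstract does not state the new local convexity condition itself, so the sketch below illustrates only the setting it targets, not the paper's condition or analysis. It assumes a toy finite-sum loss chosen here for illustration, f(u, v) = E_a[½ a² (uv − 1)²] with a ~ Uniform(0.5, 1.5). Every sample is minimized on the same curve {uv = 1}, so the minimizers are non-isolated. The Hessian on that curve has rank one, so local strong convexity fails there. The function name `sgd_on_hyperbola`, the step size, and the sampling distribution are hypothetical choices.

```python
import random


def sgd_on_hyperbola(u0, v0, lr=0.05, steps=5000, seed=0):
    """Plain SGD on the illustrative loss f(u, v) = E_a[0.5 * a^2 * (u*v - 1)^2].

    All samples share the zero set {u*v = 1}: the minimizers form a curve
    (non-isolated minima) and the stochastic gradient vanishes on it.
    """
    rng = random.Random(seed)
    u, v = u0, v0
    for _ in range(steps):
        a = rng.uniform(0.5, 1.5)  # draw one random sample weight
        r = u * v - 1.0            # residual, zero exactly on the minimizer set
        gu = a * a * r * v         # d/du of 0.5 * a^2 * r^2
        gv = a * a * r * u         # d/dv of 0.5 * a^2 * r^2
        u, v = u - lr * gu, v - lr * gv
    return u, v


if __name__ == "__main__":
    # Two starting points converge to two different minimizers on the same curve.
    for start in [(1.5, 1.0), (1.0, 1.5)]:
        u, v = sgd_on_hyperbola(*start)
        print(start, "->", (round(u, 3), round(v, 3)), "u*v =", round(u * v, 6))
```

Both runs drive the residual uv − 1 to zero, but they stop at different points of the minimizer curve. Each iterate is attracted to the solution set rather than to a single isolated minimizer, which is the behavior a local condition near non-isolated minima has to capture.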
