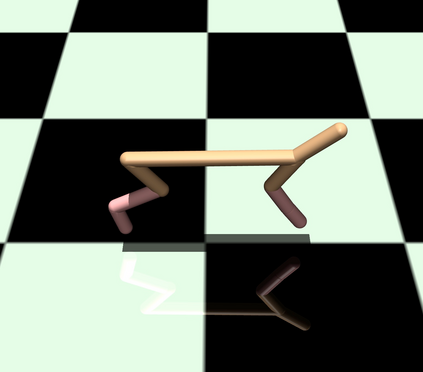

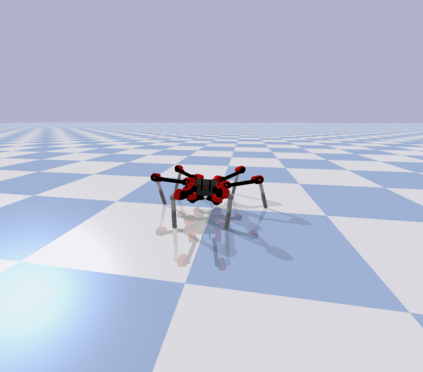

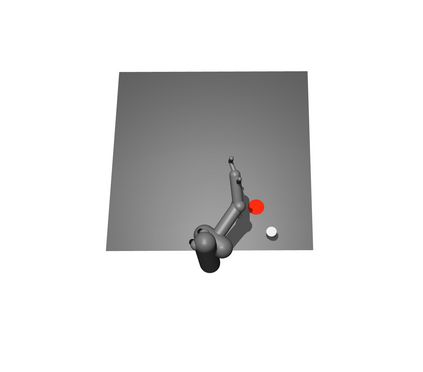

Model-based reinforcement learning (MBRL) is a promising framework for learning control in a data-efficient manner. MBRL algorithms can be fairly complex because they combine a learned dynamics model with a subsequent planning algorithm, and as a result they often have tens of hyperparameters and architectural choices. For this reason, MBRL typically requires significant human expertise before it can be applied to new problems and domains. To alleviate this problem, we propose to use automatic hyperparameter optimization (HPO). We demonstrate that automated HPO tackles this problem effectively and yields significantly improved performance compared to tuning by human experts. In addition, we show that tuning several MBRL hyperparameters dynamically, i.e., during training itself, further improves performance compared to static hyperparameters that are kept fixed for the whole training run. Finally, our experiments provide valuable insights into the effects of several hyperparameters, such as the planning horizon and the learning rate, and their influence on the stability of training and the resulting rewards.
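The difference between static and dynamic hyperparameters can be illustrated with a deliberately small toy sketch. Everything in it is an assumption made for illustration and none of it comes from the experiments summarized above: the candidate horizons, the per-stage model errors, and the reward model `toy_return` (a longer plan looks further ahead, but model error compounds with plan length) are all hypothetical. The sketch also selects values with oracle access to the toy return, whereas a real HPO method would have to estimate returns from noisy rollouts.

```python
# Toy illustration only: the reward model and all numbers below are
# made-up assumptions, not the benchmark or the algorithm from the abstract.

HORIZONS = [5, 10, 20, 40]         # hypothetical candidate planning horizons
MODEL_ERRORS = [0.05, 0.02, 0.01]  # hypothetical model error per training stage


def toy_return(horizon, model_error):
    """Longer plans see further ahead, but model error compounds with length."""
    return horizon - model_error * horizon ** 2


def best_static(horizons, errors):
    """Pick the single horizon with the highest return summed over all stages."""
    return max(horizons, key=lambda h: sum(toy_return(h, e) for e in errors))


def best_dynamic(horizons, errors):
    """Pick the best horizon separately for each training stage."""
    return [max(horizons, key=lambda h: toy_return(h, e)) for e in errors]


static_h = best_static(HORIZONS, MODEL_ERRORS)
schedule = best_dynamic(HORIZONS, MODEL_ERRORS)

static_total = sum(toy_return(static_h, e) for e in MODEL_ERRORS)
dynamic_total = sum(toy_return(h, e) for h, e in zip(schedule, MODEL_ERRORS))

print("static horizon:", static_h)
print("dynamic schedule:", schedule)
print("dynamic beats static:", dynamic_total > static_total)
```

In this toy setting the best fixed horizon is a compromise across stages, while the per-stage schedule lengthens the horizon as the assumed model error shrinks. Because the fixed choice is a special case of a schedule, the dynamic total can never be lower here; whether a similar gain appears in practice depends on how reliably returns can be estimated during training.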
