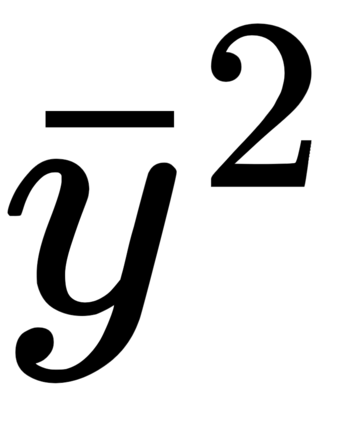

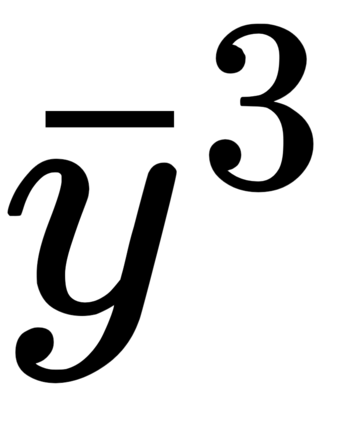

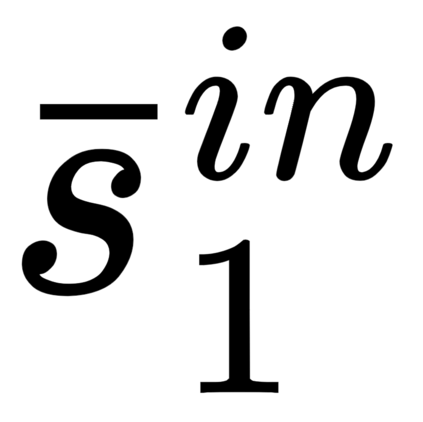

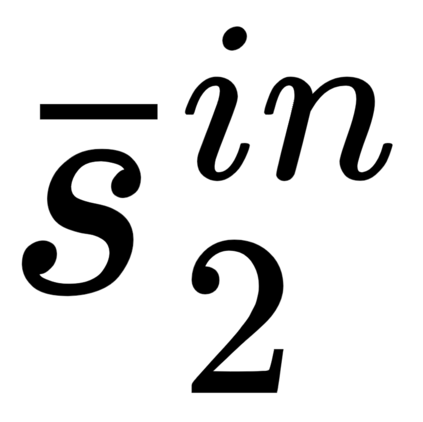

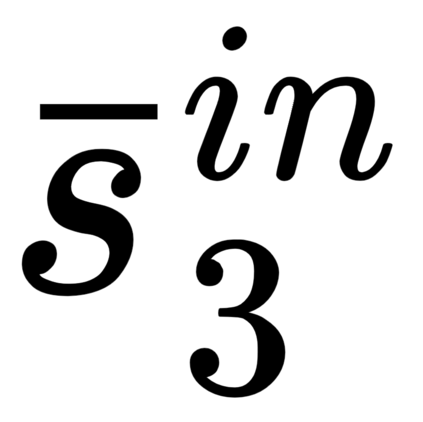

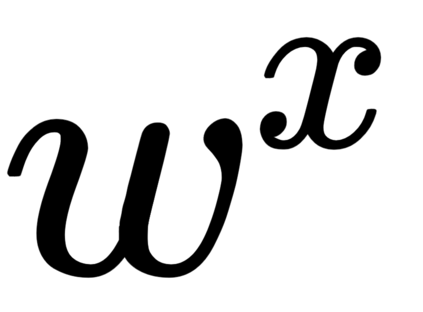

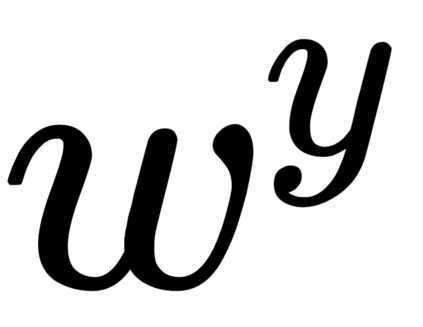

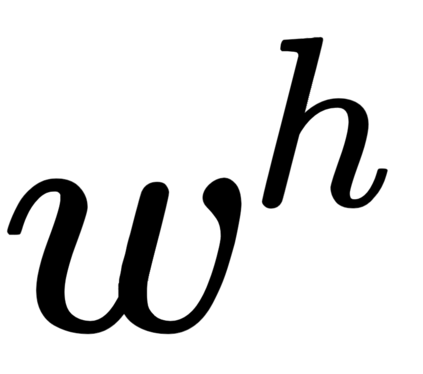

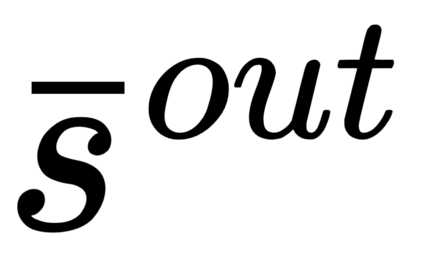

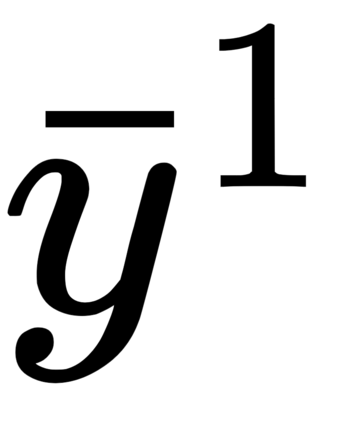

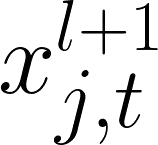

Predictive coding networks (PCNs) are an influential model of information processing in the brain. They have appealing theoretical interpretations and offer a single mechanism that accounts for diverse perceptual phenomena. Backpropagation (BP), on the other hand, is commonly regarded as the most successful learning method in modern machine learning. It is therefore exciting that recent work formulates inference learning (IL), which trains PCNs to approximate BP. However, several critical issues remain: (i) IL only approximates BP, and only under unrealistic or non-trivial requirements; (ii) IL approximates BP for single-step weight updates, and whether it reaches the same point as BP after many weight updates is unknown; and (iii) IL is computationally much more costly than BP. To solve these issues, a variant of IL that is strictly equivalent to BP in fully connected networks has been proposed. In this work, we build on this result by showing that it also holds for more complex architectures, namely convolutional neural networks and (many-to-one) recurrent neural networks. To our knowledge, we are the first to show that a biologically plausible algorithm can exactly replicate the accuracy of BP on such complex architectures, bridging the existing gap between IL and BP and setting a new level of performance for PCNs, which can now be considered efficient alternatives to BP.
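To make the fully connected equivalence concrete, the following is a minimal NumPy sketch and not the authors' implementation. The abstract does not spell out the conditions, so everything here is an assumption: value nodes are initialized to their feedforward predictions, the output layer is clamped to the target, the inference rate is 1, and each weight matrix takes its update at the inference step at which the error first reaches it. The names `bp_updates` and `zil_updates`, the tanh nonlinearity, and the squared-error loss are all hypothetical choices made for illustration.

```python
import numpy as np

def f(x):
    return np.tanh(x)

def df(x):
    return 1.0 - np.tanh(x) ** 2

def forward(weights, x_in):
    """Feedforward pass: layer k+1 is predicted as weights[k] @ f(layer k)."""
    xs = [x_in]
    for W in weights:
        xs.append(W @ f(xs[-1]))
    return xs

def bp_updates(weights, x_in, y):
    """Negative gradient of 0.5 * ||y - output||^2 for each weight matrix (plain BP)."""
    xs = forward(weights, x_in)
    delta = y - xs[-1]
    grads = [None] * len(weights)
    for l in reversed(range(len(weights))):
        grads[l] = np.outer(delta, f(xs[l]))
        delta = df(xs[l]) * (weights[l].T @ delta)
    return grads

def zil_updates(weights, x_in, y):
    """Weight updates from local prediction errors only (assumed IL variant).

    Weights are held fixed during inference here; the updates are returned
    rather than applied, which is a simplification for comparison with BP.
    """
    n = len(weights)
    xs = forward(weights, x_in)   # assumption: value nodes start at their predictions
    xs[-1] = y.copy()             # output layer clamped to the target
    updates = [None] * n
    for t in range(n):
        # Prediction error of every non-input layer at inference step t.
        eps = [None] + [xs[k] - weights[k - 1] @ f(xs[k - 1]) for k in range(1, n + 1)]
        # Update the weight matrix that the error has just reached.
        l = n - 1 - t
        updates[l] = np.outer(eps[l + 1], f(xs[l]))
        # One simultaneous inference step on the hidden layers (inference rate 1).
        new_xs = list(xs)
        for k in range(1, n):
            new_xs[k] = xs[k] - eps[k] + df(xs[k]) * (weights[k].T @ eps[k + 1])
        xs = new_xs
    return updates

# Small random fully connected network: 4 -> 5 -> 3 -> 2 units.
rng = np.random.default_rng(0)
sizes = [4, 5, 3, 2]
weights = [rng.normal(scale=0.5, size=(sizes[i + 1], sizes[i])) for i in range(3)]
x_in = rng.normal(size=4)
y = rng.normal(size=2)

bp = bp_updates(weights, x_in, y)
il = zil_updates(weights, x_in, y)
max_diff = max(np.max(np.abs(a - b)) for a, b in zip(bp, il))
print("largest difference between BP and IL updates:", max_diff)
```

Under these assumptions the two sets of updates agree up to floating-point rounding, because the error reaching each layer at the chosen step coincides with the BP error term for that layer. The sketch covers only the fully connected case; the convolutional and recurrent cases are not shown.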
