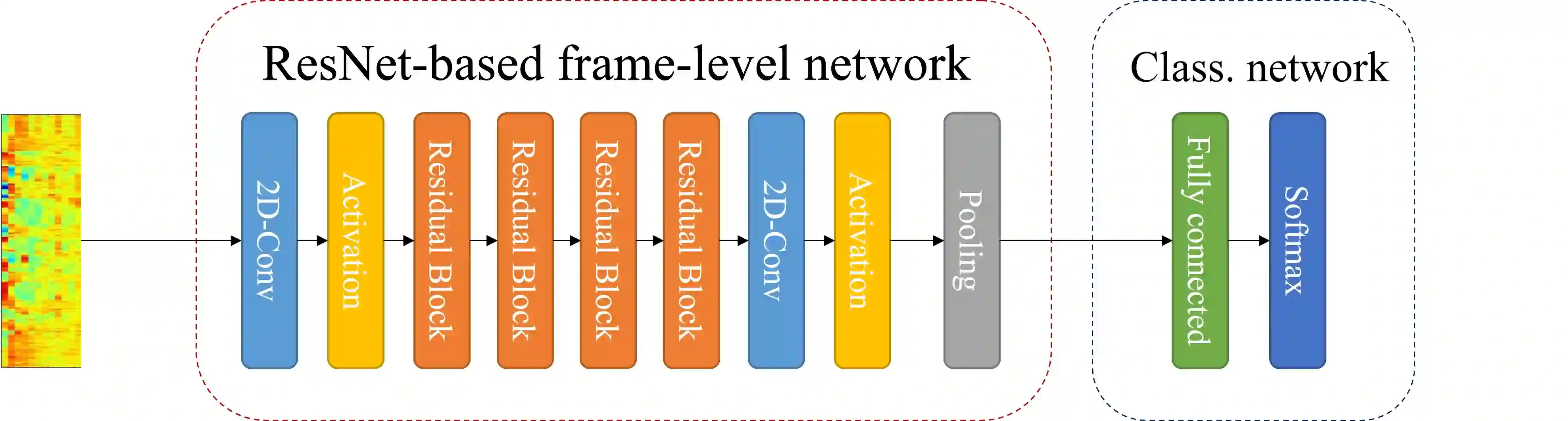

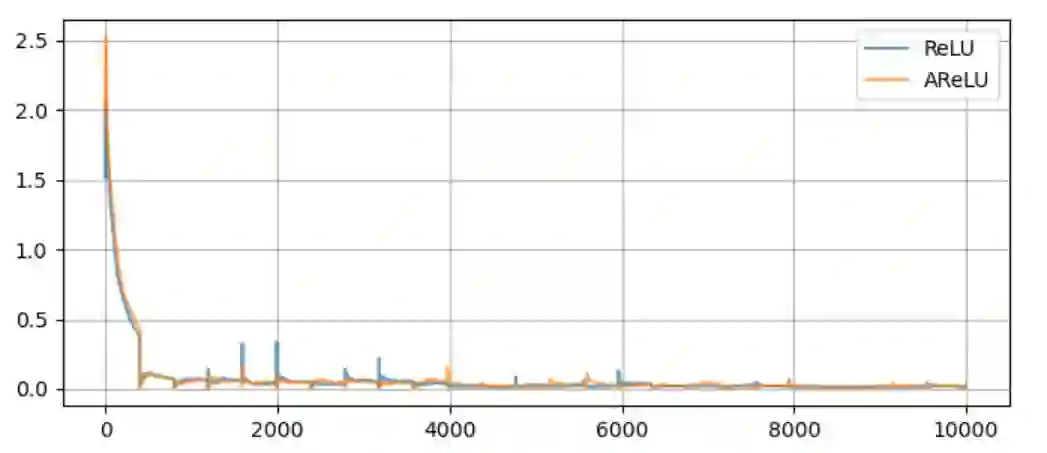

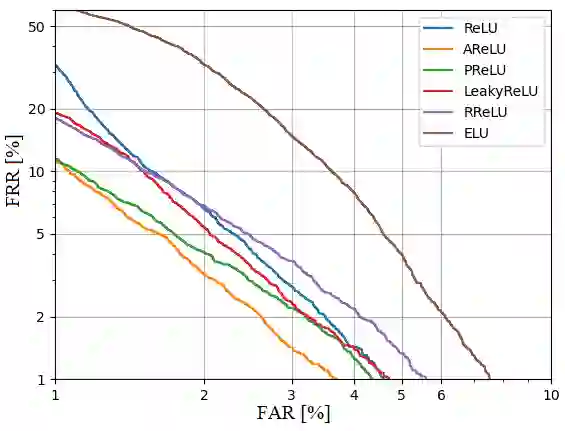

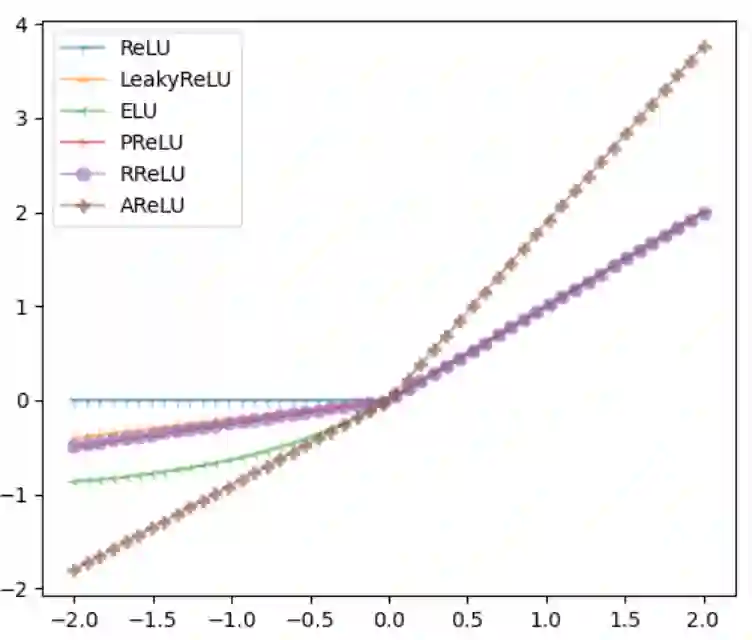

The main objective of a spoofing countermeasure system is to detect the artifacts that the speech synthesis or voice conversion process leaves in the input speech. To this end, we propose incorporating an attentive activation function, specifically the attention rectified linear unit (AReLU), into an end-to-end spoofing countermeasure system. AReLU uses an attention mechanism to amplify the contribution of relevant input features while suppressing irrelevant ones, so introducing it can help the countermeasure system focus on the features related to these artifacts. We evaluated the proposed framework on the logical access (LA) task of the ASVspoof 2019 dataset, where it outperformed systems using standard, non-learnable activation functions.
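The sketch below makes the attention mechanism concrete. It shows only the AReLU forward pass and assumes the formulation from the paper that introduced AReLU. In that formulation, a learnable scalar α is clamped to [0.01, 0.99] and scales negative inputs, while a learnable scalar β passes through a sigmoid and amplifies positive inputs by a factor in (1, 2). This is pure Python, and the parameters are plain floats rather than trainable tensors. The default values α = 0.90 and β = 2.0 are assumptions, not settings taken from this work.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


class AReLU:
    """Forward pass of an attention rectified linear unit (illustrative sketch).

    In a real network, alpha and beta would be learnable per-layer scalars
    trained by backpropagation; here they are fixed floats. The defaults
    (0.90, 2.0) are assumed initial values.
    """

    def __init__(self, alpha: float = 0.90, beta: float = 2.0) -> None:
        self.alpha = alpha
        self.beta = beta

    def attention(self, x: float) -> float:
        # Element-wise, sign-based attention weight:
        # negative inputs get alpha clamped to [0.01, 0.99] (suppression),
        # non-negative inputs get sigmoid(beta) in (0, 1) (amplification).
        if x < 0:
            return min(max(self.alpha, 0.01), 0.99)
        return sigmoid(self.beta)

    def __call__(self, xs: List[float]) -> List[float]:
        out = []
        for x in xs:
            if x < 0:
                # Negative side: x scaled down by the clamped alpha.
                out.append(self.attention(x) * x)
            else:
                # Positive side: ReLU output plus its attention-weighted copy,
                # i.e. x * (1 + sigmoid(beta)).
                out.append((1.0 + self.attention(x)) * x)
        return out


if __name__ == "__main__":
    act = AReLU()
    print(act([-2.0, 0.0, 1.0]))
```

Compared with a fixed ReLU, which zeroes negative inputs and passes positive ones unchanged, the two learned scalars let training decide how strongly each layer suppresses or amplifies activations. This is the property the countermeasure system relies on to emphasize artifact-related features.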

翻译:为了实现这一目标,我们提议采用一个专注的激活功能,更具体地说,将注意力修正线性单元(ARELU)放在端到端的表面反制系统上。由于ARELU利用关注机制来推动相关输入特性的贡献,同时抑制不相关的输入特性,引入ARELU可以帮助反措施系统关注与文物有关的特性。拟议的框架在ASVSpoof 2019数据集的逻辑访问(LA)任务上进行了实验,并用标准的不可撤销的激活功能优于系统。