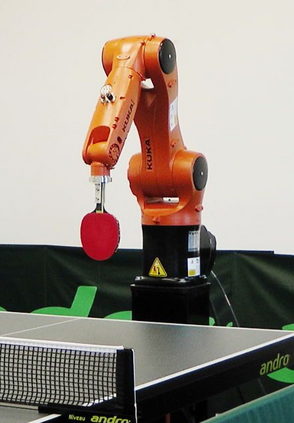

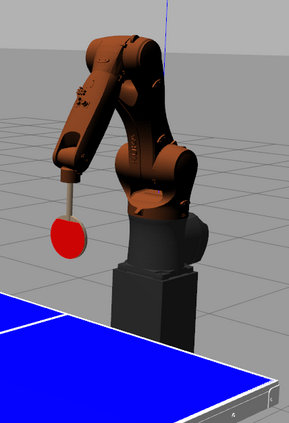

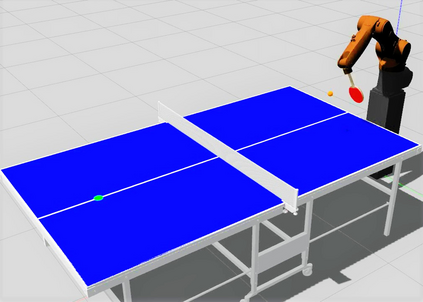

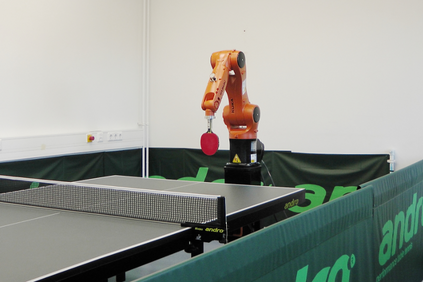

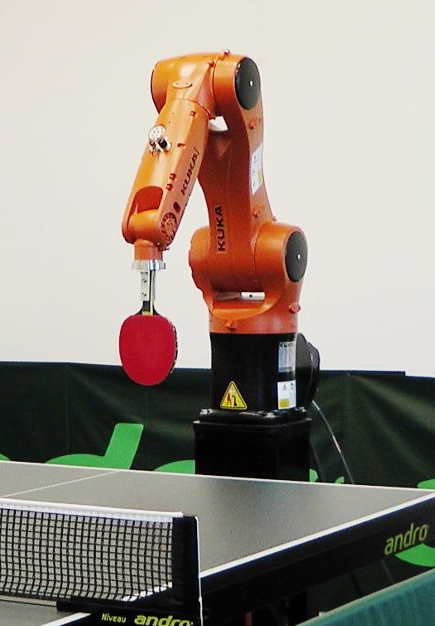

Learning to play table tennis is a challenging task for robots because a wide variety of strokes is required. Recent advances have shown that deep Reinforcement Learning (RL) can learn the optimal actions in a simulated environment. However, the applicability of RL in real scenarios remains limited due to the high exploration effort. In this work, we propose a realistic simulation environment in which multiple models are built for the dynamics of the ball and the kinematics of the robot. Instead of training an end-to-end RL model, we propose a novel policy gradient approach with a TD3 backbone that learns the racket strokes based on the predicted state of the ball at the hitting time. In the experiments, we show that the proposed approach significantly outperforms existing RL methods in simulation. Furthermore, to cross the domain from simulation to reality, we adopt an efficient retraining method and test it in three real scenarios. The resulting success rate is 98% and the distance error is around 24.9 cm. The total training time is about 1.5 hours.
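To make "the predicted state of the ball at the hitting time" concrete, the following is a minimal sketch of one way such a prediction could be computed. It is not the ball model used in this work, which the abstract does not specify. It assumes a purely ballistic flight (gravity only, with no drag, spin, or table bounce) and a hitting plane at a fixed x-coordinate. The names `BallState`, `predict_at_hitting_plane`, and `x_hit` are hypothetical.

```python
from dataclasses import dataclass

G = 9.81  # gravitational acceleration in m/s^2


@dataclass
class BallState:
    """Position (m) and velocity (m/s) of the ball; z points upward."""
    x: float
    y: float
    z: float
    vx: float
    vy: float
    vz: float


def predict_at_hitting_plane(ball: BallState, x_hit: float) -> tuple[float, BallState]:
    """Predict when and in which state the ball crosses the plane x = x_hit.

    Assumption (not from the paper): gravity is the only force acting on
    the ball, so x and y move at constant velocity and z follows a parabola.
    """
    if ball.vx == 0.0:
        raise ValueError("ball is not moving along x; it never reaches the plane")
    t = (x_hit - ball.x) / ball.vx  # time until the plane is reached
    if t < 0.0:
        raise ValueError("ball is moving away from the hitting plane")
    predicted = BallState(
        x=x_hit,
        y=ball.y + ball.vy * t,
        z=ball.z + ball.vz * t - 0.5 * G * t * t,
        vx=ball.vx,
        vy=ball.vy,
        vz=ball.vz - G * t,
    )
    return t, predicted


if __name__ == "__main__":
    t_hit, state = predict_at_hitting_plane(
        BallState(x=0.0, y=0.0, z=1.0, vx=2.0, vy=0.5, vz=1.0), x_hit=1.0
    )
    print(t_hit, state)
```

In a setup like the one described, a predicted state of this kind would serve as the input to the stroke policy, so that a single racket action is chosen per incoming ball rather than a control command at every time step.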
