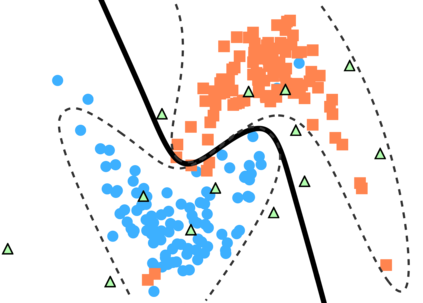

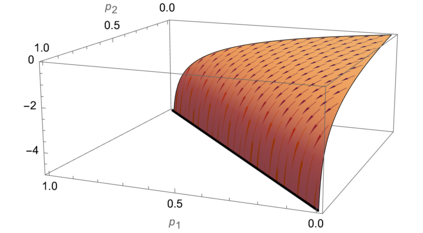

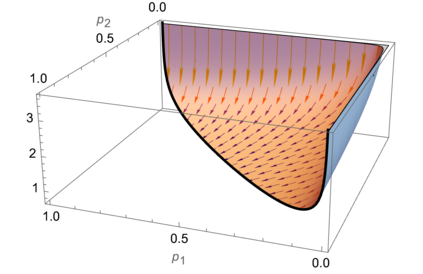

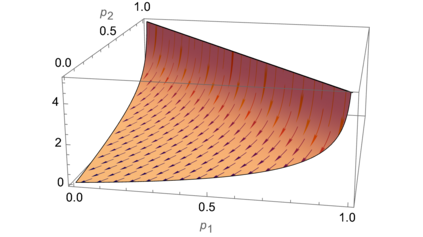

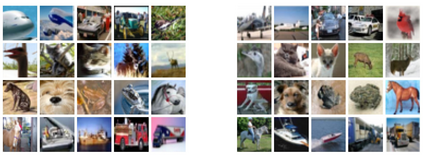

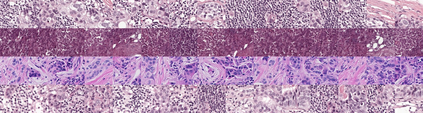

The ability to quickly and accurately identify covariate shift at test time is a critical and often overlooked component of safe machine learning systems deployed in high-risk domains. While methods exist for detecting when predictions should not be made on individual out-of-distribution test examples, identifying distribution-level differences between training and test data can help determine when a model should be removed from deployment and retrained. In this work, we define harmful covariate shift (HCS) as a change in distribution that may weaken the generalization of a predictive model. To detect HCS, we use the discordance among an ensemble of classifiers trained to agree on training data and disagree on test data. We derive a loss function for training this ensemble and show that the disagreement rate and entropy are powerful discriminative statistics for HCS. Empirically, we demonstrate the ability of our method to detect harmful covariate shift with statistical certainty on a variety of high-dimensional datasets. Across numerous domains and modalities, we show state-of-the-art performance compared to existing methods, particularly when the number of observed test samples is small.
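To make the two statistics named above concrete, here is a minimal sketch of how a disagreement rate and a predictive entropy could be computed from ensemble outputs. It is an illustration under stated assumptions, not the authors' implementation: the function names, the array shapes, and the choice to count a sample as "disagreeing" when any ensemble member differs from a base prediction are all assumptions, and the ensemble training loss derived in the paper is not reproduced here.

```python
import numpy as np


def disagreement_rate(base_pred, member_preds):
    """Fraction of samples on which at least one member differs from the base prediction.

    base_pred:    shape (n_samples,), hard labels from a reference classifier (assumed).
    member_preds: shape (n_members, n_samples), hard labels from each ensemble member.
    """
    base_pred = np.asarray(base_pred)
    member_preds = np.asarray(member_preds)
    # A sample counts as a disagreement if any member's label differs (assumed definition).
    disagrees = (member_preds != base_pred[None, :]).any(axis=0)
    return float(disagrees.mean())


def mean_predictive_entropy(member_probs, eps=1e-12):
    """Mean Shannon entropy (in nats) of the ensemble-averaged class probabilities.

    member_probs: shape (n_members, n_samples, n_classes), per-member softmax outputs.
    """
    member_probs = np.asarray(member_probs, dtype=float)
    avg = member_probs.mean(axis=0)  # average over ensemble members
    entropy = -(avg * np.log(avg + eps)).sum(axis=1)  # eps guards against log(0)
    return float(entropy.mean())


if __name__ == "__main__":
    # Toy inputs: two ensemble members, four samples, labels in {0, 1}.
    base = [0, 1, 1, 0]
    members = [[0, 1, 1, 0], [0, 1, 0, 0]]
    print("disagreement rate:", disagreement_rate(base, members))

    # Two members giving opposite one-hot outputs on a single sample.
    probs = [[[1.0, 0.0]], [[0.0, 1.0]]]
    print("mean entropy:", mean_predictive_entropy(probs))
```

In a detection setting, either statistic would be computed on a held-out in-distribution set and on the incoming test batch, and the two values compared with a significance test. That comparison step is omitted because the abstract does not specify it.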
