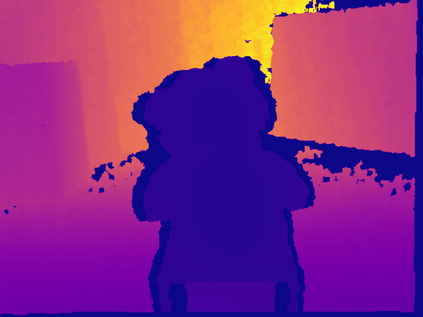

This work introduces an effective and practical solution to the dense two-view structure-from-motion (SfM) problem. A central question it addresses is how to use per-pixel optical flow correspondences between two frames judiciously for accurate pose estimation, since perfect per-pixel correspondence between two images is difficult, if not impossible, to establish. From the carefully estimated camera pose and the predicted per-pixel optical flow correspondences, a dense depth map of the scene is computed. An iterative refinement procedure then further improves optical flow matching confidence, camera pose, and depth, exploiting their inherent interdependence in rigid SfM. The key idea is to exploit per-pixel uncertainty in optical flow estimation and make the dense SfM system robust through online refinement. Concretely, we introduce a pipeline consisting of (i) an uncertainty-aware dense optical flow estimation approach that provides per-pixel correspondences together with a matching confidence score; (ii) a weighted dense bundle adjustment formulation, whose weights depend on optical flow uncertainty and bidirectional optical flow consistency, that refines both pose and depth; and (iii) a depth estimation network that accounts for consistency with the estimated poses and optical flow under the epipolar constraint. Extensive experiments on benchmark datasets such as DeMoN, YFCC100M, and ScanNet show that the proposed approach achieves high depth accuracy and state-of-the-art camera pose results, surpassing the accuracy of SuperPoint and SuperGlue.
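
To make item (ii) concrete, here is a minimal sketch of the kind of weighted objective such a dense bundle adjustment could minimize. It assumes a pinhole camera with focal length `f` and principal point `(cx, cy)`, forward and backward flow fields `fwd`/`bwd` stored as nested lists of `(u, v)` pixel offsets, and a per-pixel flow uncertainty `sigma`. All function names, the weighting rule `1 / (1 + sigma**2)`, the nearest-neighbour sampling of the backward flow, and the threshold `tau` are hypothetical illustration choices, not the paper's formulation. The code only evaluates the weighted reprojection cost for a given pose `(R, t)` and per-pixel depth; the optimizer (e.g. Gauss-Newton) that would refine pose and depth against it is omitted.

```python
import math


def backproject(f, cx, cy, x, y, depth):
    """Lift pixel (x, y) with the given depth to a 3D point in camera 1."""
    return ((x - cx) / f * depth, (y - cy) / f * depth, depth)


def project(f, cx, cy, X):
    """Pinhole projection of a camera-frame point X = (X, Y, Z), assuming Z > 0."""
    return (f * X[0] / X[2] + cx, f * X[1] / X[2] + cy)


def transform(R, t, X):
    """Apply the relative pose X' = R X + t (camera 1 -> camera 2)."""
    return tuple(sum(R[i][j] * X[j] for j in range(3)) + t[i] for i in range(3))


def fb_error(fwd, bwd, x, y):
    """Forward-backward flow error at pixel (x, y).

    The backward flow is sampled at the rounded forward-warped location
    (nearest neighbour, a simplifying assumption). Warps that leave the
    image are reported as infinitely inconsistent.
    """
    u, v = fwd[y][x]
    xq, yq = round(x + u), round(y + v)
    if not (0 <= yq < len(bwd) and 0 <= xq < len(bwd[0])):
        return math.inf
    bu, bv = bwd[yq][xq]
    return math.hypot(u + bu, v + bv)


def pixel_weight(sigma, fb_err, tau=1.0):
    """Hypothetical weight: reject inconsistent flow, down-weight uncertain flow."""
    if fb_err > tau:
        return 0.0
    return 1.0 / (1.0 + sigma * sigma)


def weighted_ba_cost(R, t, depth, fwd, bwd, sigma, f, cx, cy, tau=1.0):
    """Sum of weighted squared reprojection errors against flow-predicted matches."""
    cost = 0.0
    for y in range(len(fwd)):
        for x in range(len(fwd[0])):
            w = pixel_weight(sigma[y][x], fb_error(fwd, bwd, x, y), tau)
            if w == 0.0:
                continue  # match excluded from the objective
            u, v = fwd[y][x]
            X2 = transform(R, t, backproject(f, cx, cy, x, y, depth[y][x]))
            pu, pv = project(f, cx, cy, X2)
            cost += w * ((pu - (x + u)) ** 2 + (pv - (y + v)) ** 2)
    return cost


if __name__ == "__main__":
    # Synthetic scene: fronto-parallel plane at depth 2, pure x-translation of 1,
    # f = 2, principal point (0, 0), so the true flow is f * tx / depth = 1 pixel.
    H, W = 3, 4
    I3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    depth = [[2.0] * W for _ in range(H)]
    sigma = [[0.0] * W for _ in range(H)]
    fwd = [[(1.0, 0.0)] * W for _ in range(H)]
    bwd = [[(-1.0, 0.0)] * W for _ in range(H)]
    fwd[1][1] = (2.0, 1.0)  # corrupted match: fails the forward-backward check
    print(weighted_ba_cost(I3, (1.0, 0.0, 0.0), depth, fwd, bwd, sigma, 2.0, 0.0, 0.0))  # 0.0
    print(weighted_ba_cost(I3, (1.5, 0.0, 0.0), depth, fwd, bwd, sigma, 2.0, 0.0, 0.0))  # 2.0
```

With the true pose, every flow-predicted match reprojects exactly and the cost is zero; a wrong translation raises it. The corrupted match and the rightmost column (whose warp leaves the frame) receive zero weight, which illustrates how a bidirectional consistency check keeps unreliable correspondences out of the pose and depth update, while `sigma` softly down-weights the remaining matches.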
