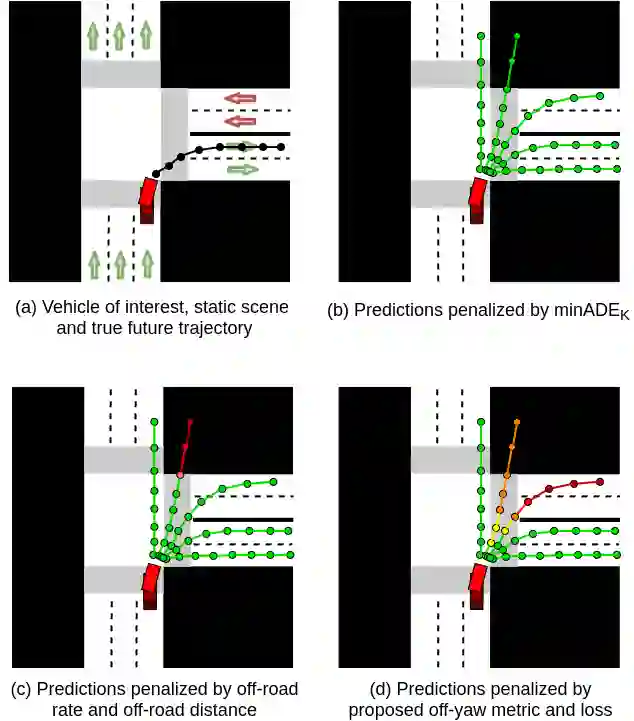

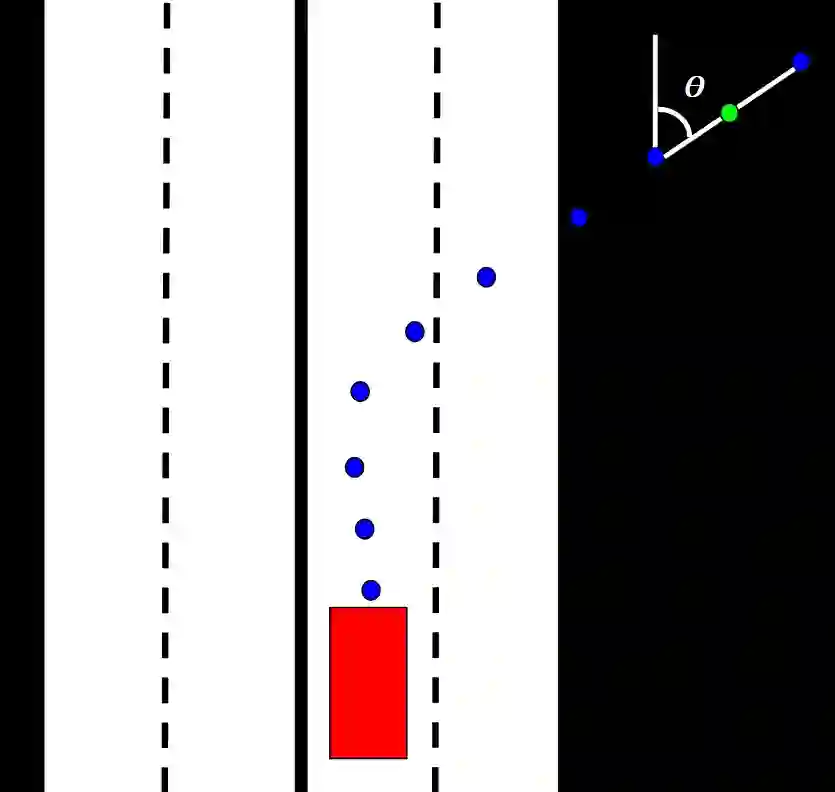

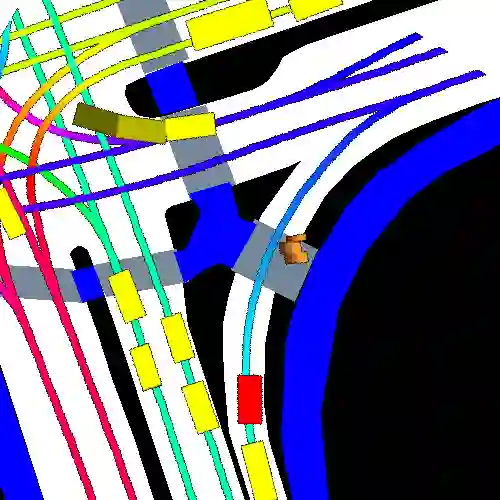

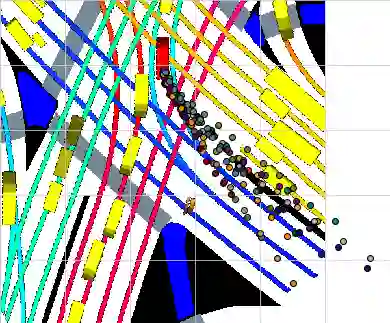

Predicting a vehicle's trajectory is an essential ability for autonomous vehicles navigating complex urban traffic scenes. Bird's-eye-view roadmaps provide valuable information for trajectory prediction. While state-of-the-art models extract this information via image convolution, auxiliary loss functions can augment the patterns inferred by deep learning by further encoding common knowledge of social and legal driving behaviors. Since human driving behavior is inherently multimodal, models that allow multimodal output tend to outperform single-prediction models on standard metrics. We propose a loss function that enhances such models by enforcing expected driving rules on all predicted modes. Our contribution to trajectory prediction is twofold: we propose a new metric that addresses failure cases of the off-road rate metric by penalizing trajectories that oppose the ascribed heading (flow direction) of a driving lane, and we show this metric to be differentiable and therefore suitable as an auxiliary loss function. We then use this auxiliary loss to extend the standard multiple trajectory prediction (MTP) and MultiPath models, achieving improved results on the nuScenes prediction benchmark by predicting trajectories that better conform to the lane-following rules of the road.
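
The idea of penalizing trajectories that oppose a lane's flow direction can be illustrated with a short sketch. This is not the formulation from the paper, which the abstract does not spell out. It is a minimal illustration under stated assumptions: the lane heading for each trajectory segment is assumed to be already looked up from the map and given in radians, and the function names `heading_penalty` and `multimodal_heading_penalty` are hypothetical. The penalty used here, one minus the cosine of the angle between the direction of motion and the lane direction, is smooth in the waypoint coordinates wherever the segment has nonzero length, which is the property that would make such a quantity usable as an auxiliary loss.

```python
import math


def heading_penalty(trajectory, lane_headings, eps=1e-9):
    """Mean of 1 - cos(angle between motion and lane direction) per segment.

    trajectory:    list of (x, y) waypoints in map coordinates.
    lane_headings: one lane heading in radians per segment (assumed to be
                   pre-computed from the map; hypothetical input format).
    Returns roughly 0 when driving with the lane flow and roughly 2 when
    driving against it.
    """
    if not lane_headings or len(trajectory) != len(lane_headings) + 1:
        raise ValueError("need one lane heading per trajectory segment")
    total = 0.0
    for (x0, y0), (x1, y1), h in zip(trajectory, trajectory[1:], lane_headings):
        dx, dy = x1 - x0, y1 - y0
        norm = math.hypot(dx, dy)
        # Projection of the unit motion vector onto the unit lane direction.
        # Note: a zero-length segment gives cos_delta = 0, i.e. penalty 1,
        # which is a simplification of this sketch.
        cos_delta = (dx * math.cos(h) + dy * math.sin(h)) / (norm + eps)
        total += 1.0 - cos_delta
    return total / len(lane_headings)


def multimodal_heading_penalty(modes, lane_headings_per_mode):
    """Average the penalty over every predicted mode, not only the best one."""
    penalties = [
        heading_penalty(traj, headings)
        for traj, headings in zip(modes, lane_headings_per_mode)
    ]
    return sum(penalties) / len(penalties)


# Lane flows along +x (heading 0 rad) for both segments.
with_flow = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
against_flow = [(2.0, 0.0), (1.0, 0.0), (0.0, 0.0)]
lane = [0.0, 0.0]

p_with = heading_penalty(with_flow, lane)
p_against = heading_penalty(against_flow, lane)
p_modes = multimodal_heading_penalty([with_flow, against_flow], [lane, lane])
```

Both example trajectories stay on the road, so a purely positional check would not separate them, while the heading-based penalty does. In a training setting the same expression would be written with a framework's tensor operations so that gradients flow back to the predicted waypoints, and averaging over modes mirrors the abstract's point that the rule is enforced on all predicted modes.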
